Audio-Driven Animation

FractalDimensia

Posts: 0

I mentioned some months ago in a thread that I had two interests in using PyCarrara - one has evolved into PySwarm. I thought I would share my first exploration into my other interest - using audio to drive Carrara scene animation.

This first test is a "proof of concept" to study how to use audio files and PyCarrara to drive objects, cameras, and lights in a Carrara animation. While PyCarrara comes with a way to manipulate objects using MIDI files, this study is on other formats - e.g., WAV and MP3.

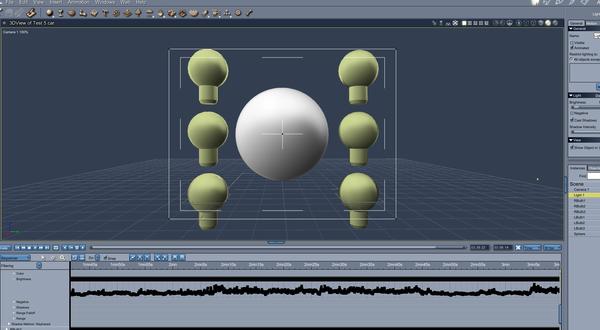

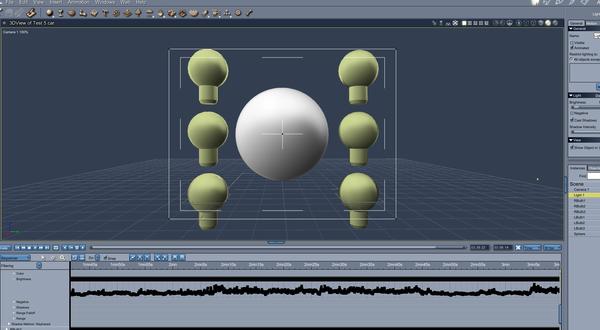

In this basic scene, the intensity of 6 lights positioned around a ball (3 on left, 3 on right) is driven by an MP3 audio file (see screenshot below), with some pre-processing required upfront. Data is split into low, mid, and high range for left and right channels (for 6 streams of data).
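The post doesn't say how the pre-processing was done. As a minimal sketch, assume the MP3 has already been decoded into two lists of float samples (`left` and `right`), and assume crossover points of 250 Hz and 4 kHz (arbitrary choices, not the ones used for the video). The six streams could then be produced like this. The sketch uses simple one-pole filters instead of an FFT so it needs only the standard library, and each stream ends up with one RMS value per animation frame. All function names are hypothetical.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order IIR low-pass filter."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def split_bands(samples, sample_rate, low_cut=250.0, high_cut=4000.0):
    """Split one channel into low/mid/high bands that sum back to the input."""
    below_low = one_pole_lowpass(samples, low_cut, sample_rate)
    below_high = one_pole_lowpass(samples, high_cut, sample_rate)
    mid = [h - l for h, l in zip(below_high, below_low)]
    high = [x - h for x, h in zip(samples, below_high)]
    return below_low, mid, high


def per_frame_rms(samples, sample_rate, fps):
    """Loudness (RMS) of the samples that fall within each animation frame."""
    n_frames = int(len(samples) * fps / sample_rate)
    values = []
    for f in range(n_frames):
        chunk = samples[int(f * sample_rate / fps):int((f + 1) * sample_rate / fps)]
        values.append(math.sqrt(sum(s * s for s in chunk) / len(chunk)) if chunk else 0.0)
    return values


def six_streams(left, right, sample_rate, fps):
    """Return [L low, L mid, L high, R low, R mid, R high], one value per frame each."""
    streams = []
    for channel in (left, right):
        for band in split_bands(channel, sample_rate):
            streams.append(per_frame_rms(band, sample_rate, fps))
    return streams
```

With `fps=30` and a 44.1 kHz file, each value summarizes 1470 samples.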

https://www.youtube.com/watch?v=D4oZ0UZeGig

The kinds of animation possible are quite extensive.

Objects - moved, scaled, and rotated (plus toggling shadow casting/receiving and visibility)

Lights - moved, scaled, and rotated (plus altering shadow casting/receiving, color, brightness, falloff, and range)

Shaders - Any channel can be altered (to some extent)
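All of these share one step. Here is a minimal sketch, assuming each audio stream has already been reduced to one number per animation frame: rescale the stream linearly onto whatever range the target property uses. The property names and ranges below are hypothetical examples. The call that actually writes the keyframes through PyCarrara is deliberately left out.

```python
def remap(values, out_min, out_max, in_min=None, in_max=None):
    """Linearly rescale a stream of per-frame values onto a property's range.

    If in_min/in_max are omitted, the stream's own minimum and maximum are used.
    """
    lo = min(values) if in_min is None else in_min
    hi = max(values) if in_max is None else in_max
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat stream
    return [out_min + (v - lo) / span * (out_max - out_min) for v in values]


# Hypothetical targets: the same stream can drive very different properties.
levels = [0.0, 0.25, 1.0]
brightness = remap(levels, 0.0, 100.0)  # light brightness in percent
scale = remap(levels, 1.0, 1.5)         # uniform object scale factor
angle = remap(levels, 0.0, 90.0)        # rotation in degrees
```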

I'll post more as I experiment with this idea.

I am also looking into ways to integrate this with PySwarm, by using audio data to alter some PySwarm parameters.

Comments

Now this I am really interested in; I just don't have a maths/scripting brain.

Very well!

I had tried to demystify these scripts, but I could not find the sample files you had provided. Does this really work with MIDI data, or with the .wav sound produced by your sound card's synthesizer?

I have noticed that 16-bit/48 kHz .wav files are not accepted by Carrara; 16-bit/44.1 kHz files are OK.
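The 48 kHz behaviour is an observation from this thread, not something confirmed from Carrara's documentation. Below is a minimal sketch for checking a WAV file's format with Python's standard `wave` module before importing it. The helper names are hypothetical, and converting the sample rate is left to an audio editor.

```python
import wave


def describe_wav(path_or_file):
    """Return (channels, bits per sample, sample rate in Hz) of a PCM WAV file."""
    with wave.open(path_or_file, "rb") as w:
        return w.getnchannels(), w.getsampwidth() * 8, w.getframerate()


def is_16bit_44k(path_or_file):
    """True if the file is 16-bit / 44.1 kHz, the combination reported to work."""
    _, bits, rate = describe_wav(path_or_file)
    return bits == 16 and rate == 44100
```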

Wendy, I remember a post you did some time ago about this topic. And I suspect a few others are interested in this.

Like most PyCarrara work, it gets technical quickly. I would still like to share what I'm learning, so I'll need to consider how to share this in a way to make it useful for others.

Thanks.

FD

Yes, unfortunately that's the reason I do not use a lot of this stuff.

I have f1oat's and Brian Orca's stuff too, the particles and the ocean, both too complex for a simple wannabe artist like me.

I bought Inagoni plugins that really leave me baffled too! Though I have done a bit of random fiddling.

I play music on my synthesizer and can make MIDI tracks, but the most I can do so far is stick the audio in Mimic to make phoneme-driven things, or use Casual's WAV-amplitude audiomation script with Fenric's ERC plugin to drive things like displacement values, colour mixing, or translations.

I would like to do more specific stuff.

The source is an MP3 file, so there is no MIDI involved. Also, the music was added to the final video AFTER it was rendered in Carrara. I have not tried loading audio into Carrara again, as I encountered problems the first time I tried.

The process I used involves converting the raw audio data into a long sequence of numbers. I then wrote a short Python script that reads this data and changes each bulb's brightness based on the values. It took about 4 hours to produce this video.
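The conversion script isn't posted in the thread, so this is only a minimal sketch of that kind of step. It assumes the MP3 has first been exported to a 16-bit PCM WAV (for example with Audacity) and that one value per video frame is wanted. It uses only Python's standard `wave` and `array` modules and keeps the loudest sample in each frame. The function names are hypothetical.

```python
import array
import wave


def wav_to_frame_levels(path_or_file, fps=30):
    """Decode a 16-bit PCM WAV and return one peak level (0..1) per video frame."""
    with wave.open(path_or_file, "rb") as w:
        if w.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = w.getnchannels()
        rate = w.getframerate()
        # 'h' = signed 16-bit integers; channels are interleaved L, R, L, R, ...
        samples = array.array("h", w.readframes(w.getnframes()))
    # Collapse channels to one value per sample position: the loudest channel.
    mono = [max(abs(s) for s in samples[i:i + channels])
            for i in range(0, len(samples), channels)]
    per_frame = rate / fps  # audio samples per animation frame
    levels = []
    for f in range(int(len(mono) / per_frame)):
        chunk = mono[int(f * per_frame):int((f + 1) * per_frame)]
        levels.append(max(chunk) / 32768.0 if chunk else 0.0)
    return levels


def save_levels(levels, out_file):
    """Write the 'long sequence of numbers', one value per line."""
    out_file.write("\n".join(f"{v:.4f}" for v in levels) + "\n")


def load_levels(in_file):
    """Read the numbers back, e.g. inside the script that animates the lights."""
    return [float(line) for line in in_file if line.strip()]
```

Inside Carrara, a script would then read one value per frame and set each bulb's brightness. That PyCarrara call is not shown because its exact API is not given in this thread.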

All useful information Wendy.

Maybe there is a simple way to import MP3 and WAV files to manipulate objects. When you have a chance, could you describe how you would use sound in animation (moving or scaling objects? changing shader channels?)? I'll try to put something together for you to try out when I can get to it.

FD

there was this attempt http://www.youtube.com/watch?v=WnIP3G874a4

Video description (published on Mar 2, 2013):

Silly mucking around with RA Mowg and Audacity. I wish I had a 3D spectrograph program but cannot find any; using the Mimic plugin in Carrara and morphs on a plane is not quite the same thing. Am I the only one to whom it has occurred that saving a WAV file as an animated 3D object would be kinda cool?

I have since done a spectrograph program, btw!

And Audacity outputs values for music files too.

Here: http://www.youtube.com/watch?v=2EfCkXKSlrw

http://www.youtube.com/watch?v=MMNVSlA6bUY

I used Casual's script here (http://www.daz3d.com/forums/discussion/8917/) to create the transform values for the displacement in that plane.

Step 2 in writing a Python script.

https://www.youtube.com/watch?v=1SLa7n5CHv8

While this "Spectrograph" demo is very simple, there is a lot of code behind it that I have not demoed yet. More in the next demo....

Thought to share this link here for anyone looking for inspiration on sound / animation projects: http://www.animusic.com/company/software.php

Their youtube channel: http://www.animusic.com/company/software.php

I stumbled upon a clip from animusic 1 and was simply bowled over.

Would be great to see people doing things like this in Carrara soon, elbow grease to all those leading the charge now....

Wow!

Both links above go to the same page, but you can find preview clips of the songs from the Preview menu.

Really really cool! I have to get these DVDs!

The music is great and the band is spot on! Love it, Love It!!!

Thank you, dreamarts.designagency, for sharing this!

Glad you liked it Dartanbeck. Had intended to share the youtube channel as well: https://www.youtube.com/user/AnimusicLLC

P.S. just got my screen name changed to something less of a mouthful....newbie issues!

Wow! I just watched them all... Very cool. I just put in this playlist, which includes everything up there except for the Resonant Chamber - Director's commentary.

I really enjoy this and will definitely be supporting them and buying the DVDs. Just too cool not to!

Very good. But can something like this be done with PyCarrara?

Most likely.

But something this complex certainly would not be plug-n-play. As the fellow says, the first one (7 songs) took three years, and the next one should have been faster. But that took three years too, so he began writing new code to try to make the workflow faster. No simple process here.

Still, PyCarrara has the ability to work with sound, so looking at the example for it would be a great place to start.

Animusic sure utilizes some heavy duty animation work, though. Just amazing!

Especially after

A - reading what FD says in the first post of this thread

B - what FD's been able to accomplish with PySwarm

I really think that this sort of thing could happen.

Then also consider what Frederick Ribble has done in both PyCarrara and PyCloid: we could even use some elements of the animation as particles with PyCloid. Just off-the-top thinking....

Now for even more food for thought:

What about using PyCarrara to control various portions of a control object, which guides the motions of an ERC modifier, using Fenric's amazing ERC for Carrara?

Seeing what faba did with a simple controller for a hand shows enormous potential for utilizing that as an option.

The main thing is to get the motions that appear to be making the sounds to interact properly with the music or sound in the scene. From there, further animations can be applied, like traveling around, dancing, etc.

It has to go through midi. A midi file (= text file) has the individual instruments played note by note. Even hard and soft playing is included in a midi file. You cannot do this with an audio file (wav, mp3). This has only limited options.
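To illustrate the point about hard and soft playing: in the MIDI wire format, each note-on message carries a velocity byte (1 to 127). Below is a minimal sketch that decodes raw 3-byte note messages and turns velocity into an animation intensity. It assumes nothing about how PyCarrara's own MIDI support exposes these values, and the helper names are hypothetical.

```python
def decode_note_message(data):
    """Decode a 3-byte MIDI channel message.

    Returns ("on" | "off", channel, note, velocity), or None for any other message.
    The channel is 0-based (0..15).
    """
    status, note, velocity = data[0], data[1], data[2]
    kind, channel = status & 0xF0, status & 0x0F
    if kind == 0x90 and velocity > 0:
        return ("on", channel, note, velocity)
    if kind == 0x80 or kind == 0x90:
        # Note-off, or a note-on with velocity 0 (conventionally a note-off).
        return ("off", channel, note, velocity)
    return None


def velocity_to_intensity(velocity):
    """Map MIDI velocity (0..127) onto 0..1, e.g. for a light's brightness."""
    return velocity / 127.0
```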

With Carrara we also have the option of using target helpers for control. An arm can be moved around more easily.

Not sure if this is possible, but it would be very helpful, if NLA could be triggered by midi / PyCarrara.

I am not very technical and busy doing other things, but one day I am going to dive into this. Probably have to study Python first.

It was seeing Animusic's videos that got me going several years back into this idea. I really loved their early animations. But I wanted it to work with WAV/MP3 files because the library of good MIDI source files is just not that extensive.

After the small tests that I have conducted so far using Python's add-on sound packages, I believe it is possible to get very close to a note-by-note translation of WAV/MP3 files through Python. I have just not had time to do much digging into this lately, but can say that my early tests work well.

If you examine Frederic's "spectro-fountain":

https://www.youtube.com/watch?v=3DK-Ee7rB1Q

You'll see the level of granularity is quite fine. Judging from his source code, he created about 100 different emitters, each operating at a different frequency range. That's nearly 1 emitter per note.
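Frederic's actual code isn't reproduced here. As a stand-in, here is a minimal sketch of the "one emitter per note" idea. It measures the signal strength at each piano note's equal-tempered frequency, f = 440 · 2^((n − 69)/12), using the Goertzel algorithm, which computes the strength at one chosen frequency without a full FFT. The note range and function names are assumptions. Each resulting value could then drive one emitter or object.

```python
import math


def note_frequency(note):
    """Equal-tempered frequency of a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


def goertzel_power(samples, freq, sample_rate):
    """Signal power at one frequency, via the Goertzel algorithm."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / sample_rate)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def note_levels(samples, sample_rate, notes=range(21, 109)):
    """One level per note (the 88 piano keys by default), like one emitter per note."""
    return {n: goertzel_power(samples, note_frequency(n), sample_rate) for n in notes}
```

The analysis window needs to be long enough to separate neighbouring notes. At 44.1 kHz, a few thousand samples is enough in the middle octaves, but low notes are much closer together and need longer windows.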

Most of the Python coding challenge is in linking specific notes to Carrara actions (or reactions).
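Here is one way to express that link, as a minimal sketch. It assumes the notes are already available as `(frame, note, velocity)` tuples and uses a lookup table from note number to a target object and property, collected into keyframe lists. The object and property names are placeholders rather than PyCarrara identifiers, and writing the keyframes into Carrara is left out.

```python
from collections import defaultdict


def build_keyframes(note_events, actions):
    """Group (frame, note, velocity) events into keyframe lists per target.

    `actions` maps a note number to an (object_name, property_name) pair.
    Both names are placeholders, not PyCarrara identifiers.
    Notes without an entry in `actions` are ignored.
    """
    keyframes = defaultdict(list)
    for frame, note, velocity in note_events:
        if note in actions:
            keyframes[actions[note]].append((frame, velocity / 127.0))
    return dict(keyframes)


# Hypothetical mapping: 36 and 38 are kick and snare in the General MIDI drum map.
actions = {36: ("KickLight", "brightness"), 38: ("SnareBall", "scale")}
events = [(0, 36, 127), (12, 38, 64), (24, 36, 0), (30, 42, 90)]
keyframes = build_keyframes(events, actions)
```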

you mean like this -

http://youtu.be/7HsTqUhB3HU

FractalDimensia, do a search for "midi files" and you will find plenty. You have very limited options with WAV/MP3. You can probably recognize the beat, increases in volume, and maybe some notes. But if you have a sound file with a guitar, a piano, strings, and a drum, there is no way the animation can respond to the piano notes only. It is impossible to do the things from bigh's video clip.
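Beat detection is the easiest of those to sketch. Here is a minimal version, assuming a list with one energy value per animation frame: flag any frame whose energy is well above the average of the frames just before it. The 30-frame history and the 1.5 threshold are arbitrary starting values, not tuned numbers.

```python
def detect_beats(frame_energy, history=30, threshold=1.5):
    """Return the indices of frames whose energy exceeds `threshold` times the
    average energy of the preceding `history` frames."""
    beats = []
    for i, energy in enumerate(frame_energy):
        window = frame_energy[max(0, i - history):i]
        if window and energy > threshold * (sum(window) / len(window)):
            beats.append(i)
    return beats
```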

Bigh, this is what I had in mind for doing in Carrara.

I guess it all depends on your frame of reference.

While I have "Band In A Box" and other music creation apps, and while I intend someday to write my own music and save it in MIDI format while creating realistic sounds AND driving animation, I also intend to create animations from existing music - e.g., making music videos from existing music that is in MP3/WAV format, not in MIDI.

There are some apps out there that are currently making headway in being able to separate different instruments from an MP3 and creating MIDIs with separate tracks/channels, but they are still mostly experimental. So I fully appreciate the limitation of MP3 animation.

However, my biggest issue with MIDI is that unless you're willing to spend US$500-1000 (minimum), you aren't going to get good quality sound from a MIDI file. If you're going for channel-based note-by-note animation, then yep, MIDI is the way to go. This is just not the only form of animation. :)

I don't agree. A midi file is a text file without any sound. The sound is created by linking instruments. If your midi file sounds bad, it means it is linked to bad sounding mp3 (?) files. Very often they squeeze the bit rate to get a smaller file.

Because you have BIAB, it is very easy. Import the midi file and change the instruments. BIAB itself has some decent ones. Only a good ear can tell the difference. If you want better, try to find better sample sound files. There are several free ones.

The MIDI protocol (Musical Instrument Digital Interface) does not produce any sounds; it is intended to exchange information with synthesizer hardware or software.

Basic MIDI is universal (General MIDI).

It defines 128 “Program Changes” (instrument sounds), though not all are always used, plus controls for volume, modulation, effects, etc.

These controls are sent as Control Changes, along with other exchanged data.
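For reference, these messages are only a few bytes each. Below is a minimal sketch that builds raw Program Change and Control Change messages (channels are numbered 0 to 15 on the wire). In General MIDI, program 0 is the Acoustic Grand Piano, controller 7 is channel volume, and controller 1 is the modulation wheel. The helper names are hypothetical.

```python
def program_change(channel, program):
    """Program Change: select instrument `program` (0..127) on `channel` (0..15)."""
    if not (0 <= channel <= 15 and 0 <= program <= 127):
        raise ValueError("channel must be 0..15 and program 0..127")
    return bytes([0xC0 | channel, program])


def control_change(channel, controller, value):
    """Control Change, e.g. controller 7 = channel volume, 1 = modulation wheel."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("channel must be 0..15, controller and value 0..127")
    return bytes([0xB0 | channel, controller, value])
```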

Manufacturers also have more advanced protocols: XG for Yamaha, GS for Roland…

To play a MIDI file, the investment can be zero. AUDACITY is an excellent free program, and Windows can play Standard MIDI Files with the instruments of your sound card, which certainly has one or two built-in banks.

Sci-Fi FUNK, like me, is a musician and could also tell you about it, and that could take a while!

This is what I mean by it being just a matter of perspective. It is not about agreeing or disagreeing. I understand your point of view; I just have a different one. For me, MIDI sound just doesn't cut it. But, hey, I still like listening to my old vinyl jazz and blues records with my old Infinity Reference Standard speakers, which IMHO ranks second only behind listening live.

Sound produced via a MIDI file is synthesized. Period. Even if the synthetic music generated from MIDI notes originated from a "live" instrument (such as what BiaB does), it is still synthesized. (Of course, I'm ignoring synth instruments here, and am referring to instruments you would find in most orchestras, rock groups, bands, etc. ... And yes, synths are sometimes in these.)

If you are not using a high-end synth sound production system that produces realistic sound effects (attack, crescendos, etc.), it is easy for anyone to tell the difference between live and synthesized. BIAB isn't bad; it's better than most I've tried. But it's just not great either. Even quality synth productions sound false, as it is nearly impossible to achieve the acoustic dynamics of sound rooms (e.g., symphony halls). Yes, I know these can be programmed into a sound editor to recreate (synthesize) these effects. But they just aren't the same.

Also, a MIDI file is not a text file; it has its own binary file format. A header chunk provides general information (file format, number of tracks, timing resolution), and the instruments are separated into track chunks, with notes and other events providing all the information about that instrument - e.g., when a note begins and ends. Even the tempo is stored as an event inside a track rather than in the header. There's very little text in a MIDI file.
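Here is a minimal sketch of reading that structure with Python's `struct` module. The file starts with an `MThd` chunk (format, number of tracks, timing division), followed by one `MTrk` chunk per track containing the events. The function names are hypothetical, and the track events themselves are not decoded here.

```python
import struct


def read_midi_header(data):
    """Parse the MThd chunk at the start of a Standard MIDI File."""
    if len(data) < 14:
        raise ValueError("file too short for a MIDI header")
    chunk_id, length, fmt, ntracks, division = struct.unpack(">4sIHHH", data[:14])
    if chunk_id != b"MThd" or length < 6:
        raise ValueError("not a Standard MIDI File")
    # If the top bit of `division` is clear, it is ticks per quarter note;
    # otherwise it encodes SMPTE-based timing.
    return {"format": fmt, "tracks": ntracks, "division": division}


def list_chunks(data):
    """Yield (chunk_id, length) for every chunk, e.g. one MTrk per track."""
    pos = 0
    while pos + 8 <= len(data):
        chunk_id, length = struct.unpack(">4sI", data[pos:pos + 8])
        yield chunk_id, length
        pos += 8 + length
```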

Finally, while MIDI files can synthesize most instruments (though not very well IMHO), they cannot reproduce the human voice. If you wanted to use a sound track that contained a human voice (or real human choir), MIDI won't get you there. MP3/WAV or some other audio format that can be used to reproduce such sounds is required.

There are samplers which can load .wav files, and there are also instruments based on sampled and looped real waveforms.

Native Instruments and a few others come close to perfection!

Adding this, to make it clearer.

You have two kinds: synthesized and the real thing. The latter are recordings of each note of real instruments, like a grand piano. You cannot hear the difference, because it is the real thing. After that, you probably have to do some mixing and mastering, but that is a different story.

I agree on solo voices, but for choirs we also have the real thing. With these, every letter is recorded individually: type "music" and the choir sings that word.

https://www.youtube.com/watch?v=u2ClJqydAUs

Right.

Having the ability to use other file formats can only make the whole system more powerful, IMHO. MIDI would be the obvious answer for making things like Animusic and the Blender one above, but that shouldn't diminish FD's drive to work with other sound files. I think it's cool.

Arguments about midi vs real could carry on indefinitely since you can use real to enhance midi and the other way around.

The ability to control each thing separately via MIDI information just seems like the easiest way to go if you're looking for full automation. I also like what FD was doing with his approach. We all have different drives and needs. It's cool that we can do any of this at all :)

FD,

This is really so cool, what you are currently working on! I am excited that you are working on this topic! I've seen your two tests, and this is exactly what I was missing over the last few weeks while creating my first music video. I really missed the possibility of driving rendering via WAV/MP3! In the end I decided to use external tools to visualize my music. But seeing your demos, I think the block spectrum analysis used in my video becomes possible in Carrara! This opens up so many possibilities for innovative music clip creation with Carrara!

Will you include this stuff in PySwarm? Or will you share the scripts and workflow you use for your YouTube videos somewhere? Do you think a visualization like the one in my video will be possible in Carrara with your scripts? I guess the visibility of the individual blocks would somehow have to be turned on and off by the music data.
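Here is a minimal sketch of that on/off idea. It assumes each band's level for the current frame has been normalised to 0..1 and that each column of the analyzer is a stack of separate block objects; the bottom blocks are lit in proportion to the level. The block count is an assumption, and turning each flag into a visibility keyframe in Carrara is left out.

```python
def visible_blocks(band_levels, blocks_per_column=10):
    """Return, for each band, on/off flags for a column of stacked blocks.

    Levels are clamped to 0..1; the bottom round(level * blocks_per_column)
    blocks of each column are switched on.
    """
    columns = []
    for level in band_levels:
        lit = round(max(0.0, min(1.0, level)) * blocks_per_column)
        columns.append([row < lit for row in range(blocks_per_column)])
    return columns
```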

Here is my first music video, where I use a slightly flat-looking block spectrum analyzer:

http://youtu.be/cRitFc-ihCQ

This software looks interesting:

http://magicmusicvisuals.com/

Dunno if one can integrate it with Carrara somehow.

Thanks Wendy for sharing that link. I have since played with Magic Music Visuals a bit; it is quite a nifty tool and I will be exploring it some more. Because I have an interest in projection mapping, I was lured into looking at Modul8 when I discovered it while browsing some of Holly Wetcircuit's works on her website. Unfortunately Modul8, as powerful a tool as it is, is only available for Mac users. Then I found out that Magic Music Visuals (affordable) can be paired with VPT (free) for projection mapping. So MMV + VPT might just be the cool, affordable yet productive way to go for a non-Mac user... more on that later, after I actually CONFIRM that to be the case.

Meanwhile, I didn't stop by just to yap about my excursions into the mysterious world of VJ-ing and projection mapping. I found this pretty neat example of audio-driven animation done in Cinema 4D, and it got me thinking about this thread and wondering how FractalDimensia and others can be lured back here to have mercy on those of us in Carrara land by finishing this quest.
