The Shader Mixer Deciphered (Blender to Daz Conversion)

Like many Daz users, I've tried to avoid the Shader Mixer since Daz's documentation is a little, well...

Anatomy of a Brick (WIP) [Documentation Center] (daz3d.com)

But just now, inspired by a few threads in the Commons, I took another crack at learning it. And this time, lo and behold, I actually understand how it works.

...sort of.

I'm posting this in the Blender subforum because it's basically a guide for converting Blender shaders to Daz shaders. It presumes you're familiar with how Blender's (much better documented) Shader Editor works.

Essentially, the two shader editors are the same thing, with all the parts named differently.

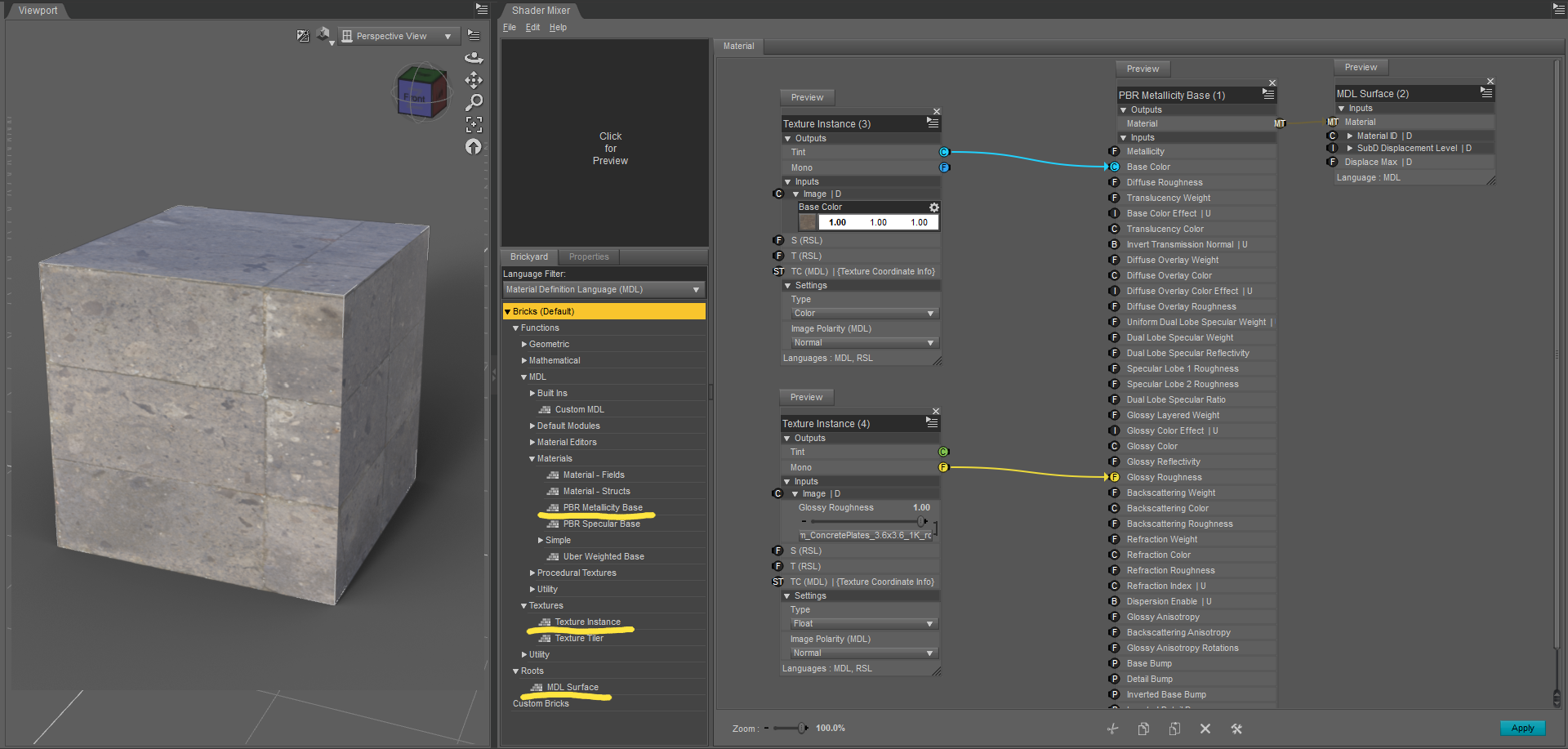

First, if you want to make an Iray shader, make sure "Material Definition Language" is selected in the combo box atop the left-hand column. Otherwise, it'll only show you the 3Delight nodes.

"MDL Surface" is the same as its counterpart, Material Output, in Blender. You route all of your nodes into that. Unlike Blender, if you want to apply the material in Daz you first need to select the object's surface and hit the "Apply" button in the corner to push the new shader to your object. It doesn't automatically update.

"PBR Metallicity Base" is effectively the Principled BSDF node. You send all of your texture maps into that, and it converts them into a shader. This is a simplified representation of what you see in the surfaces tab.

"Texture Instance" is where your actual texture maps go. You go to the image section and stick your textures in there. At the bottom, there's a combo box that says "Type" and offers "Color" and "Float". This is equivalent to the "sRGB" / "Non-Color Data" dropdown in Blender's Image node. Color means the texture holds color you want to display, while Float means you want to use its black-and-white data for shading, like a roughness map. Depending on which one you choose, the image section will switch between a color picker and a scalar slider. At the very top, there's "Tint" and "Mono", which I believe are equivalent to the "Color" and "Factor" outputs from Blender nodes: another way of choosing between color information or black-and-white data.

Best as I can figure, the letters inside the sockets indicate what type of data it expects. (C)olor, (F)loat/(F)actor, (I)nteger, (B)oolean, etc.
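A minimal sketch of what the Texture Instance Color/Float switch most likely amounts to, assuming Daz behaves like Blender here: "Color" decodes the image with the standard sRGB curve into linear values, while "Float" passes the stored numbers through untouched. The function names are made up for illustration; this isn't Daz code.

```python
def srgb_to_linear(c: float) -> float:
    """Standard sRGB decoding curve (IEC 61966-2-1) for one channel in 0-1."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def sample_texel(value: float, tex_type: str) -> float:
    """Hypothetical model of the Texture Instance "Type" switch.

    "Color": assumed to decode sRGB to linear, like Blender's sRGB setting.
    "Float": assumed to use the stored value as-is, like Non-Color Data.
    """
    if tex_type == "Color":
        return srgb_to_linear(value)
    if tex_type == "Float":
        return value
    raise ValueError(f"unknown texture type: {tex_type!r}")


# The same mid-gray texel (8-bit value 128) read both ways.
texel = 128 / 255
print("Color:", round(sample_texel(texel, "Color"), 3))
print("Float:", round(sample_texel(texel, "Float"), 3))
```

That's why a roughness map loaded as Color would come out darker than intended: an 8-bit mid-gray of 128 is decoded to about 0.216 instead of staying near 0.5.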

That's about all I could figure out after 10-15 minutes of experimentation. I still have no idea how bump/normal maps or texture coordinates are supposed to work, since the nodes that seem like they would affect that...don't.

Nonetheless, now that I've conquered the major hurdle of putting a picture on a thing, I feel a little more confident I'll figure the rest out.

Comments

Well this is interesting. I thought Shader Mixer could only create 3Delight layouts. Thank you for sharing, I will experiment with this soon too.

When I first embarked on this endeavor, I tried to use the Shader Mixer's File Menu to open some shaders and study them. But no matter where I looked, all the folders seemed to be empty.

After a bit more research, I discovered you have to select a shader already in your scene and hit "Import From Scene".

And not gonna lie, when I first laid eyes on it, there were so many tentacles I thought I had a run-in with Cthulhu.

But after some time spent studying diligently (most of it tracing the node connectors), I pieced together how to apply normal and bump maps. You connect the "Texture Instance Normal Map" to the "Base Bump" socket and the "Texture Instance Bump Map" to the "Detail Bump" socket. That's how they're wired, even though those nodes have "N" connectors and the two sockets specify "P" (position, I think; basically Daz's equivalent of Blender's Vector socket). And even though, when I tried it with the cube, it didn't work.

But...whatever.

(Also, it's a bit tricky to load texture maps into those two nodes. Since they have scalar sliders, it doesn't look like there's a slot for textures like the color picker. You need to hit the tiny little triangle to the right of the slider to open the dropdown.)

Anyway, since the default Genesis 8 doesn't have normal maps, I elected to swap his face materials with Diego 8 for some nice contrast.

No Bumpmapping

Bumpmapping

As you can see, it's working. Not particularly well, since it's missing about 800 million nodes from the Uber shader (including the SSS, though I don't really understand how that works in Blender either), but it is working. Most free PBR textures only use these four maps--or five, if there's a metallic map--so this here is pretty much all you need to recreate a Blender shader in Daz Studio.

This, and the patience to trawl through an endless list of undocumented helper nodes looking for the specific one you need.

Happy hunting.

It's almost like they don't want people knowing what their software can actually do or something.

LOL at that ubershader pic - that is pretty much how every node-based system looks to anyone who hasn't used one before. Crazy!

Good work so far. Do you have any idea yet what kinds of bricks might exist here that we otherwise would never be able to use? I see some generated textures (like brick, Voronoi, etc.), but I'm still not sure what we could be missing in our usual Uber and PBR shaders that higher-end programs might have.

Right now, I'm more interested in learning the fundamentals of mixing and masking textures. I want to see if it's faster and more robust than the (slow AF) LIE (Layered Image Editor).

Heh, good idea. LIE is not only slow, but a nightmare if you want to undo it.

I guess the only thing I can think of that I was able to do with Blender shaders that I wish I could in Studio is the ability to use hue/sat, contrast, and other color correction nodes on textures. It makes adjusting grayscale maps much easier, and it was useful for making more subtle changes to textures without having to edit them in 2D software. Hope something similar is buried in Shader Mixer somewhere. :)

You can do those things with math nodes, and the Shader Mixer has more than enough of those.
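For a rough idea of how that works, here's a sketch in Python of three common corrections built only from add, multiply, and power operations, the kind of thing the math bricks provide. The function names, the 0.5 contrast pivot, and the Rec. 709 luminance weights are my own choices, not anything taken from the Shader Mixer.

```python
def contrast(v: float, amount: float, pivot: float = 0.5) -> float:
    """Push values away from (amount > 1) or toward (amount < 1) a pivot: subtract, multiply, add."""
    return (v - pivot) * amount + pivot


def gamma_correct(v: float, exponent: float) -> float:
    """Power curve: exponent > 1 darkens midtones, exponent < 1 brightens them."""
    return max(v, 0.0) ** exponent


def adjust_saturation(rgb: tuple, amount: float) -> tuple:
    """Move each channel toward (amount < 1) or away from (amount > 1) the luminance."""
    r, g, b = rgb
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 weights
    return tuple(luma + (c - luma) * amount for c in rgb)


def clamp01(v: float) -> float:
    """Keep results in the 0-1 range a texture input expects."""
    return min(max(v, 0.0), 1.0)


# Example: punch up a grayscale roughness value, then fully desaturate a color.
print(clamp01(contrast(0.6, 2.0)))
print(adjust_saturation((1.0, 0.0, 0.0), 0.0))
```

Hue shifts are the odd one out: they need a conversion to something like HSV and back, which takes noticeably more bricks.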

In addition to the PBR Metallicity node that replicates the Principled BSDF, the Shader Mixer has "simple" materials, replicating the nodes used before the Principled BSDF was added. Diffuse, Glossy, etc. In this example, I just fed a simple diffuse into the MDL Surface to get a nice matte color.

Blend Colors works pretty much the same as MixRGB. You choose the mix type, the factor, and the two colors you want to mix.

Actually setting the blend type was tricky. Since we can see the socket accepts an (E)num datatype, what you need to do is create an Enum Value node and connect it to the socket. The Enum Value node will be blank at first, but once you connect it, it'll automatically populate with the correct enumeration: in this case, Photoshop-style blending modes.
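As a mental model, this sketch mimics Blender's MixRGB behaviour: apply the chosen blend mode per channel, then fade it in by the factor. The mode names and formulas are the standard Photoshop-style ones; I haven't checked which exact modes Daz's enum lists or whether it implements them identically. The gray base color in the example is hypothetical.

```python
from typing import Callable, Dict, Tuple

RGB = Tuple[float, float, float]

# Standard per-channel formulas; a = base color, b = blend color.
BLEND_MODES: Dict[str, Callable[[float, float], float]] = {
    "normal":   lambda a, b: b,
    "multiply": lambda a, b: a * b,
    "screen":   lambda a, b: 1.0 - (1.0 - a) * (1.0 - b),
    "add":      lambda a, b: min(a + b, 1.0),
    "overlay":  lambda a, b: 2.0 * a * b if a < 0.5 else 1.0 - 2.0 * (1.0 - a) * (1.0 - b),
}


def blend_colors(mode: str, factor: float, base: RGB, blend: RGB) -> RGB:
    """Apply the blend mode, then mix it in by `factor` (0 = base only, 1 = full effect)."""
    op = BLEND_MODES[mode]
    return tuple(a + (op(a, b) - a) * factor for a, b in zip(base, blend))


# Red blend color at factor 0.5, as in the post, over a (hypothetical) gray base.
gray, red = (0.5, 0.5, 0.5), (1.0, 0.0, 0.0)
for mode in ("normal", "multiply", "screen"):
    print(mode, blend_colors(mode, 0.5, gray, red))
```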

The Direct Value node is similar to Blender's Value and RGB nodes. You pick the data type from the dropdown at the bottom and then put whatever value you want into the "Input" section. In this example, I'm using one to control the blend color (red) and another to control the blend factor (0.5).

Also, helpful tip: if you hit the options button in the upper right, you can hide unused sockets. This works pretty much the same as using Ctrl-H on a node in Blender. It'll collapse it to save space--and since the Shader Mixer for some baffling reason keeps resizing the space you can work with, you'll need as much as you can get.

Here's my take on how the brickyard is organized:

Functions -> Geometric

Bump, normal, and displacement nodes.

Functions -> Mathematical

A math library, divided into both simple operations (add, multiply, etc.) and complex operations (sin, pow, etc.). In a pinch, you could use these to do some color correction.

Functions -> MDL

The big boy. It seems like this is where all the magic happens.

Functions -> MDL -> Built Ins

Contains BSDF (Bidirectional Scattering Distribution Function), EDF (Emission Distribution Function), and VDF (Volume Distribution Function). In general, these describe how light interacts with the surface or volume. But I don't know how they're meant to be used in Daz, since they don't take inputs.

Functions -> MDL -> Built Ins -> Types

Contains a big list of primitive datatypes, like float, int, etc. It seems like these are used for converting packed datatypes--two floats bundled as one--into separate float values?

Functions -> MDL -> Custom MDL

Allows you to call shaders defined in scripts?

Functions -> MDL -> Default Modules

The most promising section, since it's just a big list of "things you can do with Iray".

Functions -> MDL -> Material Editors

Seems to control the clearcoat/top coat values of a shader. The things that are layered atop your regular textures. Also seems like you can query and control normals and backfaces here, so if you want to make a one-way mirror, this is where you'd do it.

Functions -> MDL -> Materials

Equivalent to Blender's various BSDF nodes. Not only are there the three uber nodes (Metallicity, Specular, and Weighted), but there are also some very simple materials (metal, plastic, glass) that only accept a handful of inputs.

Functions -> MDL -> Procedural Textures

Nodes to blend colors and create Perlin noise and checkerboard patterns. Oddly, it seems like these are duplicated from the Default Modules section.

Functions -> MDL -> Utility

I presume these are the same as the Utility nodes mentioned below, allowing users to input arbitrary data. But aside from Luminance Units, I have no idea what any of them do.

Functions -> Textures

Lets you load images.

Functions -> Utility

These nodes let the user input arbitrary data values to be used by the shader (Direct Value and Enum Value, as mentioned above).

Roots

Just contains the MDL Surface node.

I found this NVIDIA technical document, which seems to outline some of the shaders under Functions -> MDL -> Default Modules -> df. I presume the "df" stands for "distribution function", as in BSDF.

I had another go at understanding the Uber shader. Though it looks daunting (and it is), if you know what you're looking for it's not that hard to puzzle out.

To the left, there's the User Parameters node. Most tutorials on YouTube are ancient and meant for 3Delight shaders, but I managed to find one guy who made an Iray Shader Editor tutorial, with a grand total of...130 views. Per his video, the User Parameters node acts as a frontend. If you plug a node into it, it'll expose that value outside of the Shader Mixer in the surfaces tab, which is how most of us are used to adjusting shader values.

Most of the nodes arranged in one great big column in the center are merely texture instance nodes, or specialized normal/bump texture instance nodes. Even though they're called "texture instance" nodes, the default Uber Base shader doesn't contain any textures and just treats these nodes as scalar sliders or color pickers. Again, this is how the surfaces tab we're all familiar with operates; it's just presented in a different way.

So when a texture or value is adjusted in the shader tab/User Parameters, it goes into these texture instances via the Image slot. The User Parameters node also exposes the Tiling/Offset controls that adjust how a texture tiles. It routes that coordinate information into a Surface Tiling node, and from there it blooms outward like a beautiful, confusing flower, connecting to each and every texture instance node, so they all share the same tiling information. The "S (RSL)" and "T (RSL)" sockets on the Texture Instance nodes seem to do the same thing, except for 3Delight.
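Here's a sketch of why one shared tiling node keeps everything lined up. It assumes the usual convention (scale the UVs, add the offset, then wrap into 0-1 so the image repeats); I haven't confirmed the order Daz applies tiling and offset in, and `surface_tiling` is a hypothetical name, not the actual brick.

```python
import math
from typing import Tuple

UV = Tuple[float, float]


def surface_tiling(uv: UV, tiling: UV = (1.0, 1.0), offset: UV = (0.0, 0.0)) -> UV:
    """Hypothetical stand-in for a shared tiling node: scale, shift, then wrap into [0, 1)."""
    u = uv[0] * tiling[0] + offset[0]
    v = uv[1] * tiling[1] + offset[1]
    # Keep only the fractional part so the image repeats instead of stretching.
    return (u - math.floor(u), v - math.floor(v))


# One shared setting feeding every (hypothetical) texture instance, so the
# color, roughness, and normal maps all sample the same spot.
shared = {"tiling": (4.0, 4.0), "offset": (0.1, 0.0)}
uv = (0.3, 0.6)
lookups = {name: surface_tiling(uv, **shared) for name in ("color", "roughness", "normal")}
print(lookups)
```

If each map had its own tiling values instead, a typo in one of them would be enough to slide the normal map out of register with the color map, which is presumably why the Uber shader routes everything through one node.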

From there, most of the texture instance nodes plug into the PBR Metallicity Base node and combine to create the shader, as outlined in my previous posts. But unlike Blender's Principled BSDF, the PBR Metallicity Base doesn't seem to have an alpha channel. Instead, it needs to be connected to an Uber Add Geometry node, which apparently allows you to bundle a shader with transparency, additional bump, and displacement data from other texture maps. This is where the Rounded Corners option, which lets you fake beveling, is applied to the shader.

The shader data then travels from the Uber Add Geometry node to the Uber Add Volume node, which applies the SubSurface Scattering information, and finally it reaches its destination, the MDL Surface node, where it's transformed from a chaotic mess of nodes and a lurid rainbow of connector wires into beautiful, beautiful colors on your screen.

After this deep dive, it seems to me the complexity of the Shader Mixer is mainly due to the fact that so much indirection is required to expose everything via the User Parameters node. Most of the values aren't even used; they're just taking up space on the off-chance the user would like to adjust them from the surfaces tab. And the fact that they all need to be connected to one Surface Tiling node makes it seem more byzantine than it actually is. If you implemented a shader directly in the shader editor without the User Parameters node, ignored all the nodes you didn't need, and didn't need to tile it (i.e. it's UV mapped), I think the shader editor would be much easier to wrap your head around.

Sorry for the mixed metaphors, but I've been tracing node connectors all morning. I'm a bit dizzy.

The direct link to this document returns File Not Found. Not sure if any of these are what you wanted us to see, but they are interesting.

I found this pdf intro to MDL that defines terms.

https://developer.download.nvidia.com/video/gputechconf/gtc/2019/presentation/s9908-real-time-ray-tracing-with-mdl-materials-v2.pdf

This page provides a technical introduction with examples, but it doesn't show bricks as seen in DS.

https://raytracing-docs.nvidia.com/iray/mdl/introduction/index.html#mdl_introduction#background

This page has links to the MDL handbook in pdf format.

https://mdlhandbook.com/

Thanks, I changed the PDF to an HTML version of the same document.

Excellent!

It's still not super useful, because it doesn't explain anything about Daz's implementation of MDL, but as far as documentation goes it's better than having none at all--which is Daz's typical approach.

If you perused the MDL documentation I linked to above, you would've seen the section on elemental BSDFs. A Bidirectional Scattering Distribution Function is the technical term for an algorithm that determines how light bounces off an object. Photoreal renderers like Iray try to emulate that as closely as possible, so they have different BSDF algorithms that govern dull surfaces, glossy surfaces, transparent surfaces, etc. Under the Functions -> MDL -> Default Modules -> df header, there are a bunch of BSDF nodes, many of which correspond to the MDL documentation. However, all of them output "BF" data, and I had no clue how to convert that into the "MT" data the MDL Surface expects.
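To make "an algorithm that determines how light bounces" a bit more concrete, here's the simplest BSDF there is, the ideal diffuse (Lambertian) one, which returns albedo divided by pi for every pair of directions. The Monte Carlo check is only there to show that, integrated over the hemisphere, it reflects exactly the albedo's worth of light. This is textbook math, not a peek inside Iray's df nodes.

```python
import math
import random


def lambert_bsdf(albedo: float) -> float:
    """Ideal diffuse BSDF: the same value, albedo / pi, for every pair of directions."""
    return albedo / math.pi


def reflected_fraction(bsdf_value: float, samples: int = 200_000, seed: int = 1) -> float:
    """Monte Carlo estimate of the integral of f * cos(theta) over the hemisphere.

    Directions are drawn uniformly over the hemisphere (pdf = 1 / (2*pi)); for that
    distribution cos(theta) is uniform on [0, 1], so only cos(theta) is sampled.
    """
    rng = random.Random(seed)
    pdf = 1.0 / (2.0 * math.pi)
    total = 0.0
    for _ in range(samples):
        cos_theta = rng.random()
        total += bsdf_value * cos_theta / pdf
    return total / samples


# Should come out very close to the albedo: a Lambertian surface reflects
# exactly that fraction of the incoming light.
print(reflected_fraction(lambert_bsdf(0.8)))
```

Glossy, transparent, and other BSDFs differ in how that value depends on the incoming and outgoing directions; the "BF" socket is passing around a function like this rather than a single color.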

But thanks to this post by @soup-sammich I figured it out.

You take the BSDF node (a scattering function) and plug it into the "Scattering" slot of a Material Surface node (Functions -> MDL -> Built Ins -> Types), then plug the "ST" data--which stands for "struct", I think--into the "Surface" socket of a "Material - Structs" node (Functions -> MDL -> Materials).

Alternatively, you can create a "Material - Fields" node (Functions -> MDL -> Materials) and plug the BSDF directly into the "Surface Scattering" socket. The difference seems to be largely organizational: the first way, you define the scattering and shading data in a separate node, while the second way you just plug it all directly into the material node.
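Here's how I picture the two routes, written as Python dataclasses. The class and function names are stand-ins for the BF, ST, and MT socket types, not a real API; in actual MDL source the same thing is written roughly as `material(surface: material_surface(scattering: df::diffuse_reflection_bsdf()))`.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for the three socket types; not a real Daz or MDL API.


@dataclass(frozen=True)
class Bsdf:              # "BF": a scattering function (Default Modules -> df)
    name: str


@dataclass(frozen=True)
class MaterialSurface:   # "ST": the struct the Material Surface node outputs
    scattering: Bsdf


@dataclass(frozen=True)
class Material:          # "MT": what the MDL Surface node accepts
    surface: MaterialSurface


def material_structs(surface: MaterialSurface) -> Material:
    """Route 1: build the surface struct first, then wrap it (Material - Structs)."""
    return Material(surface=surface)


def material_fields(surface_scattering: Bsdf) -> Material:
    """Route 2: pass the BSDF straight in and let the node build the struct (Material - Fields)."""
    return Material(surface=MaterialSurface(scattering=surface_scattering))


diffuse = Bsdf("diffuse_reflection_bsdf")
via_structs = material_structs(MaterialSurface(scattering=diffuse))
via_fields = material_fields(diffuse)
print(via_structs == via_fields)
```

Both routes end up describing the same material, which fits the observation that the difference is mostly organizational.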

Based on this, I've deduced the Materials section has three tiers:

You need at least one of these material nodes connected into your MDL Surface for it to do anything. I assume there's a way to combine them a la Blender's Mix Shader, but I haven't found it yet.

It doesn't look like the MDL specs define any sort of principled or uber shader. They seem to mainly deal with the mid-tier materials, defining your own BSDFs by routing them into a Material - Structs or Material - Fields node. It appears the Uber shader is a custom Daz3D creation, which means it can't be exported for cross-application functionality unless you can break it down into its MDL components.

Great information here. Thanks for posting.

From the official MDL specs by Nvidia, page 73.

As I suspected, the Material - Structs and Material - Fields nodes are direct implementations of MDL functionality. So are the four nodes available under Functions -> MDL -> Built Ins -> Types: Material Surface, Material Emission, Material Geometry, and Material Volume. They're all detailed a few pages later, with the same parameters they have in the Shader Mixer. So it seems like these nodes are just wrappers around the structs Iray actually uses when rendering.

On page 93, it outlines MDL's Standard Modules. Four of them (df, math, state, and tex) have headers in the Shader Mixer as well. The fifth, base, doesn't seem to have an equivalent. I searched the specs for some of the nodes in the Shader Mixer, and none came up. Still, this is more documentation than Daz has ever given us.

No problem. Writing it all down helps me keep track of it and remember it too.

I found the documentation of the base module. It's here: https://raytracing-docs.nvidia.com/mdl/base_module/index.html#base#

Despite my earlier complaints about the User Parameters node leading to bloated shaders, I've since discovered it's actually quite useful.

Mainly because the alternative is creating dozens of Direct Value nodes.

Unlike Blender's Principled BSDF, you can't set values on the material directly. They must be fed in from somewhere else, and the User Parameters node is marginally better than filling your shader with one-off float nodes. To use it, just plug an arbitrary datatype connector into the "(V)ariant" socket and a slider will automatically be created.

Here's a simple shader to turn a cube primitive into volumetric fog. The Volume Scattering slider controls how thick it is, and the IOR slider softens the edges so it doesn't look like a glass cube.

Godrays.

Tonemapper's set to a Shutter Speed of 1, an f-Stop of 8, and an ISO of 800. Render Settings are set to "Scene Only", with a single yellowish Distant Light outside at 200,000 lumens. The fog shader was applied to a single primitive cube scaled up so it envelops the entire attic room.
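A rough way to think about the Volume Scattering slider in the fog shader is the Beer-Lambert law: the fraction of light that makes it through the fog falls off exponentially with density times distance. That the slider behaves like a density coefficient is my assumption, and the numbers below are unitless examples, not Daz values.

```python
import math


def transmittance(density: float, distance: float) -> float:
    """Beer-Lambert law: fraction of light surviving a straight path through a uniform medium.

    Ignores light scattered back into the path, so it only captures how fast fog "thickens".
    """
    return math.exp(-density * distance)


# Made-up densities over a 10-unit path: small changes in density matter a lot.
for density in (0.01, 0.05, 0.2):
    print(density, round(transmittance(density, 10.0), 3))
```

The exponential is also why the size of the cube matters so much: doubling the distance light travels through the fog squares the surviving fraction.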

I gave Nvidia's vMaterials library a go. It's a massive MDL shader library advertised as having over 2,000 shaders. I haven't looked too closely at the license, so I don't know if you can actually use it in your renders or if it's just to compare settings between different renderers, but I was mainly interested in the technical side of things so for my purposes it didn't matter.

EDIT: Per an Nvidia mod in this thread (vMaterials 1.6 released - Advanced Graphics / vMaterials - NVIDIA Developer Forums), "The MDL catalog is provided free of charge and derived work may be re-distributed for free if it follows the license." So I assume we're allowed to use it in our renders.

Using MDL shaders requires a bit of setup, though.

- per Richard Haseltine

Once you install vMaterials, you need to find the folder (mine, for some baffling reason, defaulted to Documents) and set that as the content directory, per Richard's instructions. Then you can either drag and drop an MDL file into the Shader Mixer, or make a blank Custom MDL node and put the path to the file (relative to the content directory's root) into the MDL Callable field. The MDL Callable field also requires (I assume) that you append the pound sign and the name of the material in the file you want to use. The file is just plain text; you can open it in Notepad. In my case, it was "export material carpet_circle_brown". After that was a list of variables the Custom MDL node creates when a file is loaded, followed by a gigantic constructor for something called a "flex material", which is probably how the material is actually created inside Iray.

The Custom MDL node acts just like a PBR Metallicity Base node, so you can plug it right into the MDL Surface socket.
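Since the .mdl file is plain text, finding the exported material name can be scripted. This sketch pulls every `export material NAME` out of a file and glues it to a relative path with "#", following the guess above about the MDL Callable format. The sample source and path are made up, and the callable format itself is unconfirmed.

```python
import re
from typing import List

# Matches declarations such as "export material carpet_circle_brown(".
EXPORT_RE = re.compile(r"\bexport\s+material\s+([A-Za-z_]\w*)")


def exported_materials(mdl_source: str) -> List[str]:
    """Return the names of all materials an .mdl file exports."""
    return EXPORT_RE.findall(mdl_source)


def mdl_callable(relative_path: str, material: str) -> str:
    """Hypothetical: path relative to the content directory, '#', then the material name."""
    return relative_path.replace("\\", "/") + "#" + material


# Made-up fragment and path for illustration; not copied from vMaterials.
sample = """
mdl 1.5;
export material carpet_circle_brown(
    float brightness = 1.0
) = material();
"""
for name in exported_materials(sample):
    print(mdl_callable(r"vMaterials\Carpet\carpet_circle.mdl", name))
```

In practice you'd read the real file with `open(path).read()` and pass that string in instead of the sample.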

Another forum user asked how to create a scene where the camera is above and below the waterline at the same time.

I took a look through the vMaterials library and figured I'd share what I came up with.

This is using "indoor_pool.mdl". The "Clarity" parameter seems to control the absorption, like Blender's Volume Absorption node.

For an actual render, you'd probably want to make the cube much bigger so you can't see the backfaces. You'd also probably want to round off the hard edges, especially since you can see the backfacing edges right through the cube, but I haven't figured out how to do that. The functionality is there (it's part of the Uber shader), I just need to work out how to implement it.

More experiments with vMaterials water.

The ripples are pretty much the same size as the cube in my last post, since they're mapped to the shape of the cube. They look stretched out and pixelated, but they're just an ordinary bump map stored in the vMaterials folder; ideally, you'd use the "Scale" socket to make it bigger or smaller, but I haven't figured that part out yet. I tried to add some fog too, but working out the relationship between the size of the cube and the various settings is too much for me this late in the day.

Fortunately, opening the MDL file in a text editor reveals all the variables are explained via annotations. Daz Studio just didn't implement that for some reason.
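Reading those annotations can also be scripted. This is a rough, regex-based sketch that maps each parameter to its `anno::description` text; it handles simple declarations like the made-up fragment below, but a real MDL parser would be needed for anything fancier (nested brackets, multi-line defaults).

```python
import re
from typing import Dict

# A parameter name followed by "= default [[ ...annotations... ]]" (simple cases only).
PARAM_RE = re.compile(r"(\w+)\s*=\s*[^\[\n]*\[\[(.*?)\]\]", re.DOTALL)
DESC_RE = re.compile(r'anno::description\(\s*"((?:[^"\\]|\\.)*)"\s*\)')


def parameter_descriptions(mdl_source: str) -> Dict[str, str]:
    """Map each annotated parameter to its anno::description text, if it has one."""
    found = {}
    for name, annotations in PARAM_RE.findall(mdl_source):
        match = DESC_RE.search(annotations)
        if match:
            found[name] = match.group(1)
    return found


# Made-up fragment in the style of an annotated .mdl file.
sample = """
export material water_example(
    float clarity = 0.5 [[
        anno::display_name("Clarity"),
        anno::description("Higher values make the water clearer."),
        anno::hard_range(0.0, 1.0)
    ]],
    float scale = 1.0 [[
        anno::description("Size of the ripple bump map.")
    ]]
) = material();
"""
for name, text in parameter_descriptions(sample).items():
    print(f"{name}: {text}")
```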

I will say there seems to be a worrying lack of range in these variables. Apparently Iray only uses 32-bit float variables instead of 64-bit doubles, which makes it faster but less precise. That leads to wonderful problems like the black eyes bug if your characters are too far from world origin.
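To put numbers on the 32-bit issue, this sketch measures the gap between neighbouring 32-bit floats at increasing distances from the origin, assuming scene units of centimeters (Daz's default). It only illustrates precision loss in general; it doesn't reproduce the black eyes bug itself.

```python
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def float32_step(x: float) -> float:
    """Distance from x (as a positive float32) to the next float32 above it."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    next_up = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return next_up - to_float32(x)


# Distances from world origin, assuming scene units are centimeters.
for distance_cm in (1.0, 1_000.0, 100_000.0, 10_000_000.0):
    print(f"{distance_cm:>14,.0f} cm: smallest step {float32_step(distance_cm):.3g} cm")
```

At 100 km out (10,000,000 cm), consecutive float32 positions are a full centimeter apart, while near the origin the step is about a ten-millionth of a centimeter.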

I don't have anything pertinent to add, but I wanted to pipe up and say I read threads like this all the time. They are useful and interesting to read. The forums need a like button, so you can see how many people actually read these but feel like too much of a dumbass to attempt to contribute lol.

Don't worry about it. Just give it a go and if you find something interesting, post it.

After studying the Uber shader again, I figured out how to fake beveling with Functions -> MDL -> Default Modules -> state -> Rounded Corner Normal. It works much the same as Blender's own fake beveling node. Of course it's no match for genuine beveling in a modelling program, but in a pinch it can give pretty good results. All of its values are exposed in the Uber shader already, so there's no need to add it to something you bought from the store.

I'm still not sure how to mix it with a Custom MDL material yet. The post by soup-sammich mentioned the Functions -> MDL -> Material Editors -> Override Material Field node, so I tried applying it to the vMaterials water shader from my previous post. It worked, but I'm not sure if this is the best way to do it.

one thing maybe worth mentioning: the Blender thing MDL itself has most in common with isn't so much the Cycles nodes as it is OSL. The "L" in both stands for the same thing: language. That's why the documentation for MDL doesn't really match anything you see in the Shader Mixer, as it's more the specification for coding shaders, not connecting bricks.

all the bricks are created by Daz: bits of code written to the MDL specification, exactly the same as if you pulled in a custom MDL, just already loaded into the program and organized in a library (though I couldn't tell you the logic of said organization)

Fun? fact: the Iray Uber shader has some elements that are coded in a way that bypasses the Shader Mixer, which is why importing it will break it a bit: anything that was hidden won't be loaded. That also means that, as big as the Uber shader looks in the Shader Mixer, it's actually muuuch larger, given that you have "metallicity/roughness", "glossiness", and "weighted" modes and can only load one of those at a time...

this is pretty fundamentally different from Cycles, where the bricks themselves are the lowest level short of editing the whole rendering engine

The PBR shader, incidentally, doesn't have this problem: the parameters you hide are hidden via a node in the Shader Mixer that was added after the Uber shader was made.

Yeah, that's what I figured. The Shader Mixer is a wrapper around the MDL library, and the Uber shaders have some Uber Hax to do what Daz wants. That's why I'm staying away from it for now, lol. I want to get a feel for MDL itself before I deal with the Daz-specific stuff.

Unfortunately, I couldn't tell you the logic of the organization either, which is the main problem.

also on the subject of transferring Blender knowledge to DS: as I was poking around in the Shader Mixer, I noticed that some IOR inputs had "C" rather than "F", i.e. they took a color input. "Hmmm..." I think to myself. "I know in Blender you can do fun dispersion things by combining red, blue, and green glass shaders and giving each slightly different IORs. Is this just cutting out the middleman and letting you have different IORs in the R, G, and B channels?"

It is. It is letting you do that.

I also threw on a thin film, which is what's producing the green on the edges.

That's really cool. What's the node setup look like? How are you combining the shaders?

There are a lot of BSDF nodes. They have an output labeled "BF". Now, the final output node has an "MT" input, so you have to figure out how to transform that BF output into the MT input. As far as I can tell, you first have to convert it to an "ST". I think there is one node in aaaalll of the nodes that can do this? And it's not labeled "hey, if you ever want to use a BSDF node, you will need this", or put anywhere I will ever remember. You then take that ST output, and there are 2 nodes to choose from that take it and output an MT.

In case anyone is wondering, the node names I used to go from BF to MT were "Material Surface" and "Material Structs". "Material Structs" is under Materials, which makes sense. I don't remember where "Material Surface" is and don't feel like spending 5 minutes going through every category to find it.

If the brickyard had a search bar, or you could sort by input and output type, it would immediately become 100% more usable.
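A search by type wouldn't take much. This sketch indexes a few bricks by input and output socket type, using only the node names and type codes from this thread, and does a breadth-first search for the shortest chain. The index is hand-made and tiny, not a dump of the real brickyard.

```python
from collections import deque
from typing import List, Optional, Tuple

Brick = Tuple[str, str, str]  # (node name, input type, output type)

# Hand-made index using only node names and socket codes from this thread.
BRICKS: List[Brick] = [
    ("Material Surface", "BF", "ST"),
    ("Material - Structs", "ST", "MT"),
    ("Material - Fields", "BF", "MT"),
]


def find_chain(src: str, dst: str, bricks: List[Brick] = BRICKS) -> Optional[List[str]]:
    """Breadth-first search for the shortest run of bricks converting src into dst."""
    queue = deque([(src, [])])
    seen = {src}
    while queue:
        current, path = queue.popleft()
        if current == dst:
            return path
        for name, in_type, out_type in bricks:
            if in_type == current and out_type not in seen:
                seen.add(out_type)
                queue.append((out_type, path + [name]))
    return None


print(find_chain("BF", "MT"))
without_fields = [b for b in BRICKS if b[0] != "Material - Fields"]
print(find_chain("BF", "MT", without_fields))
```

It returns just Material - Fields, since per the earlier post that node takes a BSDF directly; drop that entry from the index and the same search finds the two-step Material Surface then Material - Structs route.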

Here you go

There's no mapping currently, which makes it simpler.

also note the fun color values for the IOR: it's not really a color so much as 3 values. Real colors are incorrect, as they lead to an IOR of less than 1.

also I added an HDR and funner model

edit:

bonus: as you spread the IOR, it gets real funky. Probably not all that physically accurate at this point, but super nifty.
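For anyone wanting the physics behind the trick: with a color-typed IOR, each channel presumably gets its own value in Snell's law, so red, green, and blue bend by slightly different amounts, which is dispersion. This sketch assumes exactly that per-channel behaviour (unconfirmed) and rejects values below 1, the trap with ordinary 0-1 colors mentioned above. The 1.50/1.52/1.54 IORs are made-up example values.

```python
import math
from typing import Dict, Tuple


def refraction_angle(incident_deg: float, ior: float, outside_ior: float = 1.0) -> float:
    """Snell's law, n1 * sin(t1) = n2 * sin(t2), for light entering the material."""
    s = outside_ior * math.sin(math.radians(incident_deg)) / ior
    return math.degrees(math.asin(s))


def per_channel_angles(incident_deg: float, ior_rgb: Tuple[float, float, float]) -> Dict[str, float]:
    """Treat a color-typed IOR as three separate IORs, one per channel (assumed behaviour)."""
    for n in ior_rgb:
        if n < 1.0:
            raise ValueError(f"IOR {n} is below 1, as happens with ordinary 0-1 color values")
    return {channel: refraction_angle(incident_deg, n) for channel, n in zip("RGB", ior_rgb)}


# Made-up glass-like values: red bends least and blue most, so white light fans out.
for channel, angle in per_channel_angles(45.0, (1.50, 1.52, 1.54)).items():
    print(channel, round(angle, 2))
```

Spreading the three values further apart widens the gap between the per-channel angles, which matches the "real funky" look as the IOR spread increases.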