Licensing Agreement | Terms of Service | Privacy Policy | EULA

© 2025 Daz Productions Inc. All Rights Reserved.

Comments

Good example, Wolf!

These tools do bring a lot of the animation features of a full modeling program. It's good you mentioned them, as they may be the best solution for many people here considering animation. For anyone considering this, it helps to know that the functions and methods in these tools closely follow those one would use in other 3D packages (dope sheets, curve editors, timelines, etc.), so nothing learned in one tool is wasted; the basic concepts apply across almost all modern animation tools.

The issue isn't so much the tool one chooses as recognizing that animation is still challenging, both in its resource usage and in the learning curve. As to the difference between using these tools vs. any external method (Maya, Blender, etc.), it's a combination of many factors that ends up deciding what will work. Having tools that work inside DAZ without having to go out at all is a big plus for anyone for whom that is the main issue, and for that reason it should have been mentioned as an option. For my part, I simply forgot to mention it.

There is one thing, though, that one can't get in DAZ as of now but can get by importing into Blender (or, for that matter, a game engine): near-real-time rendering (Eevee, not Cycles). Of course, if one throws complex things like hair, particle systems, physics, etc. at the render, it's going to bog down to not much faster than a ray-tracing engine (Cycles, Iray, etc.). Also, Eevee is brand new and has its limitations. I do agree that the add-ons are well worth considering.

One thing I enjoyed playing with a while ago, and still haven't seen in other environments, is using Puppeteer for animation. It's an interesting and unique interface. It's been a long time since I've played with it, so I don't know if it still works.

When one is building character motion, real-time rendering is not required, only real-time viewport feedback. Again, this is an area where intelligent use of the tools can help, even in Daz Studio.

I would imagine that even today many people pick their favorite Genesis figure, wearing their favorite outfit (consisting of several conformers), try to animate all of it, and become frustrated and give up when the Daz viewport bogs down.

This is why one needs to use the lowest-resolution proxy figure during motion building, save the major body motion as an animated pose file, apply it to the hi-res, fully dressed figure after tweaking, and then layer on the lip sync, finger animation, etc.
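The proxy workflow can be sketched in plain Python to show why it pays off: the heavy figure only enters at the end, when the finished body motion is copied over by joint name and the detail layers are added on top. This is a minimal sketch under stated assumptions, not DAZ Studio's API or file format. It assumes animation is stored as a dictionary mapping joint names to (frame, rotation in degrees) keys on a single axis, and that the proxy and hi-res figure share joint names, which is presumably what rigging the proxy with the Transfer Utility buys you. `transfer_animation`, `layer_animation`, and the joint names are hypothetical, chosen for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical animation format: joint name -> list of (frame, rotation in degrees).
# Real pose/animation files store full transforms per axis; one float keeps the sketch short.
Keys = List[Tuple[int, float]]
Animation = Dict[str, Keys]


def transfer_animation(proxy_anim: Animation, target_joints: List[str]) -> Animation:
    """Copy the proxy's keys onto hi-res joints that have the same name.

    Joints the hi-res figure lacks are dropped; hi-res joints the proxy never
    animated (fingers, face) are left free for the detail layers.
    """
    targets = set(target_joints)
    return {joint: list(keys) for joint, keys in proxy_anim.items() if joint in targets}


def layer_animation(base: Animation, layer: Animation) -> Animation:
    """Add a detail layer (finger curls, lip sync) on top of the base body motion.

    A layer key is added to the base key at the same frame; joints or frames the
    base does not key simply take the layer's value. A real additive layer would
    add to the interpolated base value; this sketch does not interpolate.
    """
    result: Animation = {joint: list(keys) for joint, keys in base.items()}
    for joint, keys in layer.items():
        merged = dict(result.get(joint, []))
        for frame, value in keys:
            merged[frame] = merged.get(frame, 0.0) + value
        result[joint] = sorted(merged.items())
    return result


# Usage: block the body motion on the light proxy, then finish on the hi-res figure.
proxy_motion = {"hip": [(0, 0.0), (30, 10.0)], "lHand": [(0, 0.0)], "proxyOnly": [(0, 1.0)]}
hires_joints = ["hip", "lHand", "lIndex1", "head"]
body = transfer_animation(proxy_motion, hires_joints)
fingers = {"lIndex1": [(15, 40.0)], "lHand": [(0, 5.0)]}
final = layer_animation(body, fingers)
print(final)
```

Keeping the body pass and the detail passes separate is what lets the expensive figure stay out of the viewport until the blocking is done.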

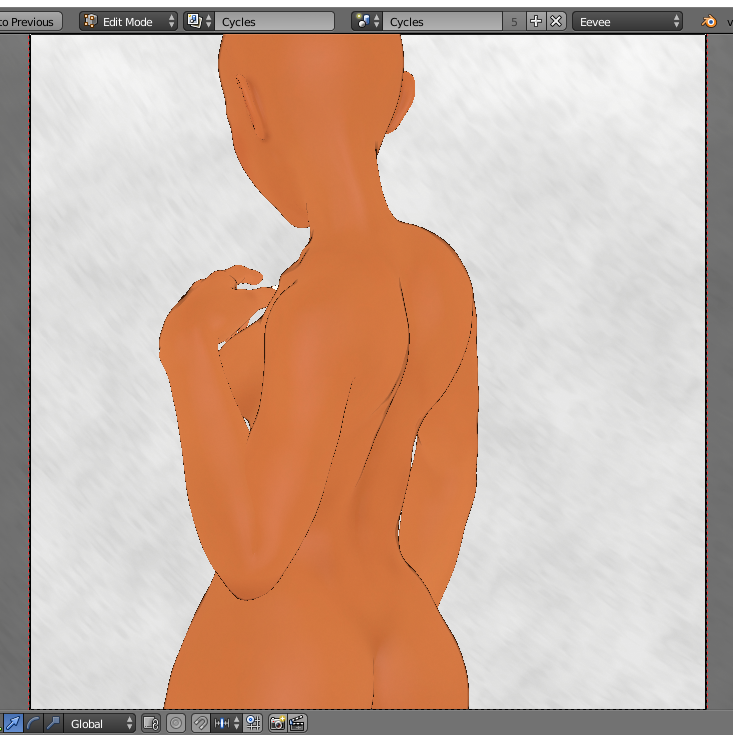

I created my own super-low-res proxy animation figure for G2, rigged with the Transfer Utility. It is made from a copy of my content-development base mesh, which I have for every Genesis figure including Genesis 8 (see pic). I recommend that every Daz Studio animator build one for use as a real-time motion-building dummy in Daz Studio.

Also, yes, Puppeteer still works; I use it in my pipeline as well.

The thing is, people who have been doing animation for a while have come to terms with the concept of offline rendering and the associated costs. For many potential new artists, they won't join in until they can have something closer to real time. It's not a +/- thing, it's just where the comfort level is/will be for many artists in cost vs return. The same really goes for the learning curve for all of the different components required. When the level of animation crosses a certain minimum for a given artist at a given amount of effort to get it, that's when they will jump in and be able to get creative in the media. In the end, for me, it's about getting the tools, learning and effort required into the hands of more artists so they can create and feel fulfilled. But this is a moving target. Luckily, it's moving in the right direction. I would consider doing aniblocks for the DAZ store to help out artists but I'm afraid the render time is a killer for too many at the moment.

Well, in light of that, Marble, yes, it can be. Considering that Rigify and the new Principled BSDF shader make it possible to transfer the assets with more fidelity for less effort, if you are interested in playing with it, it can be well worth the time. Point is, try it and see; you have nothing to lose. What it costs you in time you gain in experience. :)

@wolf359 Forgot to say thank you for posting not only well thought out posts but including animations that demonstrated the information you were presenting. I always appreciate when people take the time to do that. :)

Personally I'd just skip the bounce and say she was wearing a sports bra... Or if she's just walking, ya know, pretty much any bra. ;)

@marble Well there is this (semi nsfw, but not really...)

Now we are talking ;)

Do you think DAZ would include tutorials like that when they release some kind of physics engine?

There are a good number of people, PAs and otherwise, who release tutorials for DAZ Studio, so I'm thinking someone would.

You are welcome, sir.

For those interested, Poser has had soft-body dynamics since Poser Pro 2014. They frequently have sales, and I believe there is a free utility to get G3-G8 into Poser, where one could use the soft-body tools for whatever purpose.

You convinced me to take a look at Blender 2.8, Joe. Color me impressed with Eevee's Cycles fallback.

That, right there, is my cycles toonshader, rendered by Eevee.

Rendered instantly by Eevee. Add some kind of anti-aliasing, and it would be about perfect.

Not correct, mind. It's not reacting to light, at all. But as a realtime preview of WIP, it's pretty incredible. For a CPU render, running in real time, with minimal UI delay.

Let me repeat. This is being done by the CPU. It's a freaking Viewport display.

Hell, this could be a whole new option for doing NPR flat colors, sans the jaggies. And since the jaggies are an artifact of the contour generation, all it would take, in theory, is turning the lines off.

Unfortunately, Eevee doesn't yet do transparency, and the Cycles fallback doesn't talk to native Eevee (it results in something like a Holdout BSDF if you try). But man, that's sweet for beta software, right there.

Since the topic of animation has been front and center some here might find Pixar in a Box interesting.

I've been meaning to do that, even though I don't animate. It looks like a good overview of sequential storytelling.

Blender is all about the shortcut keys. Get them down, and you'll have a much easier time.

If you're going through the interface, yeah, it can be a heck of a mountain to climb to get used to everything. Funny thing is, it's far better now than it was back before 2.0. The older versions, before it went open source, were even weirder to work with.

I forgot to comment on this. This is an excellent example. Anyone who's done glass with a roughness value will know that roughness adds a lot of rendering time (sometimes a multiplicative amount).

For anyone who hasn't yet seen Blender's New "Ultimate" Shader: The Principled BSDF, coming in Blender 2.79 (available now in the beta build), Andrew Price has done an excellent quick tutorial on how and why it's revolutionary. For most shading tasks it greatly simplifies what's required; one no longer really has to learn much about shaders or nodes to use it. What it doesn't do is make nodes totally obsolete (yet, at least): cheats like the one J Cade posted above still require specialized nodes like the Light Path node and an understanding of somewhat involved node networks, but it looks like this will mostly be for specific use cases. Combine this with Filmic (which Andrew Price also has some videos on, and which is also coming in 2.79) and we have quite a step up in quality for less effort than ever before in Blender.

Something to think about in all of this discussion about shaders, re-rigging in Blender, etc.: as has been hinted at, all of this can now be done to some extent in DAZ Studio itself. Not only that, but shaders available at the store for a very good price here would still take collecting, putting together, etc. in another environment like Blender (or any outside 3D tool). One doesn't go to an outside tool like Blender to save money, as the cost here at DAZ is so low compared to the hours required in another tool that it doesn't make sense. The only reason to go to an outside tool is for more control. And that isn't free. Even if one isn't paying for the software, one is paying in time, and at some point many people buy paid add-ons etc. for Blender, because using any tool to do advanced things makes one really appreciate the cost of one's time. Also, using a tool like Blender doesn't preclude using DAZ tools. For most people who have DAZ and Cycles, I'm guessing both tools are used depending on the situation. It's not an either/or, as some look at it, but rather expanded options. If one is looking at it as an either/or, then the best solution would most likely be to stay inside the DAZ world; but if one decides to expand one's horizons, there are some gems out there. For instance, if one learns to re-rig, one can come up with an advanced rig to animate facial features with a draggable interface, make complicated animations easier, or have a character move in a stylized (cartoon) way (Blender's Bendy Bones). That type of thing takes a considerable amount of time and study, but if one likes doing it, it can be quite rewarding. If not, wait a few years and the whole process will be simplified, and probably with Victoria 10 there will be that shiny new dream rig. :)

Has anyone seen UV unwrapping gets an overhaul from the Blender Foundation? I know people really love taking the time to manually UV unwrap their model, bit by bit and this could cut down on the time one is able to enjoy this pastime, but it could be a pretty big step forward. One thing of note though is how the model being used has some pretty uniform triangulation. I would like to see it used on a mesh with varying size polygons. I haven't seen anything like this in any other 3D tool so far.

I've seen some discussion of how uber shaders, especially well-done ones like Blender's new Principled BSDF shader, pretty much make understanding nodes obsolete. But besides custom situations like the one mentioned previously, there are times when we want to combine textures into a single input (similar to what DAZ's LIE system does), and for that we still end up going back to setting up node trees. Andrew Price demonstrates that extremely well in his tutorial How to use Surface Imperfections in Blender 2.79. Note how a much more complicated node tree is greatly simplified by feeding into the Principled BSDF node, so that the only extra node work is easy to understand and implement, with the heavy lifting done by the PBSDF shader. Btw, while there, check out the node group for combining normal maps. (Download link in the description of the video.) :)
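Combining two normal maps into the single Normal input is a good example of a job the uber shader doesn't do for you. I haven't looked inside the node group from that video, so I can't say which method it uses; the sketch below swaps in one common, well-documented approach, "whiteout" blending of tangent-space normals, applied to a single pixel in plain Python. Simply averaging the two colors would halve the tilt of each map, flattening both details, which is why blends like this add the slopes instead. Function names are hypothetical, and pixel values are assumed to be raw (non-color) data in the 0..1 range.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def decode(rgb: Vec3) -> Vec3:
    """Map a normal-map color in [0, 1] to a tangent-space vector in [-1, 1]."""
    r, g, b = rgb
    return (2.0 * r - 1.0, 2.0 * g - 1.0, 2.0 * b - 1.0)


def encode(n: Vec3) -> Vec3:
    """Map a tangent-space vector in [-1, 1] back to a color in [0, 1]."""
    x, y, z = n
    return (0.5 * x + 0.5, 0.5 * y + 0.5, 0.5 * z + 0.5)


def normalize(v: Vec3) -> Vec3:
    x, y, z = v
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)


def whiteout_blend(base_rgb: Vec3, detail_rgb: Vec3) -> Vec3:
    """Combine two normal-map pixels: add the slopes (x, y), multiply the heights (z)."""
    x1, y1, z1 = decode(base_rgb)
    x2, y2, z2 = decode(detail_rgb)
    return encode(normalize((x1 + x2, y1 + y2, z1 * z2)))


flat = (0.5, 0.5, 1.0)  # straight "up" normal: no surface detail
bump = (0.8, 0.5, 0.9)  # normal tilted toward +x, e.g. the edge of a scratch
print(whiteout_blend(flat, flat))  # flat + flat stays flat
print(whiteout_blend(flat, bump))  # a flat base leaves the detail unchanged
print(whiteout_blend(bump, bump))  # stacked detail tilts further
```

In a node tree the same arithmetic would be spread over separate math nodes, which is exactly the kind of small, well-contained network that still sits in front of the Principled BSDF.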

I watched that Blender video on the new UV mapping, and honestly it has me scratching my head...

It seems to be directed at those who don't want to manually decide how the map should be laid out. As a result you get a map that may or may not match the image/texture/whatever you're using to map the object. And almost by definition the result will be a trade-off, not nearly as good as doing it yourself. Better than the previous methods, but still not the best. I mean, his example was a moose-looking thing, and he made a "head-on" UV map, which results in the sides of the face being totally scrunched and low detail.

Heck, the time it takes to run a single edge loop for a seam to make the map far more useful seems like a small price to pay for the benefit.

Anyway...
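The "scrunched" sides can be put in numbers with a little geometry. Assuming a pure orthographic front projection (UV = the vertex's x and y, depth dropped), a triangle tilted away from the viewing direction by an angle θ keeps only cos θ of its area in UV space, so texture density falls off toward the sides of a face and vanishes on surfaces parallel to the view. This is a simplified model of a "head-on" projection, not a reproduction of the unwrap in the video; the helper names are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def area_3d(p0: Vec3, p1: Vec3, p2: Vec3) -> float:
    """Triangle area: half the length of the cross product of two edges."""
    ux, uy, uz = (p1[i] - p0[i] for i in range(3))
    vx, vy, vz = (p2[i] - p0[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def area_2d(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Triangle area in UV space (absolute value of half the 2D cross product)."""
    return 0.5 * abs((b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]))


def front_project(p: Vec3) -> Vec2:
    """Hypothetical 'head-on' planar projection: keep x and y, drop depth z."""
    return (p[0], p[1])


def tilted_triangle(angle_deg: float) -> Tuple[Vec3, Vec3, Vec3]:
    """A right triangle (area 0.5) rotated about the vertical y axis by angle_deg."""
    t = math.radians(angle_deg)
    return ((0.0, 0.0, 0.0), (math.cos(t), 0.0, math.sin(t)), (0.0, 1.0, 0.0))


def uv_area_ratio(tri: Tuple[Vec3, Vec3, Vec3]) -> float:
    """UV area divided by surface area: 1.0 means no loss of texture density."""
    uv = [front_project(p) for p in tri]
    return area_2d(*uv) / area_3d(*tri)


for angle in (0, 30, 60, 80, 90):
    ratio = uv_area_ratio(tilted_triangle(angle))
    print(f"{angle:>2} degrees of tilt: UV/surface area ratio {ratio:.3f}")
```

At 80 degrees of tilt, which is roughly where the side of a face sits in a straight-on view, less than a fifth of the surface area survives into UV space.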

Hey! Easy there! You just blew out all the warning lights on my sarcasm detector.

While the majority of my UV work has been "unwrapping a mesh to fit an existing texture," the vast majority of UV mapping work goes the other way: create textures to fit an existing UV.

Given Blender's already decent UV tools, I can see one profiting from this method. Oddly, what you said about the sides of the face is exactly the opposite. While the area per unit there isn't super high, the unwrap does a really good job given the constraints. But I think you mistake the intent. The speaker is using a poorly "seamed" unwrap to prove how good SLIM really is. Also, the ability to "in-paint" areas SLIM should prefer means that if you personally wanted to give more area to the sides, you could tell SLIM to do that, and it would.

The idea is you add this technology to your existing UV unwrap workflow. That is, rather than replacing the basic unwrap step, SLIM would replace the minimize stretch command. This means you can still arrange UV islands as you think best, and then have SLIM reduce the distortion. And the results were really, really good. Me want.
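To make "reduce the distortion" concrete: for each triangle, the unwrap defines a 2×2 Jacobian J that maps the triangle's own flat plane into UV space, and a distortion energy scores how far J is from a pure rotation. SLIM-style methods minimize energies of this kind; the commonly used one is the symmetric Dirichlet energy, ‖J‖²_F + ‖J⁻¹‖²_F, which for a 2×2 matrix simplifies to ‖J‖²_F (1 + 1/det(J)²). It equals 4 for an undistorted triangle and grows without bound as a triangle is squashed or approaches a flip. The sketch below computes only this per-triangle score, the kind of value a stretch heat map colors; it is not SLIM's optimizer, it assumes UV triangles are wound counterclockwise, and the function names are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def flatten(p0: Vec3, p1: Vec3, p2: Vec3) -> Tuple[Vec2, Vec2]:
    """Edge vectors p1-p0 and p2-p0 expressed in the triangle's own 2D plane.

    The first edge becomes the local x axis, so lengths and angles are preserved.
    """
    e1 = tuple(p1[i] - p0[i] for i in range(3))
    e2 = tuple(p2[i] - p0[i] for i in range(3))
    len1 = math.sqrt(sum(c * c for c in e1))
    x2 = sum(e2[i] * e1[i] for i in range(3)) / len1  # projection of e2 onto e1
    y2 = math.sqrt(max(sum(c * c for c in e2) - x2 * x2, 0.0))
    return (len1, 0.0), (x2, y2)


def symmetric_dirichlet(tri3d: Tuple[Vec3, Vec3, Vec3], tri_uv: Tuple[Vec2, Vec2, Vec2]) -> float:
    """Per-triangle distortion: 4.0 for a rotation-only map, larger when stretched or scaled.

    Returns infinity for collapsed or flipped (clockwise) UV triangles.
    """
    (s00, s10), (s01, s11) = flatten(*tri3d)  # columns of the rest-shape matrix S
    u0, u1, u2 = tri_uv
    t00, t10 = u1[0] - u0[0], u1[1] - u0[1]  # columns of the UV-edge matrix T
    t01, t11 = u2[0] - u0[0], u2[1] - u0[1]
    det_s = s00 * s11 - s01 * s10
    # J = T * inverse(S), with inverse(S) = [[s11, -s01], [-s10, s00]] / det_s
    j00 = (t00 * s11 - t01 * s10) / det_s
    j01 = (-t00 * s01 + t01 * s00) / det_s
    j10 = (t10 * s11 - t11 * s10) / det_s
    j11 = (-t10 * s01 + t11 * s00) / det_s
    det_j = j00 * j11 - j01 * j10
    if det_j <= 0.0:
        return math.inf  # flipped or collapsed in UV space
    frob2 = j00 ** 2 + j01 ** 2 + j10 ** 2 + j11 ** 2
    return frob2 * (1.0 + 1.0 / det_j ** 2)


flat_tri = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
print(symmetric_dirichlet(flat_tri, ((0.0, 0.0), (1.0, 0.0), (0.0, 1.0))))  # exact copy
print(symmetric_dirichlet(flat_tri, ((0.0, 0.0), (0.5, 0.0), (0.0, 0.5))))  # uniformly shrunk
print(symmetric_dirichlet(flat_tri, ((0.0, 0.0), (1.0, 0.0), (0.0, 0.1))))  # squashed
print(symmetric_dirichlet(flat_tri, ((0.0, 0.0), (0.0, 1.0), (1.0, 0.0))))  # flipped
```

Note that uniform shrinking also scores above 4, since this energy measures departure from an isometry, not just shape distortion.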

What I was saying is that the actual triangles are of uniform size on the mesh, whereas most meshes have varying sizes to keep the poly count down. As to the poor seaming, it's not seamed at all. There were no seams in the mesh, which is in itself amazing. I'm guessing that one would still use seams, but perhaps not as many, and yes, the results are really good. I was more trying to rein it in a little so that people don't get overoptimistic before playing with it in real-world situations.

I have installed and uninstalled Blender about 3 times. I can't get it to do anything, even with a shotgun pointed at my screen. Wings had me designing without ever viewing a tutorial.

Singular Blues,

Yeah, you have a point... no doubt it's better than previous methods, so I can't complain. I'm just trying to see how much benefit there really is. Y'know, compared to stuff they could have spent their time on.

And personally, I tend to do a lot of manual UV mapping, so right off the bat I'm not sure of the benefit. But I suppose for those who are doing stuff like character heads/faces and have limited images to map, it's probably a big improvement. It would be interesting to see where people really benefit. I mean, a front-view photo of a human head is going to be limited on the side of the face no matter what you do.

And BTW, I'm surprised by your point that the vast majority of UV mapping is generating a texture/image to fit a UV, not the other way around. Seems strange that that's the case in a production environment. I mean, take a human character (Tom Cruise, for example). If they make a 3D stand-in, they still have to spend big bucks to get him in to take photographs and have a crew available and so on, right? But editing and tweaking UV maps is a relatively quick and easy process for a low-paid 3D artist.

I dunno, just guessing. But at least it seems that would be the case.

privatesugarplum,

I feel your pain. I've used Blender for many years, but it's painful. Especially if you jump back and forth to other 3D apps, and try to remember the keyboard shortcuts and work procedures.

I'm convinced that the technical skills needed to make awesome 3D applications are totally different from the skills needed to design really excellent user interfaces for those apps.

This is only what I've gathered in "fly on the wall" mode and from seeing any number of special FX commentaries. IANAPDA. It's unlikely anyone takes "photo" references for actors in the Tom Cruise category; these days they do 360-degree scans. My guess is they would use something similar to Blender's texture bake to project the resulting image maps onto the final model and then do manual cleanup of the textures. It's really going to depend on the FX house. Disney, for example, doesn't use anything like UVs as we know them. What they do use requires dense geometry in ways I don't understand, but I don't have to. They've got the money to run the server farms required to deal with the render-time penalties that method creates.

I'm pretty sure Weta Digital (Lord of the Rings, et al.) uses 360 scanning, but all I have there is fragments about their capture process (which starts with a 360-degree capture dome, with people acting while standing very still, and then moves to a more open mocap stage. I infer the 360 rig is for capturing the face in high detail and getting kinematics on the way the actor emotes, while the mocap is for fuller-body, gross movement. Also, based on similar fragments, I know Weta can capture a significant amount of mocap data on the regular stage, though I don't know how much they use that vs. the mocap stage. But I digress).

But the vast majority of this kind of work is not in the movie FX houses. Again, movie houses are so tech-forward about what they do that they don't all use UV mapping. I'd say the majority of UV mapping is in the games industry, and there they are largely unwrapping assets and texture painting them, so yeah, the workflow is overwhelmingly UV first, image next. Even a given photo-reference workflow tends to be mostly about capturing information to be remapped to a different UV set. Which, if you have a tool similar to FaceGen, means the mapping is automatic. FaceGen takes an arbitrary set of photo references and projects them onto an existing UV. And with the Daz figure support, it can reproject that data onto existing Daz UVs. Given that creating a UV map is clearly the kind of work you can automate, my guess is that the film production world automates the crap out of it, to the extent they can afford to. This is probably not the case for indie houses. I wouldn't hazard a guess at, say, Rocket Jump's workflow (though it's likely Freddie et al. have some behind-the-scenes stuff on YouTube. I've never watched it. I'm not sure they do much with 3D figures either. They seem more After Effects focused. Seems like they use 3D for objects more than people, but they're in the right business for it, so whatever.)

Actually, no. If you look at the heat maps generated, they are much better than typical hand-done UVs. Part of the point of this video is that it can theoretically do much better UV maps than you could do by hand without spending an unfeasible amount of time on the maps. I am interested in seeing it in action on a wide range of objects, but if the demo proves correct, then it's not a matter of saving 'a little' time but rather of doing something you flat out can't do by hand unless you want to spend a lifetime on a single character. I guess we'll see.

...