Algovincian Non-Photorealistic Rendering (NPR) 2019/2020

algovincian

Posts: 2,636

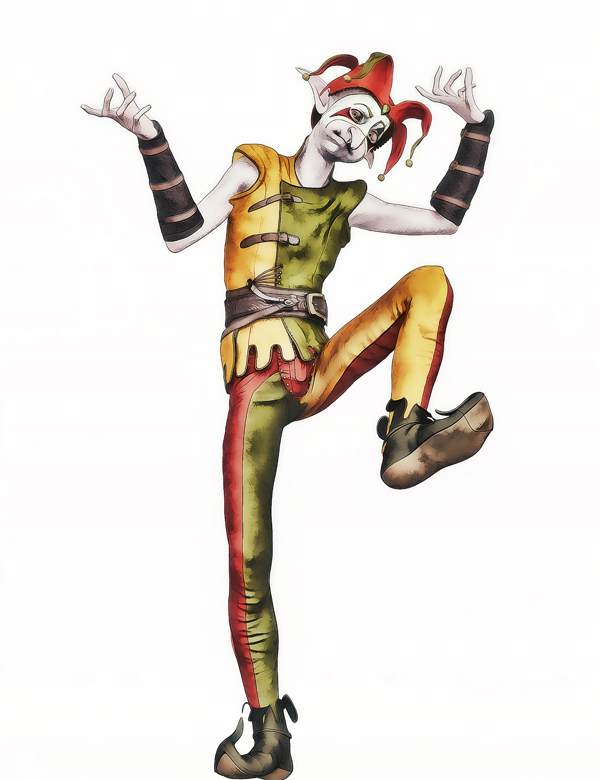

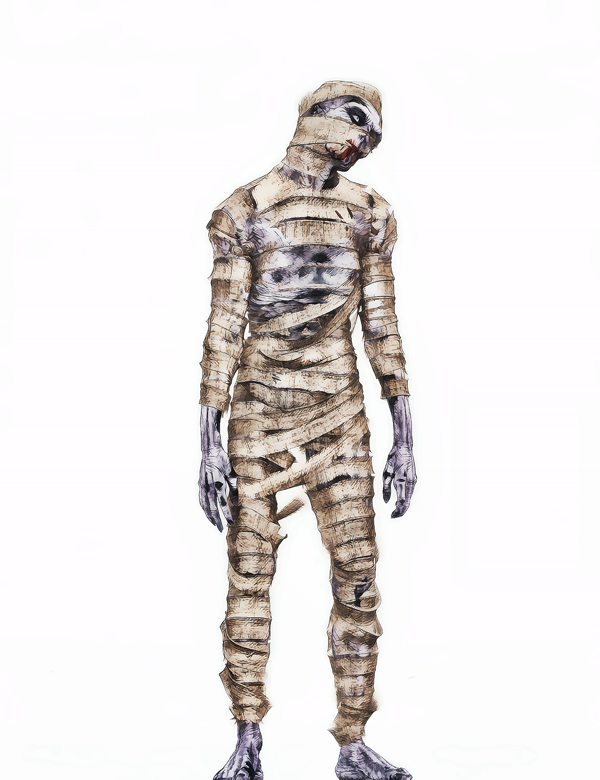

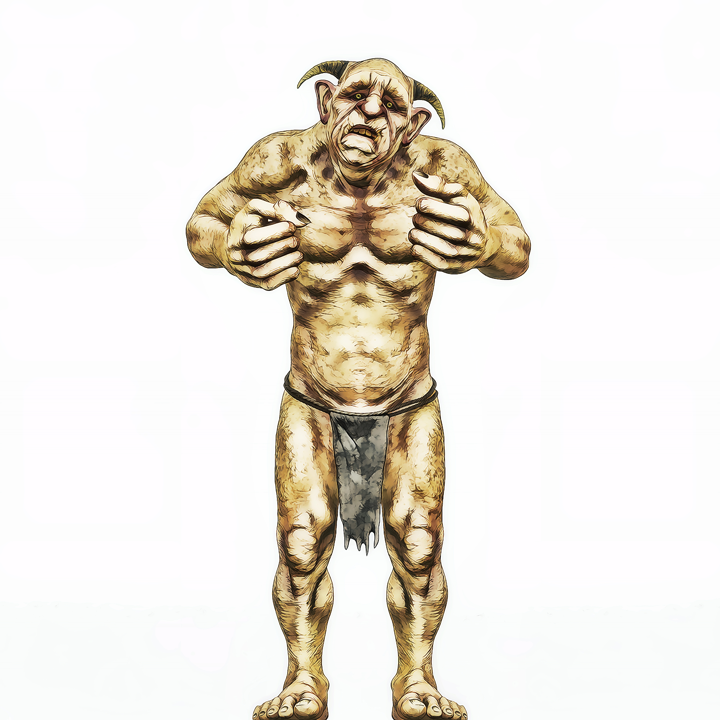

Been a while since I've had an active thread here, so figured it was time to post some images and talk a little shop. The main focus lately has been a new smoothing algorithm. Unfortunately, it adds 2-3 min to the processing time of each image, but the results allow the linework to remain prominent, and it scales well (which was another major goal). Shadows were also treated differently for this batch, and the changes seem to have helped with some problematic shading in certain areas.

Total processing time of the analysis passes is around 32 min per scene now, which includes saving out a ton of intermediate files and numerous finished styles in addition to this full color one. This speeds future development, but is a significant chunk of the processing time. Anyway, here's the full-color output from last night's batch of 13 (no editing except resizing):

After looking at these all together, while I have no glaring objections to any of the output in this batch, I do think that overall they are a touch over-saturated for my tastes - will have to look at them on devices other than the 2 monitors here and my phone.

Getting there - feels closer than ever to something finished . . .

- Greg

Comments

Your stuff always looks awesome

Reminds me of watercolor with pencil outlines style. Awesome!

Great looking NPR images.

Since I have no other options at the moment, I experiment with blending layers of different filters from Topaz Studio.

Not as great as yours, but it keeps me busy.

Thanks for taking the time to take a look and comment @Wendy_Carrara and @Vyusur - much appreciated! One of the things I struggle with is coming up with a style that satisfies a bunch of constraints, such as:

- the output looks good in both print and on digital displays

- the output scales well so it looks good on everything from a phone to a 65" 4K TV

- the algorithms work in the general case (meaning a wide variety of 3D content can be rendered)

And, of course, the style must be something that the algorithms can create in an automated fashion without any human intervention. This last constraint is what will make rendering animation feasible (it's coming).

- Greg

I'm looking forward to seeing more stuff from you. It's always a pleasure to see you do new renders. They are always outstanding and sometimes actually jaw-dropping. You have a style all your own that's a pleasure to watch as it develops even more. :)

These look fantabulous. I don't think they are oversaturated, but oversaturated is better than under because I think color/saturation corrections are easy in post work. I do realize you are trying to avoid the necessity of postwork though.

Thanks, @Artini. I’ve never used Topaz Studio. Haven’t you also rendered DAZ assets in Unity? Or am I thinking of somebody else?

- Greg

Ahhhhhh YOU'RE BACK! This makes me very happy! These renders are a joy to look at and I'm looking forward to seeing more with this style!

The saturation really only bothers me on my phone, but not as much on monitors. And even on the phone, it's mostly the skin that bugs me. Thanks for chiming in on that, and thanks for stopping by you two - it's much appreciated. Going to try to force myself to post on a fairly regular basis . . . we'll see how that goes! lol

- Greg

Yes, Unity is another thing I visit pretty often. I am trying to get the best of the 2 worlds: Daz 3D and Unity.

Thanks, Diva - move-in day is supposedly Tuesday, so things will be a bit hectic for a while, but once they settle down there will be a lot of rendering going on!

- Greg

Not sure what happened with your comment, @Headwax_Carrara, but creating a way to treat eyes differently has been something I've been working on for a while now. Many components of my automated workflow are "black boxed", meaning that they can be individually developed without affecting the process overall. When a component is updated, the new version is simply substituted in to replace the old one - inserted into the starting line-up when ready, if you will.
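To make the "black box" idea a bit more concrete, here's a minimal sketch of stages that all share one interface, so a new version can be registered under the same name and drop into its existing slot in the line-up. This is my own illustration, not the actual workflow: the `Pipeline` class, the stage names, and the dict-of-passes interface are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical interface: every stage takes a dict of named passes/images
# and returns an updated dict, so stages never depend on each other's internals.
Stage = Callable[[Dict[str, object]], Dict[str, object]]


class Pipeline:
    def __init__(self) -> None:
        self._order: List[str] = []          # the fixed "starting line-up"
        self._stages: Dict[str, Stage] = {}  # current version of each stage

    def register(self, name: str, stage: Stage) -> None:
        """Add a new stage, or swap a new version of an existing one into its slot."""
        if name not in self._stages:
            self._order.append(name)
        self._stages[name] = stage

    @property
    def order(self) -> List[str]:
        return list(self._order)

    def run(self, passes: Dict[str, object]) -> Dict[str, object]:
        data = dict(passes)
        for name in self._order:
            data = self._stages[name](data)
        return data


# Usage: replacing "smooth" keeps its position in the line-up.
pipe = Pipeline()
pipe.register("depth", lambda d: {**d, "focus": "from z-depth"})
pipe.register("smooth", lambda d: {**d, "smooth": "v1"})
pipe.register("shade", lambda d: {**d, "shade": "flat"})
pipe.register("smooth", lambda d: {**d, "smooth": "v2"})  # drop-in replacement
result = pipe.run({"render": "beauty pass"})
```

Because nothing downstream knows which version of a stage ran, swapping one in doesn't require touching the others.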

One such component that's being developed actively now is the generation of the depth pass and how it's utilized. My algorithms basically use the z-depth pass to "focus" on different parts of an image by drawing them differently. What I've been working on is a rig within DS that allows me some artistic license when it comes to defining exactly where, and how much, focus certain areas get (above and beyond being based on depth). Eyes are a good example of where this will be used.
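In its very simplest form, using z-depth to "focus" could look something like the sketch below: map depth to a 0-1 focus weight, then map that weight to a drawing parameter such as stroke width. The linear mapping, the `near`/`far` values, and stroke width as the parameter being driven are all my assumptions; the real algos presumably change much more than line weight.

```python
# Hypothetical mapping from z-depth to a per-pixel "focus" weight, and from
# focus to a stroke width. near/far and the width range are assumed values.

def depth_to_focus(depth: float, near: float, far: float) -> float:
    """Return 1.0 at the near plane, 0.0 at (or beyond) the far plane."""
    if far <= near:
        raise ValueError("far must be greater than near")
    t = (depth - near) / (far - near)
    t = min(max(t, 0.0), 1.0)  # clamp to [0, 1]
    return 1.0 - t


def stroke_width(focus: float, min_px: float = 1.0, max_px: float = 4.0) -> float:
    """Closer (more focused) areas get heavier lines."""
    return min_px + (max_px - min_px) * focus


def focus_map(depth_pass, near, far):
    """Apply depth_to_focus to every pixel of a depth pass (list of rows)."""
    return [[depth_to_focus(d, near, far) for d in row] for row in depth_pass]


widths = [[stroke_width(f) for f in row]
          for row in focus_map([[1.0, 3.0, 5.0]], near=1.0, far=5.0)]
```

Here a pixel at the near plane gets a 4 px stroke, one halfway back gets 2.5 px, and one at the far plane gets 1 px.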

I totally hear what you were saying about eyes, but was waiting to reply to your comment as I was hoping to have some images to show that illustrate the concept, but time has been hard to find. I'll post again on this as soon as I can.

One other thing that I'd like to mention is that eyes have given me trouble because scaling line art is always tricky. This is a topic which requires/deserves much more attention than I can afford to give it right now, but I'll come back to it.

- Greg

So I really shouldn't be spending any time on this stuff today, but a completely different approach in a batch run last night has yielded some interesting results:

There is a lot of work to be done on this new approach as it does not work in the general case. And there are obviously issues with some of the shading in this image (mostly I would like for there to be no shading on the skin), but it's promising!

Must focus and stop playing with this now . . .

- Greg

Oh that's really cool! It looks almost like a Copic marker drawing! Awesome!

Thanks, Diva - couldn't help myself and kept working a bit on it today:

I think the shading is ok (maybe not ideal), but the eyes definitely still need work. The process I was describing a couple of posts back in response to @Headwax_Carrara's comments should help with that, though. Pretty psyched with the results!

- Greg

That's excellent, Greg! I can totally envision a comic book in that style! :D

As for the eyes, maybe darkening them would help give the algos something a bit more to work with? *shrug* I'm just making wild suggestions since I don't have a clue how your algos work. lol I just know I'm a big fan of them and the styles of art you've been able to create! :D

Will have to go back and have a look at her eye color in the analysis passes, but you're probably right. The algos may still have trouble drawing a dark circle that small in size, though. They're trying to find lines to draw - not filled-in shapes, if that makes sense. Like you suggested, they do "try harder" to draw lines in darker areas than lighter areas. In general, it may be nice to be able to render lighter color eyes, but for this style it doesn't matter.
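One simple way to get the "try harder in darker areas" behaviour is an edge test whose contrast threshold shrinks as local luminance drops. This is a hypothetical sketch, not how the algos actually work: `base` and `dark_bias` are made-up parameters, and the luminance weights are the standard Rec. 709 ones for linear RGB.

```python
# Hypothetical: accept an edge when local contrast exceeds a threshold that
# shrinks in darker regions, so lines are found more readily in dark areas.
# base and dark_bias are illustrative values, not tuned parameters.

def luminance(r: float, g: float, b: float) -> float:
    """Relative luminance from linear RGB in [0, 1] (Rec. 709 weights)."""
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def edge_threshold(local_lum: float, base: float = 0.10, dark_bias: float = 0.5) -> float:
    """Full threshold at white, reduced by the factor (1 - dark_bias) at black."""
    return base * (1.0 - dark_bias * (1.0 - local_lum))


def is_edge(contrast: float, local_lum: float, **kwargs: float) -> bool:
    return contrast >= edge_threshold(local_lum, **kwargs)
```

With these numbers, a contrast of 0.07 counts as an edge in a black region (threshold 0.05) but not in a white one (threshold 0.10). Note that this alone doesn't help with a tiny dark iris: a line finder still sees a filled shape whose edges are only a few pixels apart.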

This new style utilizes neural nets to perform a style transfer, which is very popular these days. This is a completely different approach to how the existing algos I've written function. I've looked at this approach in the past (deepart.io was the first I came across years ago), but found it to be hit or miss - definitely not practical for the general case (wide variety of subject matter, scale, etc.).

Since I first played with them years ago, I've come up with a few tweaks to the process in an attempt to address this issue. Rather than starting with a 3D render + a reference style image as the inputs, I started with the output from my algos + a reference style image. This seems to help dramatically, presumably because lines have been drawn already by my algos. I also believe it helps that the input is already skewed towards dark lines with lighter shading (as opposed to a more even distribution like you'd see in a 3D render or photograph).

There is also another benefit of starting with the output from my algos as the input to the neural nets: it gives me access to all of the analysis passes used, as well as every intermediate image, derivative mask and finished style. It was through using these that the shading on the skin was quickly isolated and changed to something more appropriate in an automated fashion.
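As an illustration of the kind of mask-driven fix described above (not the actual method, which isn't spelled out here), the sketch below flattens shading inside a mask by blending each masked pixel toward the mask's average colour. The image/mask layout, the soft mask weights, and the choice of the masked mean as the fill colour are all assumptions.

```python
# Hypothetical sketch: flatten shading inside a mask (e.g. a skin mask from the
# analysis passes) by blending each pixel toward the mask's average colour.
# Images are lists of rows of (r, g, b) floats; masks hold weights in [0, 1].

def masked_mean(image, mask):
    """Mask-weighted average colour."""
    total = [0.0, 0.0, 0.0]
    weight = 0.0
    for img_row, mask_row in zip(image, mask):
        for px, m in zip(img_row, mask_row):
            for i in range(3):
                total[i] += px[i] * m
            weight += m
    if weight == 0.0:
        raise ValueError("mask is empty")
    return tuple(t / weight for t in total)


def flatten_masked(image, mask, fill=None):
    """Blend masked pixels toward a flat fill colour (default: the masked mean)."""
    if fill is None:
        fill = masked_mean(image, mask)
    return [
        [tuple(c * (1.0 - m) + f * m for c, f in zip(px, fill))
         for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


image = [[(0.2, 0.1, 0.1), (0.8, 0.6, 0.5)],
         [(0.0, 0.0, 1.0), (0.4, 0.3, 0.3)]]
skin_mask = [[1.0, 1.0],
             [0.0, 0.0]]
flat = flatten_masked(image, skin_mask)
```

Fully masked pixels take on the flat colour, unmasked pixels pass through untouched, and partial mask values give a soft transition at the mask edge.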

Unfortunately, at the moment I doubt there is any hope of running a batch of any size and getting acceptable results for all cases . . . doh! At least it is now officially in the pipeline, though.

- Greg

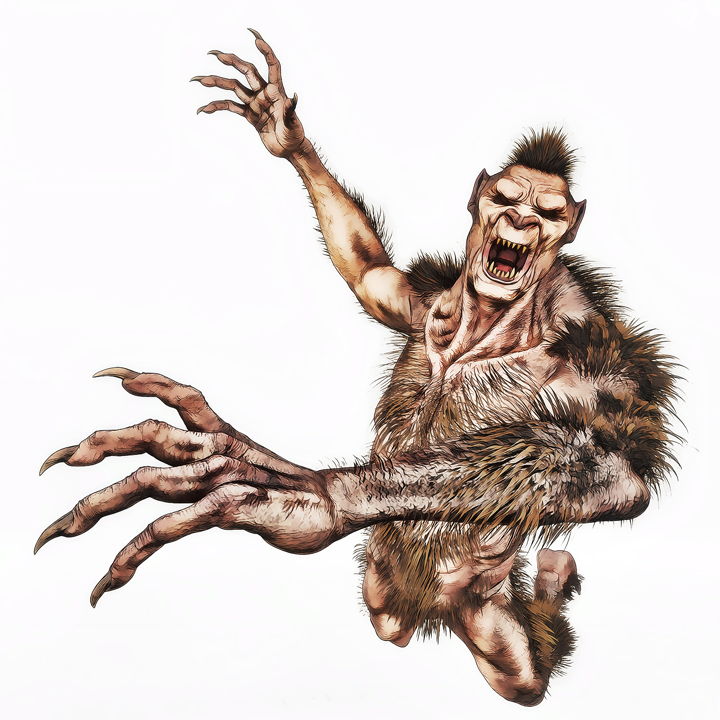

Further work on the new approach:

The thickness of the lines is better in this style. Several adjustments have been made aimed at producing consistent output for the general case. Definitely not looking CGI, which to the rest of the world is a good thing.

- Greg

That looks incredible! As you say, nothing to indicate CGI at all... wow!

Looks terrific as 2D art without hinting at 3D.

Excellent work, Greg! :D

I think the eyes are the only "give away". I really love this style! The different styles you've been able to produce with your algos are mind-blowing!

Keep 'em coming! We love seeing what you're cooking up! :D

Nice work, love these different styles. Apologies for before... I was having a bad day. That's the trouble with being human.

Absolutely no need to apologize. I value everyone's thoughts and comments no matter what, and especially yours since you've got so much experience with NPR.

Eyes have always been something I've found challenging. Using our troll friend from the first post as a concrete example, his iris is only about 5 or 6 pixels in diameter. Where the algorithms draw the line will make a huge difference (centered on the boundary between iris/cornea, as opposed to a literal outline of the iris). A 1 or 2 pixel swing in the size of the iris is huge!

And when you also consider the fact that the lines are in general dark, the equation gets even more complex . . .
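A bit of arithmetic shows why a pixel or two matters so much at this scale. The sketch treats the iris as an ideal disc and ignores anti-aliasing; the 5 px diameter comes from the troll example, while the 1 px line width is an assumed value. A line of width w centred on the boundary covers w/2 inside the disc on each side, so it shrinks the visible diameter by w; a line drawn just inside the boundary shrinks it by 2w.

```python
# Idealised disc, no anti-aliasing; line width and placements are illustrative.

def visible_iris_fraction(diameter_px: float, line_px: float, placement: str) -> float:
    """Fraction of the iris area left uncovered by its outline."""
    inset = {"outside": 0.0, "centered": line_px, "inside": 2.0 * line_px}[placement]
    inner = max(diameter_px - inset, 0.0)
    return (inner / diameter_px) ** 2


def area_change(d_from: float, d_to: float) -> float:
    """Relative change in disc area when the diameter changes."""
    return (d_to / d_from) ** 2 - 1.0


for placement in ("outside", "centered", "inside"):
    print(placement, round(visible_iris_fraction(5, 1, placement), 2))
print("5 px -> 6 px:", round(area_change(5, 6), 2))
print("5 px -> 7 px:", round(area_change(5, 7), 2))
```

For a 5 px iris with a 1 px line, a line drawn outside leaves all of the iris visible, a centred line leaves about 64% of it, and a line drawn inside leaves only about 36%. Going from a 5 px to a 6 px iris adds about 44% more area, and 5 px to 7 px adds about 96%.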

- Greg

Hi Greg, do you have access to normal passes in Studio? You can grab outlines from them - might be handy for the corneas etc?

A normal map shader was one of the first that I authored way back when, but currently it's not one of the render passes used as input. I believe the fresnel pass (which is currently being rendered) pretty much provides the same information a tangential normal map would provide, though.
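One way to see the overlap between the two passes: a facing-ratio or fresnel-style term depends only on the angle between the surface normal and the view direction, which is exactly what a normal pass stores per pixel. The sketch below derives such a term from normals and also shows the outlines-from-normals idea, flagging pixels whose neighbour's normal differs by more than a threshold angle. Camera-space normals, a view direction along +z, Schlick's approximation with `f0 = 0.04`, and the 30° threshold are my assumptions; I don't know how the DS fresnel shader actually computes its pass.

```python
import math

# Assumptions: normals are camera-space (x, y, z) tuples and the view direction
# is +z. Zero-length normals (e.g. background) are left as-is and never flag edges.

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return v if length == 0.0 else tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def facing_ratio(normal, view=(0.0, 0.0, 1.0)):
    """1.0 when the surface faces the camera, 0.0 at a silhouette."""
    return abs(dot(normalize(normal), normalize(view)))


def schlick_fresnel(normal, f0=0.04, view=(0.0, 0.0, 1.0)):
    """Schlick's approximation: rises from f0 head-on to 1.0 at grazing angles."""
    cos_theta = facing_ratio(normal, view)
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


def normal_edges(normals, max_angle_deg=30.0):
    """Flag a pixel when its right or lower neighbour's normal differs by > max_angle_deg."""
    h, w = len(normals), len(normals[0])
    cos_limit = math.cos(math.radians(max_angle_deg))
    edges = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            n = normalize(normals[y][x])
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w and dot(n, normalize(normals[ny][nx])) < cos_limit:
                    edges[y][x] = True
    return edges


# A flat surface facing the camera next to one seen edge-on.
edges = normal_edges([[(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]])
```

In this tiny example only the middle pixel is flagged, because it is the one whose right-hand neighbour turns 90° away from the camera.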

That being said - not really sure how to ultimately render eyes. My preference is to have everything automated, and even if automated, not define special case handling for eyes, or anything else for that matter. In general, I find that outlining can soften/bloat things, and plays a significant role in the filtered NPR look that is so frowned upon.

It should be noted that the examples I've posted start with the default Iray or 3DL materials (like in the case @Divamakeup pointed out). Creating eye materials made specifically for the algos and applying them before generating the analysis passes is an option that may help. Like the other special case carve-outs, my preference is to avoid this if possible, though.

- Greg

ETA: Shouldn't have said default here - what I meant was the mats that come with the products.

wow amazing how far you are getting under the bonnet

I just work on carrara render passes in post and that is what I have noticed about the normal map

very long shot - maybe you could do facial recognition that identifies the eyes and treats them differently?

I could definitely render out another mask pass for eyes and then use it in processing, but my preference would be to use a more general tool.

One such tool is already in development - it's basically an asset for DS that allows one or more billboards to be positioned in front of the camera and rendered only for the z-depth pass. Each billboard can be morphed, positioned, and keyframed. They basically allow me to define areas to "focus" on (draw differently in the various styles). It's done while setting up the scene and keeps animation possible, since the billboards can be keyframed.

Here's a concrete example showing the z-depth pass with and without the billboards focusing on the wolf's eyes:

The image on the left is just the straight z-depth. You can see in the processed image below that portions of the wolf closer to the camera are drawn differently than those far away. The image on the right shows the billboards' contribution, which causes the algos to focus even more on the eyes:
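Conceptually, a billboard that only shows up in the z-depth pass just pulls the depth of the pixels it covers closer to the camera, so depth-driven "focus" increases there. Here's a rough sketch of that compositing plus a simple keyframed billboard distance. The smaller-is-closer depth convention, the per-pixel `min`, and the linear keyframe interpolation are my assumptions; DS has its own keyframe interpolation and depth conventions, and `composite_depth`/`keyframed_value` are hypothetical names.

```python
import math

# Hypothetical convention: smaller depth = closer to camera; pixels a billboard
# does not cover hold math.inf in the billboard layer.

def composite_depth(scene, billboards):
    """Per-pixel nearest surface, so billboards pull the areas they cover 'closer'."""
    return [[min(s, b) for s, b in zip(s_row, b_row)]
            for s_row, b_row in zip(scene, billboards)]


def keyframed_value(frame, keys):
    """Linear interpolation between (frame, value) keys; held constant outside them."""
    keys = sorted(keys)
    if frame <= keys[0][0]:
        return keys[0][1]
    if frame >= keys[-1][0]:
        return keys[-1][1]
    for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)
            return v0 + t * (v1 - v0)


inf = math.inf
scene = [[5.0, 5.0, 5.0],
         [5.0, 5.0, 5.0]]
bb_depth = keyframed_value(12, [(0, 2.0), (24, 4.0)])  # billboard drifts back over time
billboard = [[inf, bb_depth, inf],
             [inf, inf, inf]]
focused = composite_depth(scene, billboard)
```

At frame 12 the billboard sits at depth 3.0, so the one pixel it covers reads as closer than the rest of the scene while everything else keeps its original depth.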

I could do the same for the human figure's eyes, or the bird's eyes, or anything else in the scene for that matter (doesn't have to be eyes).

- Greg

Just discovered this thread. Very beautiful works! Can't even guess that they were renders initially.

I don't fully understand though. You use.. daz studio with several npr rendering tools, is that right? While most of your models are custom zbrush/etc. done? And you don't "texture", instead you do details and colours through your (npr) rendering passes?