No need to argue: Daz Iray is good for main characters in stills, one or two characters only. Everything else kicks its butt. I myself only use Iray on main characters, do everything else in 3DL or OpenGL, and composite in GIMP. I prefer game engine animation tools because I am not a professional and I just want fast and easy results. I purchased my first copy of iClone here at Daz, when Daz itself was offering it for sale, and that sold me on game engine animation. Yes, I know it is not as good as everything else, but game engine animation is what consumers can easily use. Pros have the deep pockets for commercial solutions and hardware. Consequently, I would like to see something easier than iClone's 3DXchange for moving Daz assets to game engine platforms for animation, or conversely, a game engine to play with inside Daz Studio.

Only quick outdoor renders in the birthday suit, with no actual scene and just an HDRI, get done that fast for me lol. Indoor is usually closer to an hour or more.

As far as I have noticed, Daz scenes are not really optimized. The OP's 30-minute render in the hands of an Iray expert (not me, I believe in brute force like a 2080 Ti) would probably drop to 15 minutes, and it would look just as good. Scenes in games, on the other hand, are very well optimized since, well, they have to run in real time. Of course 15 minutes vs. 1 second is still a huge difference, but it's the same against pretty much any normal render engine. Maybe with Octane or Cycles you can get 13 minutes vs. 1 second, but it's still a huge difference. Don't get me wrong, I love game engines, and I'd love to have 1-second renders with Iray/Octane/Cycles too, but I'm afraid that's never going to happen.

Also, this whole conversation is revolving around archviz. Even I can make decent Unity scenes with just environments, but when I bring in characters, the difference from Iray is like night and day. Maybe there are some special magic shaders for characters I haven't found yet, but the difference in quality in human skin shaders is still really big in my opinion. Same with Eevee for Blender: a great engine, but I just can't get results as good as with Cycles or Iray. I've seen videos of UE digital humans, and Reallusion is also advertising their new skin shaders, but I really haven't had time to test those out yet. Maybe those are really good and close to Iray quality, but my experiments haven't been very successful yet.

Since the Iray render time the OP stated is being discussed, I'd like to add that the 1 second for the Unreal image is likely wrong too.

I don't know the video the shot was taken from, but there are more impressive ray tracing demos running in real time, so a frame there takes 1/30th of a second at most.

IMO that makes a huge difference. Faster rendering is very desirable, but real-time rendering is a different beast.
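To make the gap concrete, here is a small sketch that turns the figures quoted in this exchange into per-frame budgets and speed ratios. The only inputs are numbers from the thread: the hypothetical 15-minute "expert" Iray render, the OP's 1-second claim, and a 1/30th-of-a-second real-time frame.

```python
def frame_budget_ms(fps: float) -> float:
    """Milliseconds available to draw one frame at a given frame rate."""
    return 1000.0 / fps


def speed_gap(offline_seconds: float, realtime_seconds: float) -> float:
    """How many real-time frames fit into the time of one offline frame."""
    return offline_seconds / realtime_seconds


if __name__ == "__main__":
    for fps in (30, 60):
        print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")

    iray_seconds = 15 * 60  # the '15 minutes in expert hands' estimate from the thread
    print(f"15 min vs. the 1 s claim: {speed_gap(iray_seconds, 1.0):.0f}x")
    print(f"15 min vs. a 30 fps frame: {speed_gap(iray_seconds, 1 / 30):.0f}x")
```

Against a 30 fps frame the ratio is about 27,000x rather than 900x, which is why "faster rendering" and "real-time rendering" are different targets.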

About optimization, it's more the game engines and the way they handle things that make the difference. Scenes/geometry too, but to a lesser degree.

Since I come from a game background I rather struggle to build stuff in a non-gamey way. ;)

Characters... yeah, that's where the difference is still most noticeable.

But some examples are also quite close, e.g. Senua (Hellblade) looked really good.

Anyway, I don't see this as an either-or thing, but rather as something that should be merged together in the long run.

Progress in fields of realtime and videogame graphics is amazing.

However, I'm happy that I can also appreciate how good game graphics from older years looked, even if they're very barebones and primitive from a "pro 3D artist" viewpoint. It's always amazing when you know how much they got out of the hardware power of the time. Artist work and style matter too.

Sometimes I even liked the "game look" much more than the "CG look", especially when that was the "typical 3ds Max render" look.

Have you actually seen a game texture or model in the last 5 years? Did you know that gaming models can routinely have more polygons than a base Genesis?

Base Genesis + hair + clothes and accessories is still more than the average game character has. "In the last 5 years", yes, you have game characters with 90k to 200k polys, but it's usually the main character in a game that doesn't have many characters on screen. The average polycount for characters currently is perhaps 40k to 60k polys. Still, big progress: in 2005 you often had 7k to 14k, and in 1999 often 500 to 1,500 (unless it's a fighting game where you have just 2 characters on screen, so you can go crazy).

A lot of people made goofy animations with Source thanks to various mods like Garry's Mod. BTW, Valve has a brand-new Source 2 engine, and they plan to make the tools for using it freely available. It does not do ray tracing, but for non-photoreal work it could be a fun engine to play with.

And some pretty serious animations too (although that's much more likely to be Source Filmmaker than Garry's Mod, as it has a much more powerful suite of keyframing and animation tools).

Even for still images, I still often use Source Filmmaker, because a low horsepower computer can comfortably handle much more complex scenes. Will they look as good? No. Can I be more creative? Frequently.

(Also, despite some serious rigging limits, you can actually get rigged items into it, whereas DS's import function invariably throws a tantrum when I try to import any model that has bones in it).

For its age, the original Source engine is unusual in that it does support proper reflections, albeit to a limited extent. No, it's not true ray tracing, but you can get perspective-correct reflections in surfaces, whereas many game engines don't bother at all (leading to many bathrooms with conveniently grubby mirrors). (Fair warning: the following image features an arachne/spider daemon, so anyone who is particularly arachnophobic might not want to click through.) This was a silly\* image I did around Halloween last year, and all of the reflections on the floor are as rendered in Source.

\* A standard Halloween gag for me is dressing up my various fantastical characters as not-quite-right characters from popular culture. The previous year had the arachne as Spider-Gwen, the AI as Motoko Kusanagi from "Ghost in the Shell", and the half-dragon as the Dragonborn from Skyrim.

Actually, although it has its flaws, there are a lot of ways in which Source Filmmaker does hands down beat DS:

- there's an honest-to-goodness particle system.

- really easy locking and constraining of almost anything to anything else. I will frequently lock a model's head rotation to the world so that I can then adjust the exact angle of their neck or torso without affecting where their head is looking.

- navigating around the scene and manipulating models is an absolute dream in comparison.
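The head lock in the second bullet is essentially a world-space orientation constraint: whenever a parent joint turns, the constrained joint's local rotation is recomputed so its world rotation stays put. Here is a simplified 2D sketch of that idea, assuming a single torso → neck → head chain with angles in degrees. It is illustrative only, not how Source Filmmaker implements constraints internally.

```python
def world_angles(chain):
    """Accumulate local rotations (degrees) down a parent -> child chain, in 2D."""
    result, total = [], 0.0
    for local in chain:
        total += local
        result.append(total)
    return result


def lock_last_joint_to_world(chain, locked_world_angle):
    """Recompute the last joint's local rotation so its world rotation stays fixed."""
    parent_world = sum(chain[:-1])
    locked = list(chain)
    locked[-1] = locked_world_angle - parent_world
    return locked


if __name__ == "__main__":
    chain = [10.0, 5.0, -15.0]            # torso, neck, head (local angles)
    head_world = world_angles(chain)[-1]  # where the head is currently looking
    chain[1] += 20.0                      # turn the neck...
    chain = lock_last_joint_to_world(chain, head_world)
    print(chain, world_angles(chain))     # ...and the head's world angle is unchanged
```

In 3D the equivalent step is `local = inverse(parent_world) * locked_world` using quaternions or matrices. Simply subtracting angles only works here because 2D rotations commute.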

With a more sophisticated graphical engine behind them, animation tools built on game engines could be a very major consideration in the amateur or even semi-professional markets in the years to come. I think it's fair to say that even the Source engine reaches a level of animation and shading high enough to make it a very capable storytelling tool, and it's now fifteen years old (Half-Life 2 was released in November 2004).

In 2006 I considered Source much more photorealistic-looking than, say, Far Cry or Doom 3, even though Doom 3 had more advanced tech like dynamic stencil shadows and bump mapping.

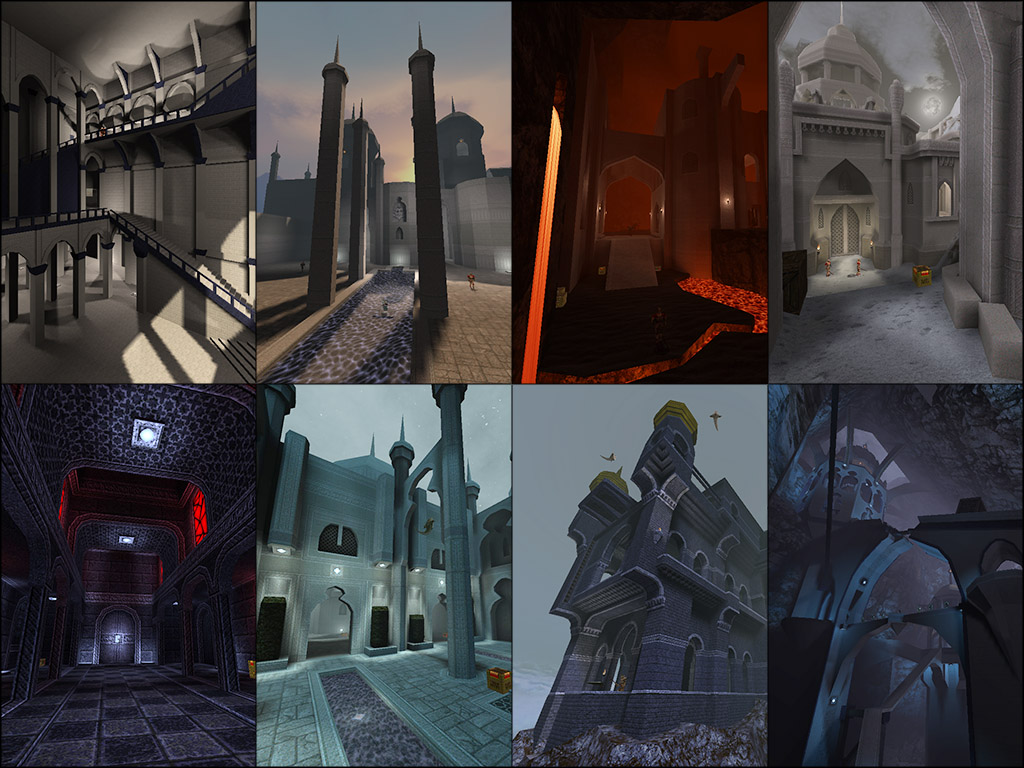

But then again, for me there is beauty even in Quake 1 (these are user-made levels from the modern community, of course):

A video of an ArchVis sample from Unreal's free content, with the OP's Lydia standing a lot better than in my first retarget attempt

Can I ask why you chose to retarget? I'm thinking of an easier workflow, i.e. exporting the animations from Daz. I've uploaded a G2 to Mixamo, downloaded the animation FBX, applied the FBX to a G2 character in Daz, saved the G2 character "pose", and then merged that with a G3 character, exporting that as an FBX which I'm loading and applying to the Daz rig in Unreal. I think I could do this with a lot of other animations without needing to retarget? I just won't be able to use the mannequin rig in Unreal.

Based on many posts I'm seeing, not only here but in other topics, it looks like more and more people are starting to use Unreal or other engines outside Daz, not for games but for animation and rendering. It makes me think it would be really, really good if Daz noticed this and started to give it more support.

There used to be a lot of models from Daz 3D available on the Unity Asset Store (Morph 3D), but they are gone for now.

I do not know the reason for that, but I guess the competition in the game engine stores is huge.

Agreed, but it's "almost" there already via FBX. There are problems that need fixing though, e.g. eye materials and so on. It seems easier to retarget an FBX from some older Daz formats (Mixamo) to current Genesis models than it is to retarget the Daz rig to the Unreal Humanoid inside Unreal Engine. That's the big takeaway for me from messing about this weekend.

Well, indeed the eye materials are always a little troublesome, but once you learn how to deal with them things get a little better. My only real complaint is the insane number of materials you get for a single character when exporting to Unreal: basically a material for the face, one for the ears, one for the arms, one for the torso, another for the legs, one for the teeth, one for the mouth, and even for the eyes alone you have 4 or 5 materials. It would be cool if you could put everything in a single material, or at least reduce that insane number of materials to something less annoying.

About the skeleton: if you follow the instructions I've given, you can get used to it pretty fast, especially using the bone-renaming program. It cuts the work by more than half, because you lose more time mapping all the bones than, for example, adjusting the poses or other things. It looks bad at the beginning, but later it becomes pretty easy and fast. You only need the "pose stuff" if you are using Unreal animations; if you go for Daz animations, you can skip it.
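The bone-renaming program isn't named in the thread, so this is only a sketch of what such a tool does at its core: a lookup table from Daz bone names to the names the Unreal side expects, applied to the skeleton and to any animation that targets it (which is why a renamed skeleton needs renamed animations too). The mapping below is an illustrative assumption, not an official Daz or Epic table, and the sketch works on plain name lists; actually rewriting an FBX would need a tool such as Blender or the FBX SDK.

```python
# Illustrative, partial mapping from Daz Genesis 3/8-style bone names to
# UE4-mannequin-style names. Check every entry against your own exported skeleton.
DAZ_TO_UE4 = {
    "pelvis": "pelvis",
    "abdomenLower": "spine_01",
    "abdomenUpper": "spine_02",
    "chestUpper": "spine_03",
    "neckLower": "neck_01",
    "head": "head",
    "lCollar": "clavicle_l",
    "lShldrBend": "upperarm_l",
    "lForearmBend": "lowerarm_l",
    "lHand": "hand_l",
    "lThighBend": "thigh_l",
    "lShin": "calf_l",
    "lFoot": "foot_l",
    "lToe": "ball_l",
}


def mirror_left_to_right(mapping):
    """Add right-side entries by swapping the Daz 'l' prefix and the UE '_l' suffix."""
    result = dict(mapping)
    for daz_name, ue_name in mapping.items():
        if daz_name.startswith("l") and ue_name.endswith("_l"):
            result["r" + daz_name[1:]] = ue_name[:-2] + "_r"
    return result


def rename_bones(bone_names, mapping):
    """Rename bones; names without an entry (twist bones, fingers, face) pass through."""
    renamed = [mapping.get(name, name) for name in bone_names]
    if len(set(renamed)) != len(renamed):
        raise ValueError("mapping produced duplicate bone names")
    unmapped = [name for name in bone_names if name not in mapping]
    return renamed, unmapped


def rename_tracks(tracks, mapping):
    """Apply the same mapping to per-bone animation tracks so they match the skeleton."""
    return {mapping.get(bone, bone): keys for bone, keys in tracks.items()}


if __name__ == "__main__":
    full_map = mirror_left_to_right(DAZ_TO_UE4)
    print(rename_bones(["pelvis", "lShldrBend", "rShldrBend", "lShldrTwist"], full_map))
```

Reporting the unmapped bones matters because those are the ones you would otherwise have to map by hand in Unreal's retargeting setup.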

My real big complaint is about setting up the materials; it's annoying as hell. To be fair, the normal maps and specular maps from Daz are in many cases really bad: they don't properly catch the pores and things like that, so I normally need to redo those maps (I'm using the NormalMap Online site). I then normally use an external normal map, specular map, and ambient occlusion map because they give better results. Another trick is to convert the textures from JPG to TGA (Targa); Unreal works much better with Targa.
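Tools like the online normal-map generator mentioned above derive a normal map from a height (bump) map. Here is a minimal pure-Python sketch of that idea, assuming the height map is a list of rows of floats in 0..1. Slopes come from central differences and are packed into 8-bit RGB. The `strength` parameter is a made-up knob for illustration, and the green-channel convention (which way "up" points) differs between tools and engines, so check which one your target expects.

```python
import math


def height_to_normal_map(height, strength=2.0):
    """Convert rows of heights (0..1) into rows of 8-bit (R, G, B) normal colours.

    Slopes use central differences, with edge pixels clamped to the border.
    The G channel here follows image rows (y grows downward); flip it if needed.
    """
    rows, cols = len(height), len(height[0])
    out = []
    for y in range(rows):
        row = []
        for x in range(cols):
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * 0.5 * strength
            dy = (down - up) * 0.5 * strength
            nx, ny, nz = -dx, -dy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Map each unit-vector component from [-1, 1] to [0, 255].
            row.append(tuple(round((c / length * 0.5 + 0.5) * 255) for c in (nx, ny, nz)))
        out.append(row)
    return out


if __name__ == "__main__":
    ramp = [[0.0, 0.5, 1.0] for _ in range(3)]  # surface rising to the right
    print(height_to_normal_map(ramp)[1])
```

A perfectly flat height map comes out as the familiar light-blue (128, 128, 255); a real tool adds filtering and higher-precision output on top of this.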

It's really annoying work, but when you get used to it you can get really awesome results.

Another thing about Unreal that maybe many people don't understand is that for realistic skin and characters you don't just use the base texture, normal map, specular, ambient occlusion and metallic maps; you also have to add some extra maps like pore maps and do some extra configuration. It's really troublesome, but if you manage to set things up properly, you can get really stunning results with Unreal, on par with a Daz render, especially since Unreal has some support for NVIDIA shaders if I'm not wrong. The problem is that setting up a good material takes a lot of tweaking and work, and you need to know how to do that inside Unreal, which we normally don't; but once you learn it, you can achieve really amazing work.

I actually managed to retarget the Humanoid to a G3 character. It works quite well (she's currently running around an arena). There are two problems I see. First, her feet aren't flat against the floor. Second, the basic animations raise the shoulders too high, so she looks kind of like she's about to pounce rather than gracefully moving around the scene. For the first problem, I guess there's a technical solution. For the second, I think I need some new animations.

So how do I do this? There are nice, smooth animations in Daz for female walk, high-heel walk and so on. Now that I've retargeted the rig, they aren't going to work (even if I could work out how to get them into Unreal unscathed). Getting a tool chain together that works is hell.

The first one must be the capsule: adjust the collision capsule and check that the character sits properly inside it. Here is my example:

Don't pay attention to the head; for some reason it didn't stop playing the idle animation. The point is to show the capsule's position and size for the character.
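For the feet-not-flat problem, the arithmetic behind the capsule fix is simple, under two assumptions: the skeletal mesh's pivot sits between its feet, and the capsule's origin is at its centre (as with Unreal's Character capsule). Given those, the mesh has to be lowered by the capsule's half height so the soles sit on the capsule's bottom. This is a sketch; `fit_capsule` and `headroom_cm` are hypothetical names, and the results would go into the Capsule Half Height / Capsule Radius fields and the Mesh component's Z location.

```python
from dataclasses import dataclass


@dataclass
class CapsuleSetup:
    half_height: float    # cm, from the capsule centre to the tip of a cap
    radius: float         # cm
    mesh_offset_z: float  # cm, relative Z location of the skeletal mesh


def fit_capsule(character_height_cm, radius_cm, headroom_cm=0.0):
    """Size a capsule around a character whose mesh pivot sits at its feet."""
    # A capsule's half height cannot be shorter than its radius.
    half_height = max((character_height_cm + headroom_cm) / 2.0, radius_cm)
    # The capsule origin is its centre, so lower the mesh by the half height
    # to put the feet on the bottom of the capsule.
    return CapsuleSetup(half_height, radius_cm, -half_height)


if __name__ == "__main__":
    print(fit_capsule(170.0, 34.0))
```

If the feet still float or sink after this, the mesh pivot is probably not at the feet, and its actual offset has to be added to `mesh_offset_z`.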

About the animation: yeah, the basic Unreal animation is made "for male", and even so it is very weird. Just use it as a base to build your animation blueprint, and swap it out as soon as you get proper animations.

About using the animations from Daz: I really don't know how to export Daz animations to Unreal. I've tried and wasn't able to. Can someone who knows how to do that give a small tutorial?

About retargeting: as long as they use the same skeleton, it's not an issue, and by "the same" I really mean that. If you used the program to rename the bones, you also need to do the same for the animations so they use the skeleton inside Unreal.

Edit: a quick update about how to export Daz animations to Unreal; it's really simple. Select the character you want to animate (in your case, the G3 character you exported to Unreal), then go to the Timeline or aniMate2/aniMate Lite tab and click in the aniMate folder; the animation will be there. Then right-click anywhere in that tab other than the keyframe timeline, and a menu with some options will appear. Choose the "Bake to Studio Keyframes" option, which bakes the animation into the skeleton. Then export the character with the animations option checked.

After that, move the FBX to Unreal. When the import window appears, uncheck the "Import Mesh" option, leaving only Skeletal Mesh checked. Below that there is an option where you can choose a skeleton to bake the animations onto; choose the skeleton of the character you already exported. It will then import only the animation (not the mesh again).
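The same animation-only import can also be scripted. The sketch below assumes UE 4.2x with the Python Editor Script Plugin enabled, and uses the editor's `unreal` module (`FbxImportUI`, `AssetImportTask`, `AssetToolsHelpers`). Property names can change between engine versions, and the function name and asset paths are hypothetical. It mirrors the manual steps: Import Mesh off, animations on, and the existing character skeleton selected.

```python
def import_animation_only(fbx_path, destination_path, skeleton_asset_path):
    """Import only the animation from an FBX onto an existing skeleton.

    Must run inside the Unreal Editor with the Python Editor Script Plugin enabled.
    """
    import unreal  # only available inside the editor

    skeleton = unreal.load_asset(skeleton_asset_path)

    options = unreal.FbxImportUI()
    options.set_editor_property("import_mesh", False)   # the "Import Mesh" checkbox, unchecked
    options.set_editor_property("import_as_skeletal", True)
    options.set_editor_property("import_animations", True)
    options.set_editor_property("skeleton", skeleton)   # reuse the already-imported character's skeleton
    options.set_editor_property("mesh_type_to_import", unreal.FBXImportType.FBXIT_ANIMATION)

    task = unreal.AssetImportTask()
    task.set_editor_property("filename", fbx_path)
    task.set_editor_property("destination_path", destination_path)
    task.set_editor_property("options", options)
    task.set_editor_property("automated", True)  # no import dialog
    task.set_editor_property("save", True)

    unreal.AssetToolsHelpers.get_asset_tools().import_asset_tasks([task])
    return task


# Example (hypothetical paths), from the editor's Python console:
# import_animation_only("C:/exports/g3_walk.fbx", "/Game/G3/Animations", "/Game/G3/G3_Skeleton")
```

Scripting only pays off once you are importing many baked clips; for a single animation the import dialog described above is quicker.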

Hey, what program do you use to record videos from the PC and upload to YouTube? Sometimes I want to record some videos, but I never found a good program for that, ideally free or something close to it.

NVIDIA ShadowPlay and HitFilm Express.

I play the editor in a new window at 1920x1080, set in the project preferences.

In each level blueprint I add an Esc key event node linked to a Quit Game node, so I can close the extra editor window after capture.

Another thing about when people compare games to Iray and say how far they need to go: they do not often consider that the game they are comparing to is, well, a game. Games are typically optimized to run at 60 frames per second, so of course the assets are geared towards that target.

But you do not have to do that! If you are NOT making a video game, then you can go hog wild on the settings and assets. A video game engine CAN use SSS (and many do), in case anybody is wondering. A game engine can use much higher-resolution textures than games normally do, at the cost of performance. But if all you want to do is capture animation, then you are not so worried about performance. It could be rendering at 5 frames per second; that would be totally fine, and anybody using Daz Iray will tell you they would KILL for 5 frames per second, LOL. You can adjust many settings in Iray to get faster renders, but it is pretty much impossible to render as fast as a game engine with Iray. You could place game models in there and it just wouldn't matter; Iray is not capable of that. Iray's only hope for speed like that is denoising, and I know a lot of you do not like denoising. BTW, game engines can use denoising as well.
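The 5 fps point is easy to quantify. This small sketch totals the render time for every frame of a clip; the 10-second, 30 fps clip is a hypothetical example, and the 15-minutes-per-frame Iray figure is the estimate from earlier in the thread.

```python
def clip_render_seconds(clip_seconds, playback_fps, seconds_per_frame):
    """Total time needed to render every frame of a clip."""
    return clip_seconds * playback_fps * seconds_per_frame


def hms(total_seconds):
    """Format a duration in seconds as 'Hh Mm Ss'."""
    hours, rest = divmod(total_seconds, 3600)
    minutes, seconds = divmod(rest, 60)
    return f"{int(hours)}h {int(minutes)}m {seconds:.0f}s"


if __name__ == "__main__":
    clip_seconds, playback_fps = 10, 30  # hypothetical clip: 300 frames
    print("game engine capturing at 5 fps:", hms(clip_render_seconds(clip_seconds, playback_fps, 1 / 5)))
    print("Iray at 15 min per frame:", hms(clip_render_seconds(clip_seconds, playback_fps, 15 * 60)))
```

For this clip that is one minute of capture versus 75 hours of rendering, which is the gap the "KILL for 5 frames per second" remark is pointing at.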

I think a lot of people who are overlooking game engines are not considering that possibility. Game engines are powerful because of how flexible they are. They can now use ray tracing. You can adjust the number of rays cast and the number of bounces (which I know Iray can adjust some as well). Obviously performance takes a hit, but it'll still be faster than Iray. HOWEVER, the game engine can still use its normal game engine tricks on top of ray tracing. You CANNOT do that with Iray. You cannot use any fake lighting in Iray. The closest you may get are ghost lights. So game engines allow you to mix and match both, you can do this for performance reasons, or you can do this as a stylistic choice. The artistic choice is more important and is a major advantage for gaming engines.

I think we all know how tough it is to render a fire in Iray. Face it, glowing embers, sparks, and magical effects all suck in Iray. But a game engine is built for these effects, so a game engine can combine them with ray-traced, physically based lighting at the same time, without needing Photoshop to add them in post. And it can do all this very fast. Iray has no method to replicate this.

That will be one of the biggest reasons why gaming engines will become ever more popular among animators, because they have that choice and ability to combine those effects with ray tracing and PBR materials. So you have this incredible flexibility combined with the speed of the rendering, all that is needed are better tools for the process. That is why I keep saying that whoever gets out the best software package that takes advantage of a game engine to animate will be in great shape.

And Epic is not screwing around. They bought Quixel and who knows what they will do next. It is obvious they are not just interested in games. Epic wants to conquer Hollywood, too. They will not sit still for long and they will keep on making big moves like Quixel. Because of how aggressive they are, I would absolutely not bet against them. Unity has some good stuff, too, but they don't have the financial muscle Epic has to do the things that Epic is doing.

Here is how to activate Iray's built-in alternate rendering mode, purpose-built for achieving high-quality, game-engine-level graphics in relative real time: in Render Settings, switch Render Mode from "Photoreal" to "Interactive".

At minimum you will need to play around with the rest of the options under Editor tab > Optimization in both places (changing Render Mode to "Interactive" completely changes/extends what this menu has to offer) to get workable results. But if your objective is to get animation-adequate (i.e. UE-level) graphics out of Iray, and render speed rather than absolute photorealism is the priority, tweaking up this mode is the way to go, rather than attempting to tweak down Photoreal (since Photoreal inherently gives you fewer options).

As to how rendering performance actually stacks up: using the Daz scene set originally pictured in this thread as an example (which, by the way, is a terrible choice for a visual comparison between modern-day Iray and UE, since it doesn't include Iray-native shaders or lighting), a Titan RTX (so roughly a 2080 Ti) gets you around 11 frames per second in the Iray preview for a 1080p-sized window with 115% system-wide screen scaling (or around 3 fps with the window maximized on a 4K screen). This is easily determined by checking the log file, which should look something like this if you've got things configured properly:

```text
2019-12-09 15:53:44.172 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : Scene graph manager init took 0.000s
2019-12-09 15:53:44.258 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : rendered frame 0169 in 0.085958s, 11.6fps (internal: 0.079671s, 12.6fps, overhead 7.3%)
2019-12-09 15:53:44.262 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : Scene graph manager init took 0.000s
2019-12-09 15:53:44.348 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : rendered frame 0170 in 0.085508s, 11.7fps (internal: 0.078924s, 12.7fps, overhead 7.7%)
2019-12-09 15:53:44.349 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : Scene graph manager init took 0.000s
2019-12-09 15:53:44.436 Iray [INFO] - IRT:RENDER :: 1.0 IRT rend info : rendered frame 0171 in 0.087870s, 11.4fps (internal: 0.080833s, 12.4fps, overhead 8.0%)
```
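Reading the fps off by eye works for a few frames, but if you want an average over a longer run, a small Python sketch can pull the per-frame times out of the log. It assumes the `rendered frame NNNN in X.XXXXXXs, Y.Yfps` wording shown in the log lines above; other Daz/Iray versions may format these lines differently. Because it scans with `re.finditer`, it also works when the entries end up run together on one line.

```python
import re

# Matches per-frame lines like: "rendered frame 0169 in 0.085958s, 11.6fps"
FRAME_LINE = re.compile(r"rendered frame (\d+) in ([\d.]+)s, ([\d.]+)fps")


def frame_stats(log_text):
    """Return (number_of_frames, average_fps) computed from the per-frame times."""
    seconds = [float(m.group(2)) for m in FRAME_LINE.finditer(log_text)]
    if not seconds:
        return 0, 0.0
    return len(seconds), len(seconds) / sum(seconds)


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:  # usage: python iray_fps.py path/to/log.txt
        with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
            count, fps = frame_stats(f.read())
        print(f"{count} frames, average {fps:.1f} fps")
```

Averaging the frame times (rather than the per-line fps values) weights every frame equally, so one slow frame pulls the result down the way it would in a captured video.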

I wondered why that hadn't come up.

I just followed those instructions; good to know that, by the way. However, I don't like the result: a bit too toonish for my taste, but of course it is all subjective, and some people might prefer that look. There's probably a host of adjustments that can be made to get closer to photoreal in Interactive mode, so I'll spend more time with it when I can.

The following Unreal Engine image takes one second to render:

The following is a DAZ STUDIO / NVIDIA IRAY RENDER, which can take 30 minutes or more:

What's going on here? I understand that Unreal Engine assets are optimized, but still, a ONE-second render vs. 30-minute renders? Something is up, guys. Something is SEVERELY WRONG here.

If Unity and Unreal Engine can produce high-quality renders in less than a second, then there's no reason NVIDIA Iray can't adopt a mode in which it operates at the same level as Unity or Unreal Engine, just for the sake of faster renders (for making animations, for example).

I think companies like Unity and Epic (Unreal Engine) should get into the rendering business and compete against DAZ Studio; that would probably push DAZ Studio to come up with a fast rendering solution.

Well, IMO, what's "wrong" with it is that UE isn't actually rendering everything. OK, stuff that changes, like specularity and reflections, may be real-time RTX hardware driven, but the rest of it? Do light rays bounce around to infinity in those UE4 demonstrations? I think not. It's prebaked: preset light, props and so on. So instead of rendering, stuff just loads when it's needed, and advances in hardware and game engines make it appear more realistic.

So the DAZ Studio equivalent is not Iray rendering; it's loading some apartment into the scene and "walking" through it in the viewport.

Here's Iray Interactive in use in iClone. Doesn't look bad. It probably depends on your settings, etc.

It is not just about speed, people...

Iray was a massive FAILURE in the iClone community because it does not support the smoke, fire and other real-time particle effects that iClone users had come to depend on for their animations... Unreal can do these types of things and is far more versatile for filmmaking, beyond making BORING brute-force archviz, portrait/pinup and consumer product renders.

Personally, I think UE4 is awesome and a platform that will become increasingly popular. Technology is moving fast into real-time visualization and interactive 3D; as soon as UE4 (and Unity too) gets better FBX and rigging implementation, they will be the choice for the vast majority of users, from hobbyists to professionals. As of yesterday, the latest UE4 release (4.24) shows the first integration of dynamic hair and fur, a physically based sky atmosphere and a lot more. Their development is relentless and they bring in great features with every new release. I'm very thrilled about these new features and possibilities.

Comments

No need to argue: Daz Iray is good for main characters in stills, one or two characters only. Everything else kicks its butt. I myself only use Iray on main characters and do everything else in 3DL or OpenGL and composite in GIMP. I prefer the game engine animation tools because I am not a professional and I just want fast and easy results. I purchased my first copy of iClone here at Daz when they themselves were offering it for sale, and that sold me on game engine animation. Yes, I know it is not as good as everything else, but game engine animation is what consumers can easily use. Pros have the deep pockets for commercial solutions and hardware. Consequently, I would like to see something easier than iClone's 3DXchange for moving Daz assets to game engine platforms for animation or, conversely, give us a game engine to play with inside of Daz Studio.

30 minutes?! I would run through the streets naked if I could get an Iray render completed in 30 minutes!

Only quickie outdoor renders in birthday suit, with no actual scene, just an HDRI, get done that fast for me lol. Indoor is usually closer to an hour or more.

Since the OP's stated Iray render time is being discussed, I'd like to add that the 1 second for the Unreal image is likely wrong too.

I don't know the video the shot was taken from, but there are more impressive ray tracing demos running in real time, so it's likely 1/30th of a second per frame or less.

IMO that makes a huge difference. Faster rendering is very desirable, but real-time rendering is a different beast.

About optimization, it's more the game engines and the way they handle stuff that makes the difference. Scenes/geometry too, but to a lesser degree.

Since I come from a game background, I actually struggle to build stuff in a non-gamey way. ;)

Characters... yeah, that's where the difference is still most noticeable.

But some examples are also quite close, e.g. Senua (Hellblade) looked really good.

Anyway, I don't see this as an either-or thing, rather as something that should be merged together in the long run.

The video (I googled the title):

Download for the scene demo, cooked as a game:

https://darulsolutions.com/Downloads

Sadly, as it's a cooked game, all you can do is explore it, so I cannot export an OBJ to test in DAZ Studio.

Progress in fields of realtime and videogame graphics is amazing.

However, I'm happy that I can also appreciate how good game graphics from older years looked, even if they're very barebones and primitive from a "pro 3D artist" viewpoint. It's always amazing when you know how much they got out of the hardware of the time. Artist work and style matter too.

Sometimes I even liked the "game look" much more than the "CG look", especially if that was the "typical 3ds Max render" look.

p.s.

Base Genesis + hair + clothes and accessories = still more than the average game character has. "In the last 5 years", yes, you have game characters with 90k to 200k polys, but it's usually the main character in a game that doesn't have many characters on screen. The average polycount for characters currently is perhaps 40k to 60k. Still, big progress: in 2005 you often had 7k to 14k, and in 1999 often 500 to 1,500 (unless it's a fighting game, where you have just 2 characters on screen and can go crazy).

In 2006 I considered Source to look much more photorealistic than, say, Far Cry or Doom 3, even though Doom 3 had more advanced tech like dynamic stencil shadows and bump mapping.

But then again, for me there is beauty even in Quake 1 (these are user-made levels from the modern community, of course):

A video of an ArchViz sample from Unreal's free content, with the OP's Lydia standing a lot better than in my first retarget attempt.

Can I ask why you chose to retarget? I'm thinking of an easier workflow, i.e. exporting the animations from Daz. I've uploaded a G2 to Mixamo, downloaded the animation FBX, applied the FBX to a G2 character in Daz, saved the G2 character "pose" and then merged that with a G3 character, exporting that as an FBX, which I'm loading and applying to the Daz rig in Unreal. I think I could do this with a lot of other animations without needing to retarget? I just won't be able to use the mannequin rig in Unreal.

I do both

I put loop-animated figures in too; I just wanted an interactive one.

Based on many posts I'm seeing, not only here but in other topics, it looks like more and more people are starting to use Unreal or other engines outside Daz, not for games but for animation and rendering. It really makes me think it would be really, really good if Daz started to notice this and give it more support.

There used to be a lot of models from Daz 3D available on the Unity Asset Store (Morph 3D),

but they are gone for now.

I do not know the reason for that, but I guess the competition in the game engine stores is huge.

Agreed, but it's "almost" there already via FBX. There are problems that need fixing though, e.g. eye materials and so on. It seems easier to retarget an FBX from some older Daz formats (Mixamo) to current Genesis models than it is to retarget the Daz rig to the Unreal Humanoid inside Unreal Engine. That's the big takeaway for me from messing about this weekend.

Well, indeed, the eye materials are always a little troublesome, but once you learn how to deal with them things get a little better. My only real complaint is the insane number of materials you get for a single character when exporting to Unreal: basically a material for the face, one for the ears, one for the arms, one for the torso, another for the legs, one for the teeth, one for the mouth, and even 4 or 5 just for the eyes. It would be cool if you could put everything in a single material, or at least reduce that insane number of materials to something less annoying.

About the skeleton: if you follow the instructions I've given, you can get used to it pretty fast, especially using the bone-renaming program. It cuts the work by more than half, because you lose more time mapping all the bones than, for example, adjusting the poses or other stuff. It looks bad at the beginning, but later it becomes pretty easy and fast. You only need the "pose stuff" if you are using Unreal animations; if you go for Daz animations, you can skip it.

My biggest real complaint is the "setting up the materials" stuff. It's annoying as hell, especially because, to be fair, the normal maps or specular maps from Daz are in many cases really bad: they don't properly capture the pores and things like that, so I normally need to redo those maps (I'm using the NormalMap Online site). I normally use an external normal map, specular and ambient occlusion because they give better results. Another trick is to convert the textures from JPG to TGA (Targa); Unreal works much better with Targa.

It's really annoying work, but when you get used to it you can get really awesome results.

Another thing about Unreal that many people may not understand is that, for realistic skin and characters, you don't just use the base texture, normal map, specular, ambient occlusion and metallic maps; you also have to add some extra maps, like pore maps, and do some extra configuration. It's really troublesome, but if you manage to set things up properly you can get stunning results with Unreal, on par with a Daz render, especially since Unreal does have some support for NVIDIA shaders, if I'm not wrong. The real problem is that setting up a good material takes a lot of tweaking and work, and you need to know how to do that inside Unreal, which we normally don't. But once you learn it, you can achieve really amazing work.

Hmmm, I'm sure this could be arranged.

I actually managed to retarget the Humanoid to a G3 character. It works quite well (she's currently running around an arena). There are two problems I see. First, her feet aren't flat against the floor. Second, the basic animations raise the shoulders too high, so she looks kind of like she's about to pounce rather than gracefully moving around the scene. For the first problem, I guess there's a technical solution. For the second, I think I need some new animations.

So how do I do this? There are nice, smooth animations in Daz for female walk, high-heel walk and so on. Now that I've retargeted the rig, they aren't going to work (even if I could work out how to get them into Unreal unscathed). Getting a tool chain together that works is hell.

The first one must be the capsule: you must adjust the collision capsule and check that the character is properly inside it. Here's my example:

Don't pay attention to the head; for some reason it didn't stop doing the idle animation. The point is to show the capsule position and size for the character.
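For the feet-not-flat problem, one quick sanity check is the arithmetic between the capsule and the mesh. A minimal Python sketch, under assumptions that are mine rather than from the post: the mesh pivot is at the soles of the feet, the capsule is centered on the actor origin (as with Unreal's default Character, where the capsule is the root), and the capsule half-height is measured from its center to the end of the rounded cap. Under those assumptions the mesh has to sit one half-height below the actor origin. The helper fit_capsule, the 34 cm default radius and the 170 cm height are purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class CapsuleFit:
    half_height_cm: float    # capsule center to end of the rounded cap
    radius_cm: float
    mesh_z_offset_cm: float  # relative Z location for the skeletal mesh


def fit_capsule(character_height_cm, radius_cm=34.0):
    """Hypothetical helper: size a capsule around a standing character.

    Assumes the mesh pivot is at the feet and the capsule is centered on
    the actor origin, so the feet meet the capsule bottom (the floor) when
    the mesh is moved down by exactly one half-height.
    """
    half_height = character_height_cm / 2.0
    if radius_cm > half_height:
        # A capsule can't be wider than it is tall.
        raise ValueError("radius cannot exceed half-height")
    return CapsuleFit(half_height, radius_cm, -half_height)


if __name__ == "__main__":
    print(fit_capsule(170.0))
```

For a 170 cm character this gives a half-height of 85 and a mesh Z offset of -85. If the feet still float or sink after that, the mesh scale or the animation itself is the next thing to look at.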

About the animation: yeah, the basic Unreal animation is for a male, and even then it's very weird. Just use it as a base to build your Animation Blueprint, and as soon as you get proper animations, swap it out.

About using animations from Daz: I really don't know how to export Daz animations to Unreal. I've tried here and wasn't able to. Can someone who knows how to do that give a small tutorial?

About retargeting: as long as they use the same skeleton, it's not an issue, and by "the same" I really mean that. If you used the program to rename the bones, then you also need to do the same for the animations so they use the skeleton inside Unreal.
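To illustrate the "same skeleton" point: whatever renaming you apply to the character's bones has to be applied identically to every animation, or the tracks won't line up with the skeleton inside Unreal. A minimal Python sketch of that check follows. The mapping is a small, hypothetical subset pairing some Genesis 3 bone names with UE4 Mannequin-style names; it is not the mapping used by the renaming program mentioned above.

```python
# Hypothetical, partial mapping from Genesis 3 bone names to
# UE4 Mannequin-style names; a real mapping has to cover every bone.
G3_TO_UE = {
    "lCollar": "clavicle_l",
    "lShldrBend": "upperarm_l",
    "lForearmBend": "lowerarm_l",
    "lHand": "hand_l",
    "lThighBend": "thigh_l",
    "lShin": "calf_l",
    "lFoot": "foot_l",
}


def rename(names, mapping):
    """Apply a bone-name mapping; names not in the mapping are kept unchanged."""
    return [mapping.get(name, name) for name in names]


def unmatched_tracks(skeleton_bones, animation_tracks):
    """Return animation track names that have no bone of the same name."""
    bones = set(skeleton_bones)
    return [track for track in animation_tracks if track not in bones]


if __name__ == "__main__":
    g3_bones = ["lThighBend", "lShin", "lFoot", "head"]
    skeleton = rename(g3_bones, G3_TO_UE)  # bones renamed on the exported mesh

    # Animation renamed with the same mapping: every track finds its bone.
    print(unmatched_tracks(skeleton, rename(g3_bones, G3_TO_UE)))
    # Animation left with the original Daz names: the renamed bones are missed.
    print(unmatched_tracks(skeleton, g3_bones))
```

The first check comes back empty; the second lists lThighBend, lShin and lFoot, which is exactly the "renamed the mesh but not the animation" mismatch.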

Edit: a quick update on how to export Daz animations to Unreal; it's really simple. Select the character you want to animate (in your case, the G3 character you exported to Unreal), then go to the Timeline / aniMate2 (or aniMate Lite) tab and click the animation in the aniMate folder so it shows up there. Then right-click anywhere in that tab outside the keyframe timeline; a menu with some options will appear. Choose "Bake To Studio Keyframes", which bakes the animation into the skeleton. Then export the character with the animation export option checked.

After that, bring the FBX into Unreal. When the import window appears, uncheck the "Import Mesh" option, leaving only "Skeletal Mesh" checked. Below that there is an option to choose a "Skeleton" for the animation; choose the skeleton of the character you already exported. It will then import only the animation (not the mesh again).

be happy ^^

Hey, what program do you use to record videos from the PC and upload to YouTube? Sometimes I want to record some videos, but I've never found a good program for that, especially a free one or something close to it.

NVIDIA ShadowPlay and HitFilm Express.

I run Play In Editor in a new window at 1920x1080, set in the preferences.

On each level blueprint I add an Esc key node linked to a Quit Game node, so I can close the extra editor window after capture.

Another thing: when people compare games to Iray and say how far games still need to go, they often don't consider that the game they're comparing to is, well, a game. Games are optimized to run at typically 60 frames per second, so of course the assets are geared towards that target.

But you do not have to do that! If you are NOT making a video game, then you can go hog wild on the settings and assets. A game engine CAN use subsurface scattering (SSS), and many do, in case anybody is wondering. A game engine can use much higher-resolution textures than games normally do, at the cost of performance. But if all you want to do is capture animation, then you are not so worried about performance. It could be rendering at 5 frames per second and that would be totally fine, and anybody using Daz Iray will tell you they would KILL for 5 frames per second, LOL. You can adjust many settings in Iray to get faster renders; however, it is pretty much impossible to render as fast as a game engine with Iray. You could put game models in there and it just wouldn't matter; Iray is not capable of that. Iray's only hope for that kind of speed is denoising, and I know a lot of you do not like denoising. BTW, game engines can use denoising as well.
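To put the per-frame numbers from this thread side by side for animation, here's some back-of-the-envelope arithmetic in Python. The 10-second, 30 fps clip is an arbitrary example; the per-frame figures are the ones quoted in the thread (30 minutes per Iray frame from the OP, about 11.6 fps from the Iray preview log, 5 fps from this post, 30 fps real time). It ignores scene loading, denoising and encoding, so treat it as a rough comparison only.

```python
CLIP_SECONDS = 10  # hypothetical clip length
FPS_OUT = 30       # frame rate of the finished animation

# Seconds needed to render one frame, per the figures quoted in the thread.
SECONDS_PER_FRAME = {
    "Iray photoreal (30 min/frame)": 30 * 60,
    "Iray interactive (~11.6 fps)": 1 / 11.6,
    "game engine at 5 fps": 1 / 5,
    "game engine real time (30 fps)": 1 / 30,
}


def render_time_seconds(seconds_per_frame, clip_seconds=CLIP_SECONDS, fps=FPS_OUT):
    """Total render time for a clip at a fixed per-frame cost."""
    return seconds_per_frame * clip_seconds * fps


if __name__ == "__main__":
    print(f"{CLIP_SECONDS * FPS_OUT} frames")
    for label, spf in SECONDS_PER_FRAME.items():
        total = render_time_seconds(spf)
        print(f"{label}: {total:,.0f} s ({total / 3600:.2f} h)")
```

For 300 frames that works out to 540,000 seconds (150 hours) in the 30-minute-per-frame case, versus roughly 26 seconds, 60 seconds and 10 seconds for the other three.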

I think a lot of people who are overlooking game engines are not considering that possibility. Game engines are powerful because of how flexible they are. They can now use ray tracing, and you can adjust the number of rays cast and the number of bounces (which I know Iray can adjust to some extent as well). Obviously performance takes a hit, but it'll still be faster than Iray. HOWEVER, the game engine can still use its normal tricks on top of ray tracing. You CANNOT do that with Iray: you cannot use any fake lighting in Iray, and the closest you may get are ghost lights. So game engines let you mix and match both, either for performance reasons or as a stylistic choice. The artistic choice is more important and is a major advantage for game engines.

I think we all know how tough it is to render a fire in Iray. Face it, glowing embers, sparks and magical effects all suck in Iray. But a game engine is built for these effects, so it can combine them with ray-traced, physically based lighting at the same time, without needing Photoshop to add them in post. And it can do all of this very fast. Iray has no method to replicate this.

That will be one of the biggest reasons game engines will become ever more popular among animators: they give you the choice and ability to combine those effects with ray tracing and PBR materials. So you have this incredible flexibility combined with rendering speed; all that's needed are better tools for the process. That is why I keep saying that whoever releases the best software package that uses a game engine for animation will be in great shape.

And Epic is not screwing around. They bought Quixel and who knows what they will do next. It is obvious they are not just interested in games. Epic wants to conquer Hollywood, too. They will not sit still for long and they will keep on making big moves like Quixel. Because of how aggressive they are, I would absolutely not bet against them. Unity has some good stuff, too, but they don't have the financial muscle Epic has to do the things that Epic is doing.

My Iray test, with the original UE4 at the end.

I used DAZ shaders on most; on some I swapped in maps where I could, as materials don't load and not many maps exported in a usable form anyway.

the same set in Twinmotion

1/30th of a second. 30 frames every second. Without lag. Game engines are awesome.

Here's some news that may be relevant to this topic:

https://www.youtube.com/watch?v=GGW-TrnGgK8

As you can see, Unreal is really moving toward a lot more than just games, especially rendering, and it's improving a lot.

Here is how to activate Iray's built-in alternate rendering mode, purpose-built for achieving high-quality, game-engine-level graphics in relative real time:
