Licensing Agreement | Terms of Service | Privacy Policy | EULA

© 2024 Daz Productions Inc. All Rights Reserved.

Comments

As I sip my first cup of coffee and think about all the times I've been told "I'm the less than 1% that wants" X, Y, or Z, and read through some comments.

Yep, I am the one percent that cares more about the performance of a computer instead of how much it looks like a cheap plastic toy from a dollar store, lol. I don't need to look that far to see proof of that, as I take a quick look down memory lane, lol.

I actually stopped buying some brands and models of memory sticks due to that, even tho the other brands stepped away from thermal pads and heat spreader clips in favor of styrofoam double sided sticky tape that I'm sure is more thermal insulator than conductor to hold on the for show only designer plaques. Over the past ten years, I've only seen one memory kit that I would even consider at all, and it's the wrong stuff for what I need.

Because that is an honest real heat spreader with actual cooling fins, not some cheap glow in the dark plastic toy looking thing. If TeamGroup put that heat spreader (with the copper, not aluminum or black faceplate) on a 16GB stick of DDR4-3200 CL14-14-14-1T, I would take 4 of them in a heartbeat. Unfortunately, that stuff is reserved for LN2 overclocking, max-MHz-only kits in the smallest memory sizes that can be assembled on DDR4. I would need to underclock the hell out of them, if they even POSTed at all in my AM4 system, to get down to the stock 2133MHz to 3200MHz memory clocks of Ryzen's IMC. So, yeah, my interest in the new memory kits is lacking at best. At least there are some other trends that I am a tad more excited to hear more about.

Good morning Dave, how are you feeling today?

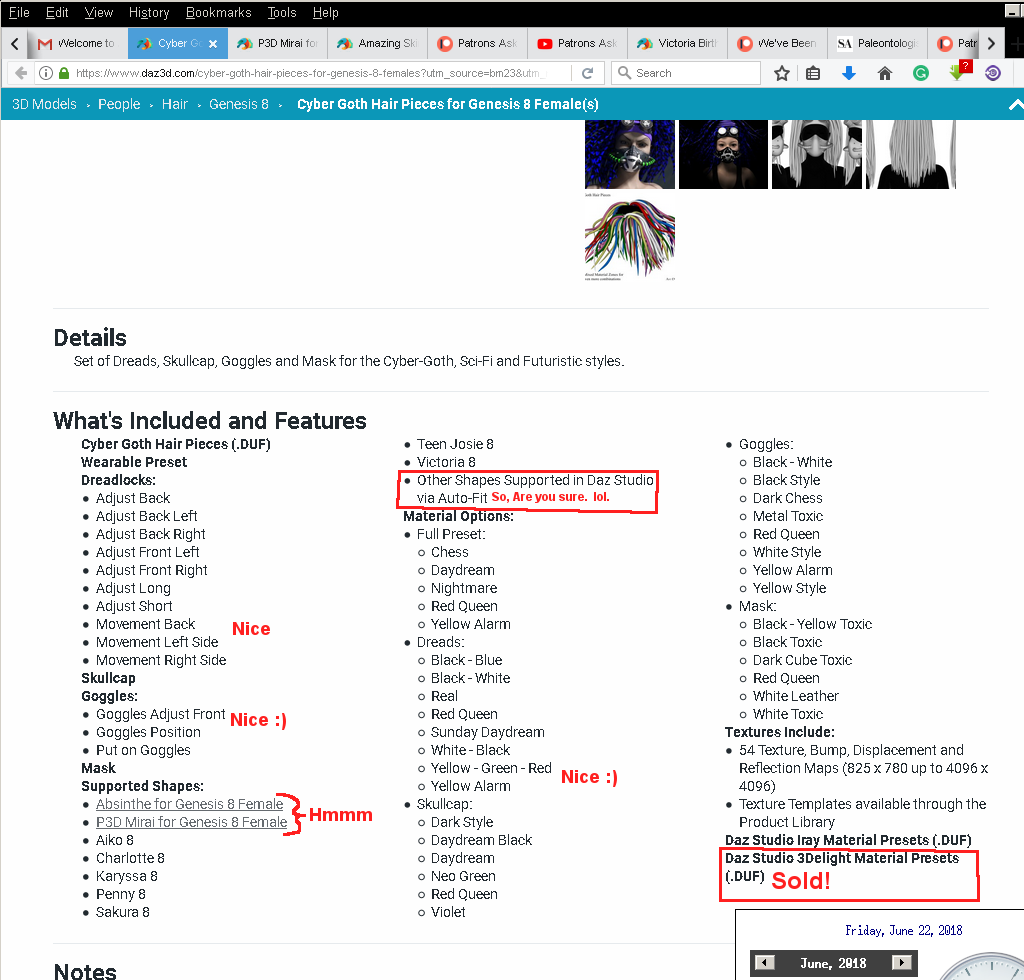

Ah, ok, it's been a week since I've needed that to get some music for a wedding, now that window needs to go away and stop booting up with Windows, somehow. I've also been dragging my feet way too much the past week. So I have a few notes, and then some thoughts on the state of motherboards. First up, a new version of some tech dreads (for gen 8) that looks kind of cool at first. I kind of didn't look at the store in a while and missed a few things that I will get in time (3rd of Jul ish).

That stuff apparently does have 3delight presets, and that's a good thing for me, even if it's only a start to work with for getting the colors down, instead of needing to hunt down a dozen maps hidden off in the depths of the content library somewhere after forcing a 3delight shader onto an Iray preset like other products around. The lack of an over elf ear option is a bit of a letdown, tho not as much I guess, and why I really liked the first version of the Tech dreads for Generation6 (G2F) back in the day. They are not really the same style other than the welding goggles and respirator.

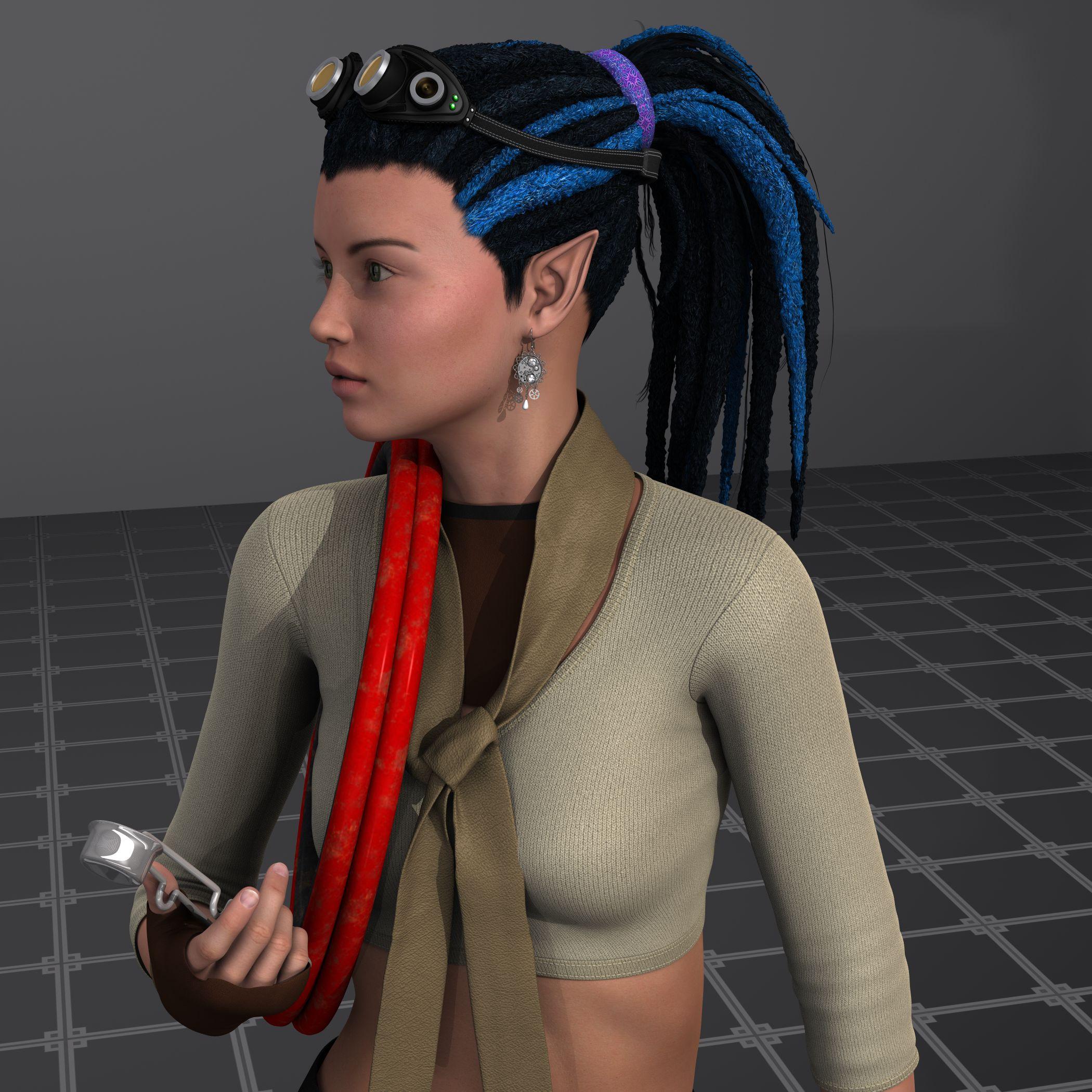

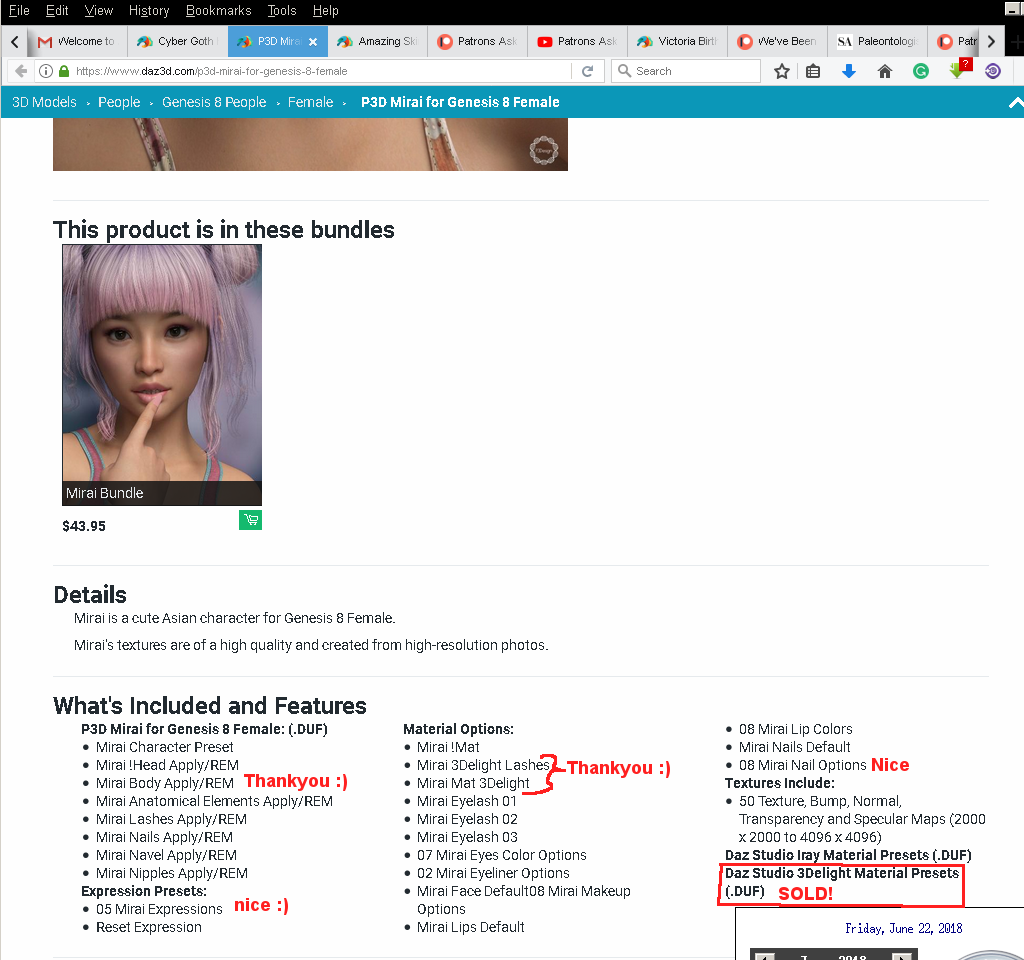

I really like the older style of x-tech dreads for G2F. The render times are really good, and it works flawlessly with any Elf ears. I don't know if the new Gen8 product will be as versatile or not, However, I will give it a try. And Fw Courtney probably will not work with gen8 stuff as I discovered when I tried to put gen8 goth stuff on Fw Aiyana, lol. So I think I will try P3D Mira, not exactly the same as Courtney, tho I may be able to do something there (far closer than looking for a gen8 version of Wachiwi, lol. Thoughts on that pending a cup of coffee and some renders).

Again, 3delight has me sold, I just didn't have the play funds this month to get all the stuff in my want list. (goes to get a link to the store page...)

Huh, what, maybe I do have that. OK, I need a cup of coffee and a look at what I did get, I've been running a few different directions of late, lol. Well, I was going to say I'll get that as well on the third, tho I guess I already have that somewhere in DIM.

I also may have been thinking of another PA for what I was going to mention here when looking through past promos the last few weeks, about not wanting to make both 3delight shaders and Iray shaders for new products, and that I do understand. Daz is fairly persistent that all new products 'must' have Iray presets or you're not likely to even get the product into the Daz store, and making two different sets of surface shader presets for two completely different render engines is a major pain that isn't really worth the ROI put into the product. I know it wasn't P3D that I had asked about an eyebrow preset mistake (it was someone else), and the PA had indicated that it was an early mistake and they had less interest in making 3delight presets for new products. I don't blame them at all, that is a lot of work for a fraction of less than 20 USD per sale. (time for a cup of coffee and to skim over some stuff, as I forget what was what in regard to other stuff)

A State of Affordable VRMs for Production Machine Motherboards

So, to save you some time, I really feel there are only two motherboards that would survive more than a year of 3delight, Marvelous Designer, Z-brush, Blender, etc, production work. One being the Gigabyte "X470 AORUS Gaming 7 WIFI" (for the AMD R7 2700x CPU), even tho the VRM heatsink is more of a marketing gimmick than useful for the overkill VRM and an insult to all the lower end Gigabyte boards that actually need the cooling for production work. The other being the ASUS "ROG Crosshair VII Hero AMD Ryzen 2 AM4" (for the AMD R7 2700x CPU). I've yet to see any Motherboard VRM breakdowns for z370 (Intel i7 8700k) that I feel comfortable saying will survive more than 5k-hours (208 days).

I have for some time now felt that the state of early AM4 boards was headed the same way as the AM3+ boards, which had VRMs barely able to deliver the minimum 125 watts to the FX8xxx CPUs, were labeled as 140 watts capable, and didn't stand a chance when the 220 watt FX9590 came out. I hadn't really looked at the Threadripper boards in depth because I was not impressed with any of the "VRM fashion accessories". I really had the impression that motherboard makers didn't give an "Intel Thermal Paste" about the VRMs on HEDT Threadripper workstation class systems, and it looks like it may be worse than I first thought. And the 2700x isn't as bad, tho it draws more than the first Zen CPUs.

Gaming workloads do NOT compare to production apps, not by a long shot. 3delight uses SSE2 that slams the CPU and CPU VRM just as hard as Prime95, and Blender uses AVX that obliterates the CPU VRM as badly as Prime95AVX, despite all the claims that Prime95 is not a 'proper' "Real world test" by so many in comment sections of vids and on forums that I have seen around the internet. At least Prime95 will not interfere with background tasks like I've experienced with 3delight that will stop video playback dead in its tracks and cause mp3's to skip worse than a CD used to clean an 80 grit belt sander belt.

I know first hand that the render times on the GN Monkey head test are spot on with the 3delight render times I get on most renders without AoA on HD figures, both for the FX8350/8370 on older charts (about 90 minutes), and the R7 1700 in the GN R7 build. Adding AoA on HD figures is an additional 30 to over 40 minutes of render time for the Subsurface compute "Face Plant time" on top of the former times.

Intel i7 8700k (z370), well, despite that it clocks well and is ok for Blender and 3delight on the benchmark charts for the CPU, I have significant doubts about all of the motherboards I've seen so far. BZ has looked at one board that may be ok, however, I would not expect the VRM caps to survive a few years of production work in a render/production build. Also, the lack of memory channels may be a major showstopper for those looking to get 64+GB of memory on the EVGA Z370 Micro, the only board I feel won't be dead within 208 days of rendering nonstop. And this is not a bashing of Intel, this is literally a case of none of the motherboards I've seen so far being able to do it. (EDIT, the "EVGA Z370 Classified K" may be a possibility. After a few hours of digging I haven't been able to find any info on what makes up the VRMs to determine if it's good or a cosmic infernal heat engine, however, the blocks they put on the VRMs do have something resembling surface area for that long lost word, "cooling".)

x299 is NOT z370. The 8700k is a z370 CPU and will NOT work in the EVGA x299 dark, the only other Motherboard with a VRM I feel would survive years of production work. The pitfall of x299 is that the limited memory channels and limited PCIe lanes you get with the i7 CPUs are not worth the cost, and therefore I can not suggest getting an x299 system unless you are putting a multi-thousand-dollar i9 CPU on the EVGA x299 dark. i7 on x299 is NOT worth it, and x299, in general, is NOT what I would call affordable in any Galaxy (not just Sextans B or the Milky Way, lol).

AMD R7 2700x (AM4, x470), well, aside from the former two mentioned Motherboards that I would have no doubt about, there really isn't much at all in the more affordable boards. And that isn't even looking at further limited cooling from all the convection ovens advertised as computer cases made the past ten years. The MSI x470 Gaming Pro Carbon may be "ok", however, I have major doubts about its VRM cooling for production workload tasks.

I know the vid was for B350, however, I can not stress that 208 days (5000 hours) for caps over 100c on undercooled VRMs enough. I have experienced it first hand, these caps did not last a full year before three of them blew the tops, with the CPU at Stock (Not even Overclocked).
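For anyone wanting to play with that 5000 hour figure, here is a rough sketch of the arithmetic. The hours to days conversion is straight from the post. The temperature part is an assumption on my side: it uses the common rule of thumb that electrolytic capacitor life roughly doubles for every 10c below the rated temperature, and the 105c rating is a hypothetical datasheet value, not something measured on that board.

```python
HOURS_PER_DAY = 24


def hours_to_days(hours: float) -> float:
    """Convert continuous run time in hours to days."""
    return hours / HOURS_PER_DAY


def estimated_cap_life_hours(rated_hours: float,
                             rated_temp_c: float,
                             actual_temp_c: float) -> float:
    """Rule-of-thumb estimate (assumption, not a datasheet formula):
    life doubles for every 10 C the capacitor runs below its rated temperature."""
    return rated_hours * 2 ** ((rated_temp_c - actual_temp_c) / 10)


if __name__ == "__main__":
    # 5000 hours of nonstop rendering, as quoted in the post
    print(f"5000 h is about {hours_to_days(5000):.0f} days")

    # Hypothetical 5000 h @ 105 C capacitor at a few VRM temperatures
    for temp_c in (105, 95, 85, 75):
        life = estimated_cap_life_hours(5000, 105, temp_c)
        print(f"{temp_c} C: ~{life:.0f} h (~{hours_to_days(life):.0f} days)")
```

The point of the sketch is only that a few degrees of extra VRM cooling changes the estimate a lot. Real cap life also depends on ripple current and the exact part, so treat the numbers as ballpark.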

Both motherboard makers and case makers are guilty of not giving the VRM enough cooling for anything beyond gaming loads. Buildzoid did go over some methods you can do easily to get VRM cooling a tad better, however, it won't be a good solution for some that care about looks or have a low-end board with a VRM not able to keep up with the needs of the CPU, and there are a lot of bad boards out there not meant for anything more than an HTPC workload.

And one of these days, we will have a small chat about what qualifies as two phases in a VRM, and for a hint, it's none of the four examples in the above joke diagram, lol. If A, B, and C are a single phase, then what is so electrically different about D that makes it two phases and not B or C, lol.

It looks like some at the Blender Foundation have been listening, and if one small thing stays in Blender 2.8, it will be immensely helpful to me: the addition of interface controls for navigating around the scene, similar to what Daz Studio and Hexagon have for moving around and zooming in and out (starting at about 4:52 ish in this vid).

https://youtu.be/hknr7OALhS0?t=292

I tried giving up a function to have a pseudo middle mouse button on my trackball, and it was just a no go. not with either of the upper two buttons, and not with both lower buttons at the same time, and especially not in combination with moving the ball and pressing keyboard buttons at the same time, not with my thumbs. And just using a mouse is excruciating because of my thumbs. I do hate bringing up my thumbs, because it is rare that they get in the way of me doing stuff, However when they do it is very difficult to work around it.

I'm sorry, that is why Blender didn't work for me. Most other apps don't require so much at the same time, to do simple basic things, and I have not felt so limited. If that navigation thing does end up in Blender 2.8, I can not say thanks enough to the Blender team.

I'm sorry I have not posted anything the past few, well, over a week. it's been rather hot to do stuff, and there have been a few non-daz distractions as well. lol.

Like that. I have never suggested getting a GPU with less than 4GB after Iray came to Daz, as that was the suggested minimum, so the only low-end GPU I ever considered was the 4GB 384 CUDA core GK208 (not to be confused with the 96 CUDA core GF108) GT730 Zone Edition from Zotac. The instant it was confirmed that the GT1030 would NOT have 4GB it ceased to be considered by me, and it is a clear indication that Nvidia either can't make a good low watt card or doesn't care at all about the low watt segment. They recently came out with a disgrace to the silent PC market called the GT1030, that is not a typo, same name, worse specs, incredibly insulting specs in all honesty. It's so bad that some apps won't even acknowledge it as a discrete graphics card, lol. The new one uses DDR4, that's right, DDR4, not GDDR, and they couldn't even add more memory to it, same useless 2GB as the old GT1030. It is so pathetic that you are in fact better off getting an AMD 2200G APU (the lowest end model) and using the integrated graphics unit in that, or doing it in software emulation on the CPU, rather than using the DDR4 GT1030-Disgrace. The DDR4 GT1030-Disgrace is effectively the graphics "3D decelerator" of 2018, like the S3 Virge was back in the 90's. Congratulations Nvidia for creating a laughing stock of GPUs on par with the 7740x CPU for the x299 platform. Meanwhile in other news.

This EPS 12v cap mod didn't quite turn out as impressive looking as I thought it would. I didn't expect the wire to fight me that much, and even tho it has the same amount of capacitors as the EPS12v cap in my FX8350 comp, it is rather underwhelming looking. It turned out to look like it's more wire than capacitor, lol. So I'm probably going to look at making that another way, and rearrange the caps and wire to hopefully get it a tad smaller. So, between that and the overwhelming heat the past few days, I didn't get much done at all in Daz.

I looked a tad bit at DES Lavander when it wasn't so unbearably hot to have multi-hundred-watt heaters going, and I think I managed to make some progress. Nothing close to the full presentation I was hoping to have a week ago, lol. In short, I only noticed one minor thing, and I haven’t even had a chance to look at DIM to see if an update happened with a fix for that, and it's not like it was overly difficult to just put the Daz Default shader on the Fiber-brows for 3delight.

Matching the color to the hair was a bit of work, tho that isn't really part of the figure. I chatted briefly with one of the PAs and the lack of a Fiber-mesh 3delight preset was a mistake (a simple one in all honesty), as the other 3delight preset does the rest of the figure, body, gens, and lashes. So I was thinking I was doing something wrong as the preset was doing everything except the fiber-mesh eyebrows. Again, simple mistake, and it's not that difficult to go to the Shaders folder, and to the 'ds' folder and select the Daz default shader for the fiber-mesh eyebrows as I had done here. It's not like the eyebrows need any of the super fancy stuff the other shaders have, a little bit of Specular/Glossiness with a diffuse color and you're good to go. It's fiber-mesh, you don't need to fake things with face plant heavy subsurface or time-consuming velvet, lol. There are other thoughts that are mostly good, I just got to get some screen caps and gather my thoughts into something coherent, lol. P.S. Buildzoid VRM shirt design is NOT included in the Sweet Summer outfit, that was my fumbling shirt design overlay work in gimp.

Spock and Kirk took a look at your 12V CAP layout:

lol, yeah, it's a very 2-dimensional space for a 3-dimensional problem, lol.

A classic case of squeezing higher dimensions into a lower dimensional space. I need to have the wire to each of 8 caps all the same length, or some of them will be exposed to more of the full brunt of the CPU VRM than others.

I don't expect it to be a major prob unless something bad happens to the cans on the motherboard, however I'm going on the safe side of things. By a seat of the pants calculation and a few web searches, them 1uF poly caps can handle around 3 amps of ripple current, each. The R7 1700 doesn't pull anywhere near the plug's max, however, that is a 24 amp plug. So getting the wire lengths the same to all 8 caps is kind of important.
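A quick sketch of why the equal wire lengths matter. The 8 caps, 3 amps each, and the 24 amp plug are from the numbers above. The wire resistance values are made up for illustration, and the model is a deliberately crude one: it treats each cap branch as a plain resistor and ignores capacitor ESR and wire inductance, which matter a lot for real ripple current.

```python
def total_ripple_capacity(n_caps: int, amps_per_cap: float) -> float:
    """Combined ripple rating if every cap carries an equal share."""
    return n_caps * amps_per_cap


def branch_currents(total_amps: float, branch_resistances: list[float]) -> list[float]:
    """Split a current across parallel branches in proportion to conductance (1/R).

    Simplified resistive model (assumption): shorter wire -> lower R -> bigger share.
    """
    conductances = [1.0 / r for r in branch_resistances]
    total_g = sum(conductances)
    return [total_amps * g / total_g for g in conductances]


if __name__ == "__main__":
    print("Total rating:", total_ripple_capacity(8, 3.0), "A")

    # Hypothetical wire resistances in ohms: equal lengths vs one short lead
    equal = [0.010] * 8
    one_short = [0.005] + [0.010] * 7

    for label, wires in (("equal", equal), ("one short", one_short)):
        shares = branch_currents(24.0, wires)
        print(label, [round(a, 2) for a in shares])
```

With equal leads every cap sits right at its 3 amp share at the full 24 amps. With one lead at half the resistance, that one cap takes noticeably more than 3 amps in this toy model, which is the "more of the full brunt" problem.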

The original Idea I had didn't go quite as well as I thought it would.

I'll think of something. lol. FYI, I really do like that 2-dimensional joke, it's great. It's on par with the DDR4 GT1030-scam (Hardware Unboxed's vid) that's going around, lol.

I should be clear on a few points, as I do know that some games run fine with only 2GB. The minimum for Iray and a lot of other CUDA apps is 4GB, and for a system that is going to be an HTPC or audio workstation, gaming performance is not the number one concern, watts and noise are. For a graphics card that burns less than 55 watts so it can passively cool itself, has 4GB and Iray capable CUDA cores, there really is only one option to date, the 4GB 384 CUDA core GK208 from Zotac. I'm more disappointed that Nvidia has refused to put 4GB on the low watt cards than about the naming scam that has fired up lately over the GT1030 vs GT1030 cards.

one of them times when you are between a rock and a hard place. Out of pure conscience, I must at least acknowledge something as it would be just wrong not for others that purchased or may purchase something expecting something to exist that doesn't. I thought I did my homework before buying anything this month, lol.

I did have a bit of a chat with Silver some time ago, and she had indicated that they were moving away from providing 3delight shaders for future products, and I don't blame them for wanting to get away from making shaders for two polar opposite render engines. At least I can thank ADSI for not cursing me with inverted color space maps that are impossible to work with and have look good (looks scoldingly at Nuka). I'm sure the missing 3DL preset with the listing for it on Vernea's product page is a mistake, and one that sort of sucks either way. One option would be to let down customers that purchased it expecting it to be there, by removing the 3delight listing on the store page and telling customers "that was a mistake and tough luck". The other is that ADSI will have to make the shaders for an old product that probably won't have the return on time invested for the work put into making the 3DL presets. I feel bad either way for this. I've already done half the footwork gathering all the maps into a 3delight shader for the renders I was going to make, and am now at the point of adjusting the levels to match the skin tone in the promos. I do have a lot to thank ADSI for with the figure despite all that. All the maps are there, all of them, and the maps are not inverted color space, the eyes don't rotate off to some obscure orientation that breaks pose dials, and there is a separate dial for the head, body, and ear shapes. I can at least work with this.

Speaking of that, lol, I just can't win tonight. This is a brand new outfit for generation 8 that apparently has a few spots of difficulty.

That's part of the reason I keep being tempted to just use the older stuff that just worked (Pre V7 Pro-disaster era). OK, trying to stay positive about this (takes a deep breath)

At least there are a lot more adjustment dials to work with on the Party Monster Outfit than on another I got recently. This is at least usable in its current condition, and is a very nice looking outfit.

And an utmost thanks, WAY more surface zones to work with for setting up 3DL shaders on instead of being stuck with the included Iray only mats, unlike something, well, a few things I purchased last month.

Speaking of new outfits, I totally missed the launch of the Eastern Elegance, that has a very useful set of dials for ADSI Vernea. That odd crumpled up cloth effect under the chest. Fisty has added a fix for that on the past few outfits that works incredibly well for many figures. (ok, time to finish the color adjustments so I can at least post a workaround set of settings until I can have a chance to ask ADSI how they want to proceed with that 3DL thing.)

ok, I sort of like that for tinting, for use on AoA or Omni shaders the values may need to be adjusted some, tho I feel this is a good start. I can probably get the eyes to not be so dark, that's probably an easy fix... it was.

Helps if the eyes have, well, eyes, lol. A spec strength of nothing and lack of diffuse strength tends to make things look dark, lol. I'm liking how this is starting to look with some minor adjustments. Decreased the red a tad on the diffuse, and reduced the saturation on the specular color a tad.

FYI, ^ that's without Aiko8 by the way, just ADSI Vernea by herself.

Sorry, I haven’t posted in a few days, I've been having too much fun playing around with making shirt prints. And other things have been going on as well, in line with the funny shirt prints I've been making. so this is going to be a bit of a multi-angle post as I go over the goings on, lol.

I haven’t forgotten about ADSI Vernea, and I've been subtly hinting that I would like a VRM shirt in black to go with my GN shirt collection, lol.

And Buildzoid has been doing Buildzoid like stuff, so it's kind of only an I would like kind of thing. And that sort of leads to the shirt CB Gwendolin is wearing.

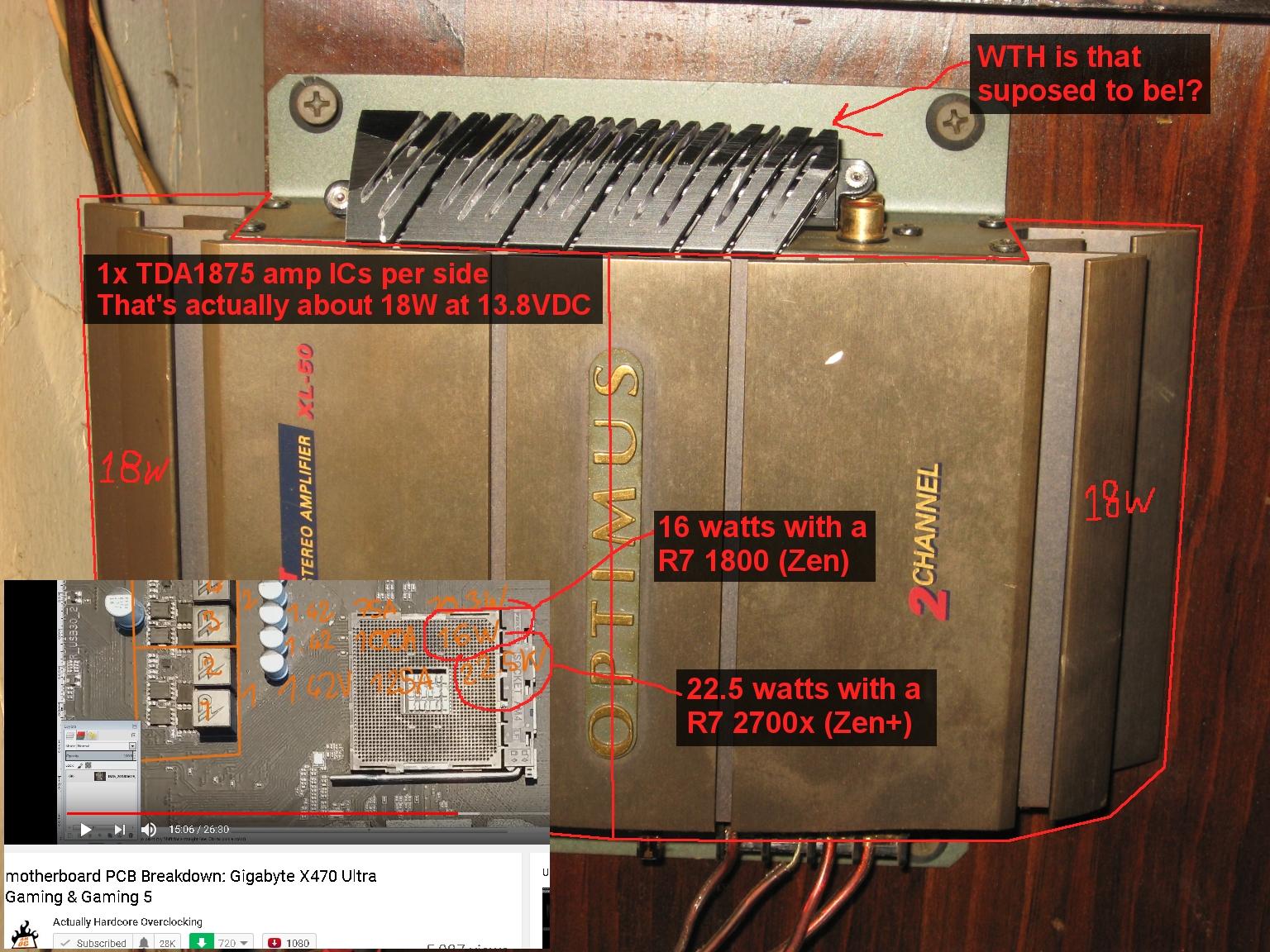

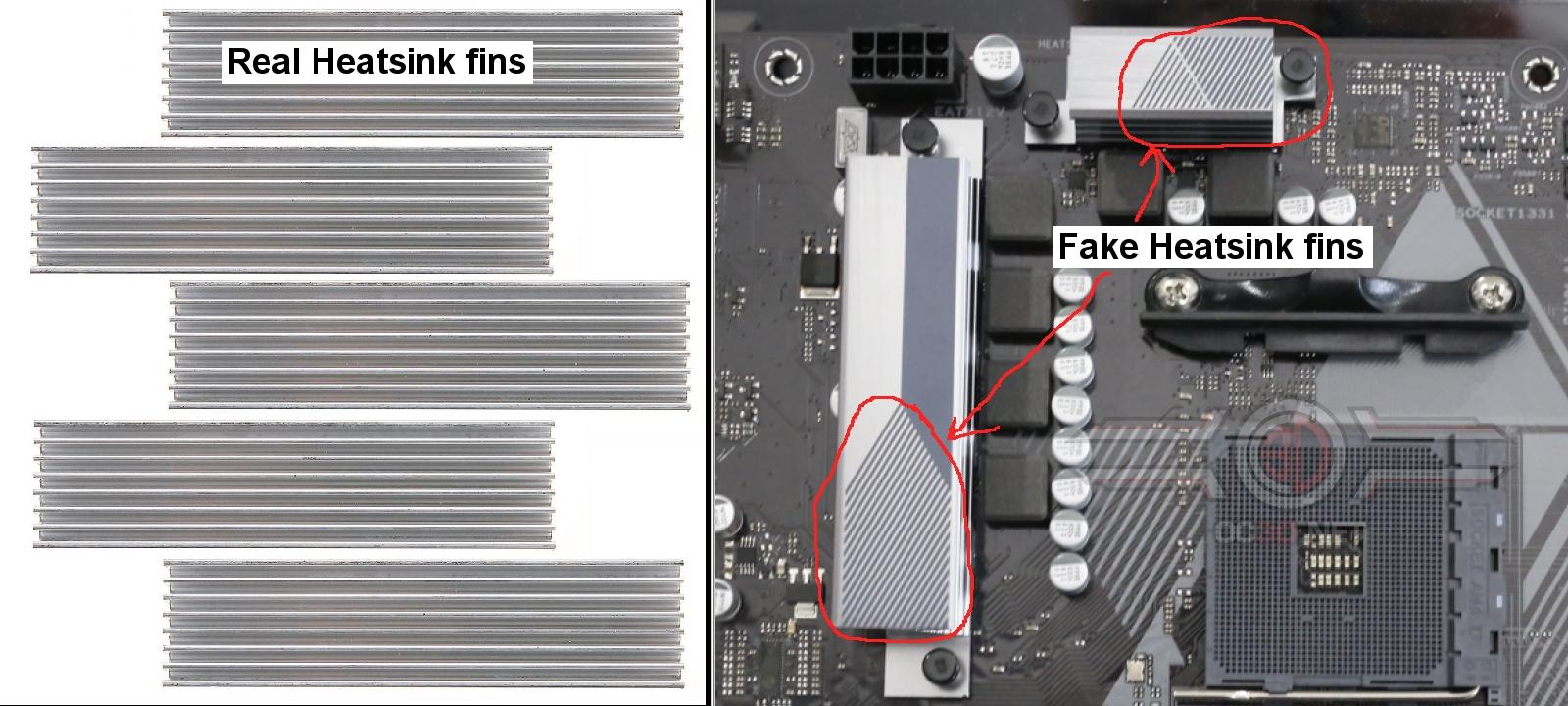

Buildzoid looked at a few B450 motherboards and like me was not impressed with the blocks on the VRMs. And it really looks like most of the VRMs haven't been changed at all from x370, despite Zen+ eating more power. I'm in agreement with Buildzoid's thoughts there. It's not like designing a heatsink requires complex trig to figure out that a tiny block with no fins will never dissipate enough heat, it's simple math and much of the work has already been done eons ago. You don't need to look any further than a small car amplifier to see how much surface area it takes to cool a VRM dissipating around 18 watts of heat, and I can say that cuz it's simple math.

PSUs are not that different from a VRM when it comes to efficiency vs power lost as heat. If it's only 90% efficient and pushing 150 watts to a device, then it is wasting roughly 15 watts as heat from being 10% inefficient. And that's for a good modern unit, many older PSUs were in the range of 60% efficient, implying they turned 40% of the power in into heat to deliver that 60% of power to the computer. 10% is very simple tho, cuz you can just move the decimal point around and have a good ballpark figure of how much heat needs to be dissipated by a VRM. So if an R7 2700x can chew through 180 watts doing Blender renders, the VRM will be producing around 18 watts of heat (10% of 180 is 18, it's super simple).

Now, back to that car amp. Well, I have just such a car amp that has been neglected behind one of my desks for 8 years now, and it's from the early 90's era, before car amp makers started lying through their teeth about watt figures based on some imaginary sound pressure level in a tiny box the size of a car. This car amp actually is only exaggerating based on the watt capacity of the chips in it, lol. And linear audio amps are almost always around 50% efficient, implying they put out the same amount of heat that they deliver to the speakers in watts. An 18 watt amplifier will produce 18 watts of heat.

And here we find our first problem with the fashion accessories Motherboard makers have been decorating their VRMs with the past ten years.

There is just no way in hell that tiny VRM fashion accessory will ever dissipate 18 watts of heat, given the size, and more importantly the surface area, of the amplifier's cooling fins that are designed to dissipate the same amount of heat on each side of the amp (each channel is making 18 watts of heat in that amp, and each channel is on one side of that massive heatsink). So half of that car amp is needed to dissipate 18 watts of heat, vs that tiny VRM block from a motherboard (sitting on the amp in the photo). This is the disparity many see. There is just no way the VRM blocks will ever cool a VRM supplying power to a Threadripper that's consuming over 250 watts, making the VRM dissipate around 25 watts. And TR2, as Buildzoid had correctly pointed out, will need way more than 250 watts, as even Der8auer tested via proxy using a 32 core EPYC CPU, more like 350 watts (that will be around 35 watts of heat produced by that VRM at around 90% efficiency).
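The move-the-decimal-point math above can be sketched out like this. The 90% efficiency and the 150, 180, 250, and 350 watt loads are the figures from the post. The second function is my own addition for comparison: strictly speaking, if the VRM delivers the load at 90% efficiency, the loss is measured against the input power, so the heat comes out a bit higher than the 10% ballpark. Function names are just for illustration.

```python
def vrm_heat_ballpark(load_watts: float, efficiency: float = 0.90) -> float:
    """Ballpark from the post: heat is (1 - efficiency) of the delivered load."""
    return load_watts * (1.0 - efficiency)


def vrm_heat_from_input(load_watts: float, efficiency: float = 0.90) -> float:
    """Stricter estimate: input = load / efficiency, heat = input - load."""
    return load_watts / efficiency - load_watts


if __name__ == "__main__":
    for load in (150, 180, 250, 350):
        ballpark = vrm_heat_ballpark(load)
        strict = vrm_heat_from_input(load)
        print(f"{load} W load: ~{ballpark:.0f} W ballpark, ~{strict:.1f} W strict")

    # The 50% efficient linear amp: 18 W to the speakers, 18 W of heat
    print(f"18 W amp at 50%: {vrm_heat_from_input(18, 0.50):.0f} W of heat")
```

Either way the conclusion is the same, the ballpark if anything flatters the tiny VRM blocks a little.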

The way I see it, B450 is going to be a disaster for production work or OCing, given what I've seen on VRMs so far. And to transition a bit, that car amp is designed to work in a place that doesn’t exactly have the best airflow, kind of like modern computer cases, lol.

I'm sure Jay had done that out of spite and to prove a point, as the multiple radiators were not getting enough airflow in that case to keep the computer cool. And I see it over and over again on forums where someone will say they're using water cooling, so a computer case with good airflow doesn't matter to them, then they get the parts and build the new comp, only to discover they do have a cooling issue. And ironically few look at how much fresh air the radiators are getting, they blame the fans or thermal paste, delid the CPU, swap out radiators for bigger ones, etc. Cooling really matters, and how something looks should not be a reason for making something that overheats when it's used.

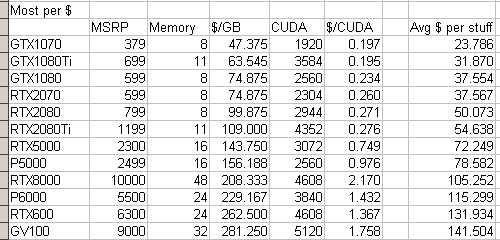

GPUs nearing MSRP, around 'Two Years Later'. It's well past launch date, and MSRP for old tech kind of isn't worth it. Especially with new cards on the horizon. In fact, I would almost say, this generation of cards is the one that has been priced over its value for most of its availability for gamers and anyone that can't just write it off as a business expense. It's like asking an inflated price for last year's car model even tho this year's model is only a month or so away. At this point, it should be under MSRP for old stuff. My wallet remains unavailable for such price stupidity (and that includes memory as well).

A 16GB memory kit (2x8GB) should go for under a hundred dollars, and a 32GB kit (2x16GB) should go for well under 200 dollars (as they did when they were new, before things went stupid). It's not even new tech, DDR4 is an ancient relic compared to the RX5, Vega, and GTX10 GPUs.

In other news, I started learning how to use a birthday gift from my brother, and in the photo is the noise on the x370 Gaming 5 from Gigabyte Vcore VRM. In all that is not bad at all, even tho it's only an R7 1700 and not the much more power hungry R7 2700x that I feel many B450 boards are not up to the task of supplying power to. I mentioned before we would have a little chat about what it takes to make a phase for a VRM vs what is between Marketing Wank and straight out Marketing lies.

Rant aside, I feel Buildzoid did go over what is a VRM phase and what is not very well in this vid. Remember that How many phase thing.

Apparently, I forgot a variation in my How many phases funny. Well, it's a long vid, tho if you have the time to glean through the explanation section, it is well worth it. I won't go so far as to say it's "required watching" unless you're interested in what makes the widgets in your 3D editing rig work, and Buildzoid also has other great vids on how the doohickeys do their stuff, the "in paint" series of how it works.

So that has been what I've been up to the past few days in a nutshell. Oh, just some cleaning and fit-testing of some stuff as well, nothing bad.

All this research and it comes down to randomly sticking household items in your case!

lol, yeah, it is sort of like that isn't it. sticky notes to keep fans from sucking hot exhaust back into the case, diced up plastic coffee cans to guide the air to the stuff that needs the cooling, etc. It's sort of in line with some other chat and something I've noted of late. I really do appreciate the efforts that went into that Grammarly thing, and it has been really helpful despite some flaky text field behavior on web pages, and one other minor thing.

You really need to look closely at what Grammarly is suggesting, as sometimes it is suggesting a change that completely changes the implications of what was typed into something it is not or something that just makes no sense at all, lol.

It has been an interesting week, with lots of stuff going on. I've been eyeballing an idea for a mini-compute box, tho as Buildzoid had pointed out, there really isn't much in the non-full-size board offerings with VRMs that would put up with that kind of workload. And VRMs keep looking worse and worse, as others had noted. The old z170 boards had way more VRM phases for the 4 core processors than the newer boards have for the 6 and 8 core CPUs that need way more power. And they all have iffy cooling at best. Most are VRM fashion accessories, and some of them do nothing at all for cooling, lol.

The VRM was at 103c and the VRM block was stone cold in this 9-minute snippet (simple 5GHz OC with a water loop, not even XOC clocks and volts, and the rad was pulling air across the thing), lol. I am arguably an artist myself, and I take offense at what they put on VRMs. Hell, I could design a better heatsink than them...

Oh, I have made better heatsinks already. Another note on that, Intel lists that 8086k CPU as a 95 watt CPU, not a 240+ watt CPU, so the issues go far beyond not comprehending thermodynamics, lol. CPU makers are lying about how many watts the CPU needs and motherboard makers are not providing their VRMs adequate cooling for what the VRM is able to handle. This is my biggest problem with what motherboard makers are doing. A single phase worth of FETs and inductor can easily cost around ten dollars per set of components strapped on to each PWM signal. A better heatsink that can actually dissipate the heat can cost around the same price as two phases of components. They are literally throwing away money adding more components to a VRM that we will never be able to use to its capacity because they refuse to put an adequate cooler on the thing. A real heatsink would cost less than the components added to make them fake 8 phase VRMs.

OK, perhaps I was a bit too harsh in the closing of the former post, and I didn't really look into what I spent to make that Amateur Cooler that has a lot of ugly rough edges on it. If it was professionally done, it would have much cleaner edges and look a lot better than a cobbled together mess of metal parts. It does have a bit of a Steampunk look to it that I do appreciate, so it will stay on the Gigabyte x370 Gaming 5. First, a few funny Grammarly wrong-suggestions to hopefully lighten the mood some.

Thinking "about" was what I intended, as thinking in line with, Implies that I do not have any differing thoughts at all. I'm not so sure with that "Thinking similar to" one, lol.

"When the VRM can't 'KeepS' the voltage stable enough" since when is that supposed to be plural, lol. OK, so, how much does it cost to get a VRM cooler made. Well, there is the stock metal in blocks or extruded form, then there is the machining to form the final shape via crosscuts or stamping. Well, what did I pay for the stock metal to make the base of that heatsink, less than ten dollars if you consider that I could make two bases from each bar?

And that isn't even bulk, that is a one-off single item, that inherently costs way more than if a motherboard maker ordered a few thousand bars cut to exact length. well, the bar needs to be trimmed down and shaped...

I don't have prices for each machine cut/stamp this would need to pass through at a real machine shop, cuz I did this by hand on my porch with a hacksaw, file, and a Dremel. If I look at what Gigabyte put on the x370 Gaming 5, each cut that is at a different angle probably is another pass through a machine, while all cuts at the same angle probably could be done at once in the same machine.

Just looking at the shape, it looks like at least 6 different machines on the assembly line to make all them unique angle cuts in the extruded stock to make that thing. That or six passes through a single machine after adjusting the angle of the cutter for the next cut. Each step on the assembly line adds more money to the cost of the part. I can't tell you exactly what it is for that one, but I can say for my heatsink it took a few hours per cut to mark it out, cut it, and deburr it, etc. Mostly because this was not something I was making a few thousand of, and it was the first time making this thing. Well, that was also for just the base to lift the heatsink up over the chokes. The actual heatsinks were not that expensive.

And they were leftovers from another project from my Odroid XU4. so, we are up to what, (7.95x2) for the heatsinks + (13.81/2) for less than half a copper bar = 15.9 + 6.905 = 22.805 USD in parts to make one heatsink. Excluding the spare bit of copper bar that is more than enough to make another one, and shipping and handling that would also scale with quantity orders. I get the feeling, that a one-off extrude and milling to make the original thing that was on the board would cost way more than that, tho in bulk may be closer to the same cost as what I did also in bulk. So, cost alone can not explain why smaller boards and more affordable boards have such horrible coolers, I'm almost thinking that the Fashion accessories may actually cost more than a real heatsink that would allow a small board to keep its cool without the need to double up components to reduce the heat output of the thing. Because not only are they paying for the milled extruded blocks, they are putting metal stickers with designs and even RGB lighting on these things.
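For anyone who wants to check the parts math above, here is a minimal sketch. The prices are the ones quoted in the post; the function name and the assumption that one copper bar yields exactly two bases are just for illustration.

```python
from decimal import Decimal

# Prices quoted in the post (USD); shipping and handling are not included.
HEATSINK_PRICE = Decimal("7.95")     # per finned heatsink, two are used
COPPER_BAR_PRICE = Decimal("13.81")  # per copper bar

def parts_cost(heatsinks=2, bases_per_bar=2):
    """Parts cost of one VRM cooler (hypothetical helper).

    Assumes one copper bar can be cut into `bases_per_bar` bases,
    so only that share of the bar is charged to a single cooler.
    """
    return HEATSINK_PRICE * heatsinks + COPPER_BAR_PRICE / bases_per_bar

print(parts_cost())  # 22.805
```

Decimal is used instead of floats only so the cents come out exactly as they do on paper.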

Now, in closing, I have a few thoughts about B450 and they are not really for high end Rendering systems. More like a Home entertainment PC or smaller single GPU box. The HTPC has me worried as that is an application where silence is paramount. As for 3D rendering, I have doubts that a second dedicated CUDA rendering/compute card will be supported by the boards.

Now I'm not going to go so far as to say, "I only get the best as everything else is just junk". I know how unhelpful that is for people like me that are on a fixed income, and the idea of getting a 500 USD motherboard just to put a 2400G in for an HTPC is beyond ludicrous, it is simply beyond my budget to do something that stupid in all honesty. What is needed is a B450 mATX board with a VRM cooler that will not throttle the CPU in a low airflow HTPC box. As for x470, well, that will more than likely end up in a CUDA compute box, and will be pounded a lot with high watt workloads, again, giving it a need for a good VRM cooler.

First one is correct grammar, but I don't think yours was wrong either.

Second one got flagged because you wrote "cant" instead of "can't" so it thought it was a noun not a verb.

That is interesting, as I do recall times Grammarly would flag "cant" as being wrong, and I guess sometimes it doesn't and gets confused. As for the "thinking about" thing, I'm not sure myself. It had me thinking for a long time as to how I could possibly have said that and implied the same thing without getting too wordy, lol.

Sorry for the delay, I had been lounging a bit, watching vids, and thinking about stuff. I have an idea to try to describe why temps over 100c are not so good for a product, tho I'll need to sketch up a few things for that. I had also stumbled across a cool 3d editing build that I do sort of like, I would have built it differently myself, tho for what Andrey does it is a nice system and looks very good. At this time I won't say yea or nay to any of the other vids, as I only watched the nontechnical Q&A aside from this cool build vid, the channel appears to have good content so far. It's most interesting to see that I'm not the only one to have a system that most others that only play computer games scoff at the apparent lack of a need for this or that while saying that we are the less than one percent or minority, lol. I totally get wanting three GTX1080ti cards for CUDA rendering and a fourth GTX1070 for just the monitors, something that I've been called into question for even considering, putting two different types of cards into the same comp, and not just plugging the monitors into the more powerful card. I totally get the triple monitor thing as well, because sometimes when a window is full-screen, it's nice to be able to have some desktop on another monitor to have stuff on, and especially for things like youtube that has no windowed fullscreen (it's all or nothing, and even covers part of the vid with them bars at times, lol).

And there is something else mentioned that really piqued my interest, that I had considered possible configurations for not too long ago. A dedicated render box, not quite a tiny HTPC, yet not quite a file cabinet workstation sized rig either, just something packed with a lot of computing power and a lot of cooling for that horsepower. I don't even have a 3D sketch to show what is in my mind, because I'm just not convinced that motherboards today could handle that. There wasn't much on them render boxes, and I can understand why. One other thing I thought was cool, was a cool firefly in a jar effect (that's CG) from I guess a few of his vids. (this screen cap from his Q&A ep1 vid)

I had thought about making something kind of like that for my desk. Two sets of 3D LED arrays wired up to be a time domain Spectrum analyzer, one for the left and the other for the right channel. The forward most wall of LEDs would be the most recent spectrum amplitude of the audio playing, with older and older ones as the layers go back into the 3D cube of LEDs. It would be 16 wide, 8 high, and 8 deep each instead of 8x8x8 as in the pic and vid example.
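Just the "time domain" part of that idea, the layers marching back into the cube, can be sketched roughly like this. It assumes some analyzer (not shown here) already hands over 16 band amplitudes per frame, each scaled to 0 through 8; the class and function names are made up for illustration, and this is for one channel only.

```python
from collections import deque

WIDTH, HEIGHT, DEPTH = 16, 8, 8  # bands wide, LEDs high, layers deep

def make_layer(band_levels):
    """Turn 16 band amplitudes (0..HEIGHT) into one wall of on/off LEDs.

    Row 0 is the bottom row; a band lights every row below its level.
    """
    if len(band_levels) != WIDTH:
        raise ValueError("expected %d band levels" % WIDTH)
    return [[1 if level > row else 0 for level in band_levels]
            for row in range(HEIGHT)]

class SpectrumCube:
    """One channel's cube: layers[0] is the front (newest) wall."""

    def __init__(self):
        dark = make_layer([0] * WIDTH)
        # maxlen makes the oldest layer fall off the back automatically.
        self.layers = deque([dark] * DEPTH, maxlen=DEPTH)

    def push(self, band_levels):
        """Show a new spectrum on the front wall and shift the rest back."""
        self.layers.appendleft(make_layer(band_levels))

cube = SpectrumCube()
cube.push([8] * WIDTH)  # a loud frame
cube.push([0] * WIDTH)  # then silence: the loud frame is now one layer back
```

The expensive part, turning audio into those 16 band levels, is deliberately left out of this sketch.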

I never built them, as I figured out rather quickly that something like that would be incredibly difficult for me to make actually work, not to say the parts would cost way more than I was willing to spend on a "for looks" thing. The spectrum analyzer and time domain logic stuff would make the things cost a few hundred dollars each, and the spectrum analyzer part would be so mathematically intensive, that doing it in software would require an equally expensive CPU to run it on, for each one.

ugh, more hot miserable days, and only getting half thoughts drafted out, lol. well, for the moment while I'm fussing with the map for something I'll mention something that has been on the back burner for a very long time. Making maps can be tedious, and normal maps from scratch are even more tedious, lol.

I made the mistake of trying a simple block that wasn't even a power of 8, and now I'm making it again from scratch because of that for a standard 1k to 4k map, lol. Now GIMP is really cool for many things, however, this is the one place that the good old Windows 98se MS Paint really does a better job. Especially when I want a single pixel to be an exact value and the ones around it to remain untouched. That is where ALL the fuzzy brush drawing apps fail miserably. So here I am using GIMP to look at what RGB value I used for each Hypercube face so I can enter that exact value on exact pixels in Win98se MS Paint, lol.

I wanted to try some other shapes, however, it has just been too miserable the past week for that kind of brain twisting thought, lol.

ok, so I had an interesting chat on discord about a vid that Buildzoid came out with regarding 'safe VRM temps' and the life expectancy of capacitors.

Some feel strongly that a consumer board is not meant for 3D rendering or Video editing simply because it can use more than 50% of the CPU for more than a few hours a day. I can sort of understand the point, even tho I still feel that if a motherboard is advertised to support a CPU, it should be able to run that CPU at the CPU's advertised performance level for the expected life of the motherboard, without being told that I can only use the CPU for so many hours a day or only use a small portion of the CPU's potential stock performance, lol. And in a way, it relates to something else that I had been looking into for some time. First a fun 3D break tho.

I don't think I had done any 3D renders of FWSA Katie even tho I had purchased that back during the holidays. And Fisty did make a really cool Spike thing. ugh, wrong button, lol. ok, experiencing some technical difficulties as well, lol. Time for some coffee and to try this again, lol. OK, there we go, I wonder what was wrong with the other jpg, hu, in any case, TBC (after a cup of morning coffee), lol.

*1, ah, Katie is not an elf, they are ... Ah, ok, a mix of ears, lol. 50% P3D Mylou, 50% Teen Josie '2' ears shape, and 25% Mika8 ears. Wow, lol.

And given that cap vs temp derating curve in BZ's vid, I may have just gotten an even worse impression of 'Gaming' boards with VRMs so bad that most will say you can not expect them to survive being used for heavy rendering tasks. As if 'Gaming' is somehow supposed to be better than normal everyday PC stuff, lol. In any case.

ok, at the risk of being flooded with unsolicited advice to get this or that motherboard and computer case, even tho what I want to do there isn't anything at this time that can do it. I have already looked around and the stuff just can't do it. However, 3D land is not bound by only products that are available, so I can instead look at the tech and craft a box around what is possible and not be limited by whatever products are on the market today. here is what I have in mind for a fictional character.

Sandy has this empty cube spot on her desk, where the monkey head is, and I want to stuff a tiny box there. I want this box to have a 32GB Tesla V100 card for Iray renders, a good GTX1070 for the 8GB and minimal watt combo, even tho a lower watt 8GB card would have been nicer for that. And a nice 8+Core CPU for other stuff.

OK, perhaps that spot on the desk may be a tad small for all that, however, does it imply that one must get a mini-fridge size computer just for a secondary compute box, I think not.

just a smidgen bigger and it can work, and 4x 120mm intake fans should be more than enough even running at more tolerable noise level RPMs, it need not be a Screaming Banshee.

even with a nice screen on the front, I think this would be a nice little box. Now back to the former lead-in post and thought about available motherboards. To the best of my knowledge few if any 'Consumer grade' boards have enough PCIe lanes to feed a graphics card and a Tesla V100 CUDA compute card. And as has been hinted at by Buildzoid for some time now and me applying that to 24/7 Heavy rendering workloads, there are no VRMs that would put up with that, aside from possibly one board.

Sadly, the 16x slots are not laid out such that one could use two GPUs and a 3rd Storage controller/m.2 card in that board. The VRM is impressive looking, and it looks like it may have enough SATA plugs to work. I still would have preferred the second 16x slot to be one further down allowing the use of more than just two dual slot cards. The other angle is the i9 is stupidly expensive compared to the CPU power you could get with a similarly priced Threadripper, lol. So in the end, this is still a pipe dream, that many would argue a mATX board simply is not meant for this kind of prolonged workload.

to the naysayers, I would argue that it has indeed been done before, and it is not impossible to make a solid small motherboard that can survive years of the CPU being pegged at 100% loads. CRAY had done it once already, and it was Air cooled, no Phasechange or liquid cooling.

Despite the craze of late with disposable boards with VRMs incapable of more than game loads at less than 8 hours a day, something like this should not be impossible to make.

As I was going through some stuff I noticed that there was a lot of updates to stuff, a lot that I haven't even had a chance to look at yet.

One that did catch my eye was one that I recall coming across a few times now, and I would at the least like to say thanks.

It has been so long since gen7 came out, that I simply do not even remember how many tickets fell off the back of the zen desk, got kicked around on the floor, only to be lost somewhere behind the zen desk.

I will confirm that the fix works and Olivette's eyes now work perfectly with the pose controls, Thank you very much, Silver. I can understand if the memo got lost, forgotten, or simply never made it past the floor behind the zen desk, because it has been a Very long time, lol. I just hope that it doesn't take almost two years to fix the eye rotation on another figure from another PA. Speaking of, Let me try to say something nice about another PA and not dwell, lol.

Marcius, I don't know whatever checkboxes were checked or not checked in the ERC-Freeze-Save-Voodoo-thing. The eyes on Kikyou work perfectly with no pose dial breaking eye rotations. The separate head and body dial is greatly appreciated. The 3delight mats look fantastic and do not take bloody ages to pre-compute, and she is very nice looking. Thank you for figuring out whatever it was that broke the eyes on Rapha (alien dial) and not making the same mistake again on Kikyou.

FYI, them are not Kikyou's ears, she does not include elf ears. I used Vernea's ears for her.

ok, having some fun with dForce Sweet Summer Outfit, and some fooling around with shirt designs and elf ear combos. Sa Sewa far left is the only one that includes ears, the rest are not elves, before I started spinning dials (AKA they do not include Elf ears).

Seeing as I got a couple of more DES figures I figure I could bring back Rosalind in the 2nd gen TR for Buildzoid shirt. As for the other three, lol.

left is DES Lustra in the "Don't get Excited, it's not Ln2" shirt. The middle is Des Apryl in the "a junk VRM + RGB is still a junk VRM" shirt. and right is Kikyou in the Darth-Buildzoid "Let the OC flow through you" shirt, lol. Sadly, only the VRM nuke (in white instead of the black as shown) shirt Sewa is wearing can actually be ordered at this time, the others are just for fun designs.

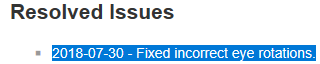

I can understand how some would say "Heatsinks are ugly" after what I've seen over the past ten years and some. Even I find some of them repulsive looking, lack of functionality aside, some are ugly. There is the one designed by committee that has multiple different looking things all mashed together onto the one board, that results in things getting in the way of other stuff. And in one case, if the board was mounted upside down with the PCI slots at the top of the computer case, the coolant in the heat-pipe would collect down at the CPU's VRM area with not enough wicking to bring the coolant back up to the chipset, and the chipset paid the price of that designed by committee, lol. Ironically, a simple 9mm tall 40x40mm pin-fin heatsink from Aavid/Boyd would have saved the chipset, however "the committee" decided the chipset needed a copper plate decal on top of the chipset to 'look cool' I guess, lol. The clash of drastically different heatsinks on the board did look rather repulsive to me.

The Decibel War VRM cooler, well. I will admit the pipes and unicorn vomit colored wires from the VRM blocks are a bit of a clash. As for function, well it comes down to manufacturers putting the absolute minimum cooler they can get away with on their product, and by the time it ends up in a house that is more than likely over 20c, the fan needs to spin at max speed just to keep things from turning into lava. That's why Blower style cards have such a bad rap, a 2 slot cooler simply is not going to keep more than 110 watts of heat source under 60c with room temps over 20c, and it is exacerbated by most computer cases that have horrible airflow for comps that burn more than 200 watts, lol. The others, well, I mostly dislike how long the path is between the chips producing the heat and the tips of the cooling fins, I sort of doubt that most of the fin length is actually doing much cooling. And there is the other one that has become more common today, the heat-sink that deflects air away from the part of the heat-sink that would otherwise be really effective in cooling things down, lol.

Since then, it has become common to essentially use one of two rather ineffective coolers. One is hiding the fins where they are not visible, effectively putting them where there will be little to no fresh air to cool things. The other is to just not have fins at all, and something that has the absolute minimum surface area is more Thermal mass rather than Heatsink, lol.

After seeing what has been put on motherboards, I can understand why so many are opposed to something having 'visible cooling fins', or more correctly feel that Heatsinks are ugly. There is a fine line between what is a heatsink, and what is more a work of art, and the past decade and change have been more art than functionality. Real heatsinks may have curves and bends, however, it won't have miscellaneous things hanging off of it blocking airflow, or long winding thin paths of metal the heat has to travel through to get to the tips of the cooling fins. In all honesty, a true pure function heatsink does not look that drastically different from the featureless blocks of metal they put on VRMs today.

I feel there needs to be a bit of understanding on both sides. People need to understand that a computer will produce heat, and will need to get rid of that heat or it will die, this is just the way electronics have always been, hot things need heatsinks. It sort of makes the Tempered Glass craze sort of funny, as people would get a Tempered Glass computer case to show off the guts of the computer, that they think looks ugly because there are heatsinks on it, lol. Motherboard makers need to stop trying to make a fashion statement with the VRMs, and come up with something that can actually keep the VRM cool, and maybe even give people an option of style. There are honestly so many ways to make a simple extruded heatsink that can actually cool and look good at the same time, without needing a 40mm fan strapped to it, sounding like a jet taking off in your house, lol.

Rave - "a fish has more fins than that heatsink" (AHOC Discord)

In any case.

It may take time tho, as Marketing and management appear to be hell-bent on eliminating any hint of the VRM running cool, and for them, capacitors that die sooner than later is just potential sales of a new product I guess.

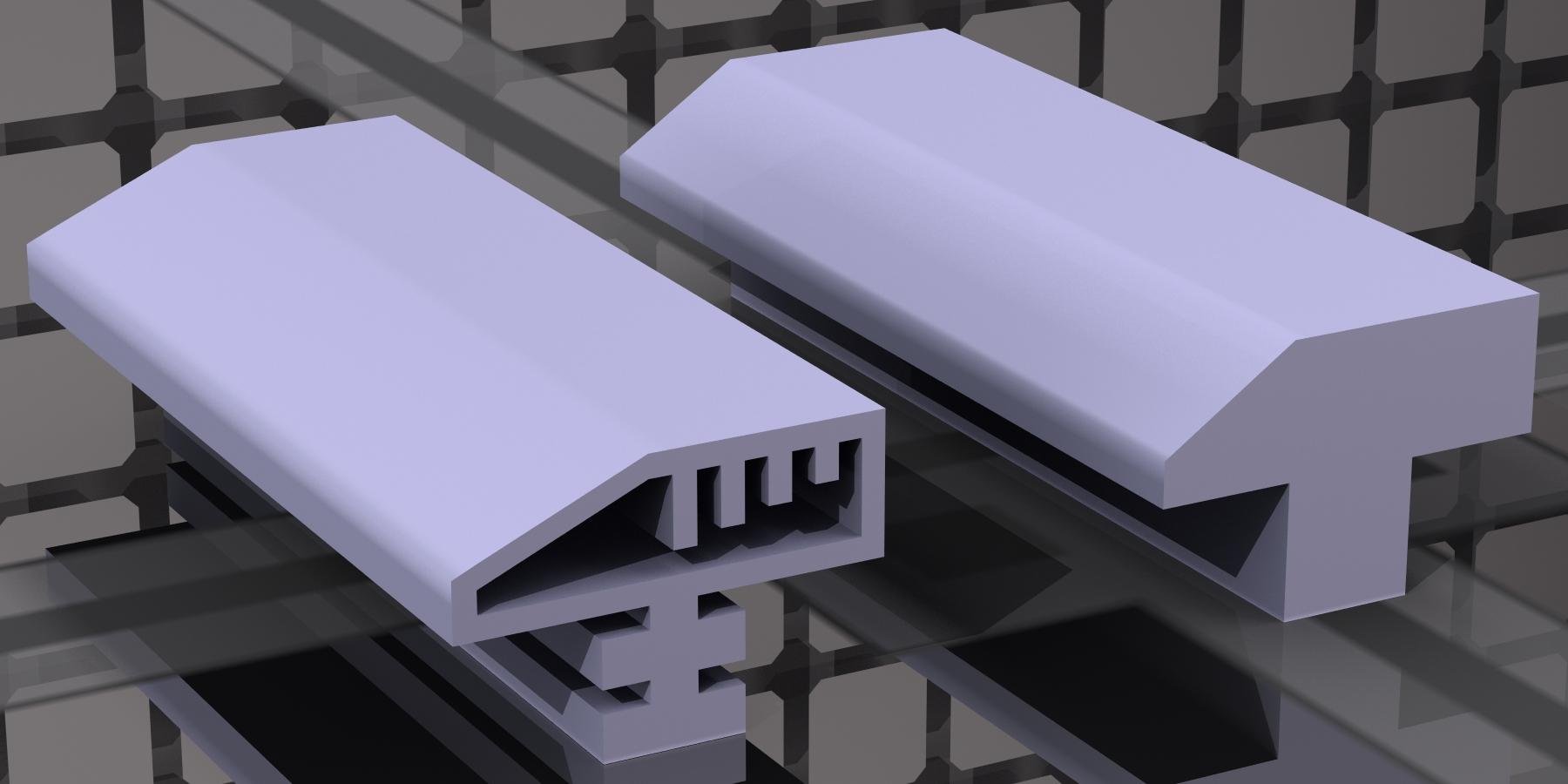

I will continue to poke and prod, along with Buildzoid and others, and play with making my own heatsinks. FYI, them 4 blocks I made in hexagon that Fwsa Sandi is looking at in the last two renders, are based on the same overall dimensions of the Vcore block that was on the x370 gaming5.

I should preface this by pointing out that the website on the right of a screen cap is only using the diagram to show what fans do for cooling a computer, and they do that well enough. My point is that the design of modern stuff is still being made to that archaic layout that was meant for an entire computer that uses less power than most modern graphics cards consume by themselves.

I look at the few VRM blocks that have anything resembling fins, if only a groove or few cut into the VRM block, and can only guess it was meant to only be used with the stock Intel downdraft CPU cooler in the archaic first generation ATX form factor.

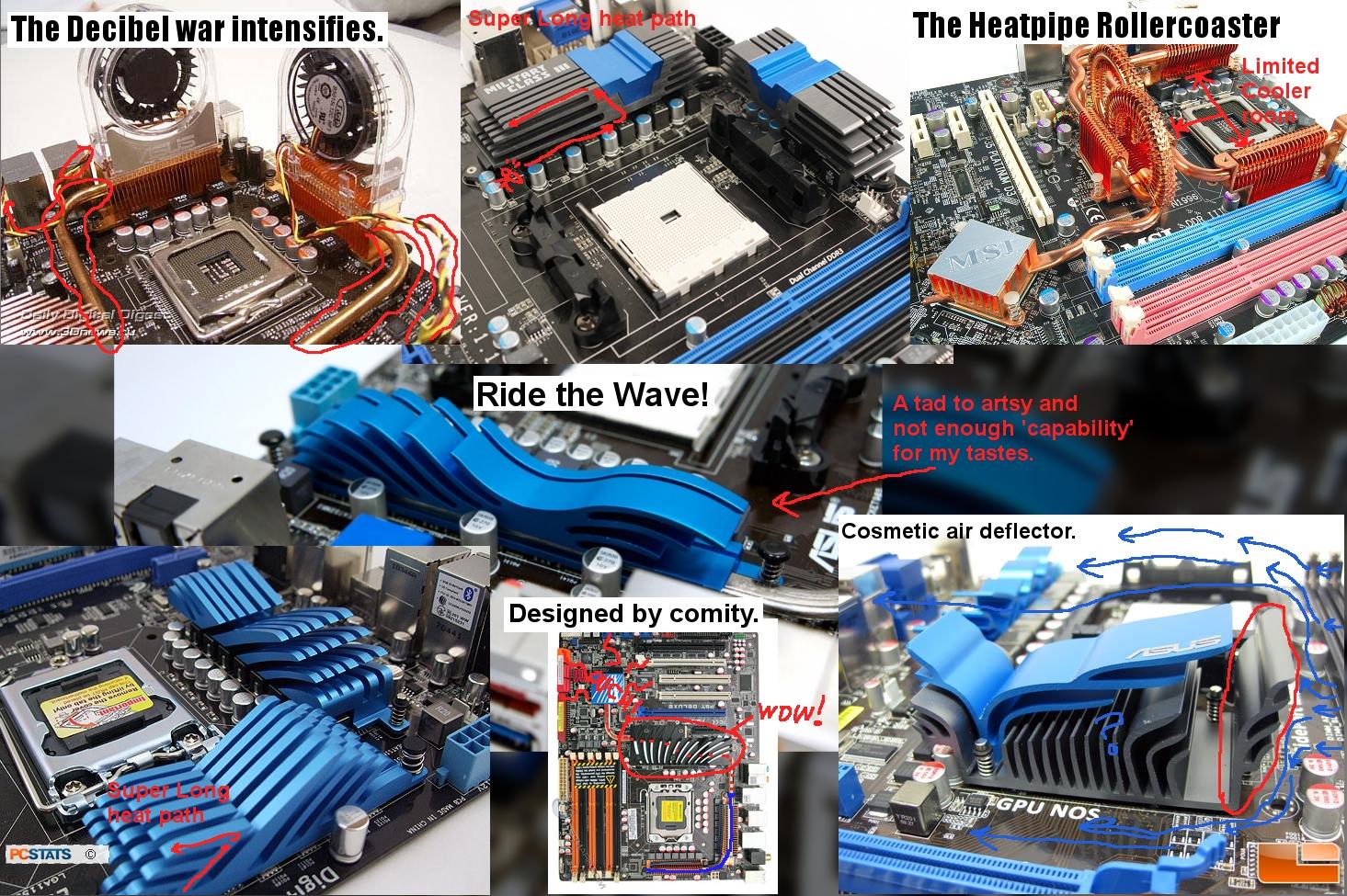

It has been a very long time since power supplies were above the motherboard in a computer, and a tower cooler or CLC is not going to force air down at the motherboard around the VRMs. In fact, most of the coolers are going to guide air up away from the VRM block.

The rear fan may not help matters either, as it effectively moves the rear exhaust further up and closer to in front of one of the VRM blocks, effectively bypassing it almost entirely.

And a CLC pump block combo is a lot taller than most VRM blocks, so airflow will be further pushed away from the VRM. From this alone, it is clear the VRM cooler is at a major disadvantage with anything other than a stock CPU cooler, and we know how lacking the stock cooler can be for the CPU. So most will put a tower cooler or CLC on the thing. And most cases do offer at least mounts on the top of the case for fans, even if adequate room for the fans was not accounted for by the case maker, CLC clearance being even more of a lacking commodity in modern cases.

To nip this in the bud, I do not feel that the forward most fan mount should be in use, or even open to exhaust air that has not cooled anything, unless it is on a 3 fan rad in the top of the case. Allowing air to exhaust at the front of the case is literally starving the motherboard of air to cool the chipset, VRM and the rest of the stuff on the motherboard, including the Graphics card. It is better to block off the forward fan slot (with anything, sticky notes, cardboard, construction paper, anything) and let the air at least get back to the motherboard before venting it out of the case.

Even a fan in front of a tower cooler, will only decrease the amount of air that the cooler fan can pull in to cool the CPU.

so the further back the top fans are, the more likely the air can actually get through the CPU cooler, and cool the motherboard.

As for rads, well, assuming the case has room at all for them, and is not just sliding the fans over toward the side panel, at least the air is cooling the rad that is, via the liquid, cooling the CPU and/or GPU.

it would be nice if more cases gave the extra 55mm above the motherboard for a rad to actually fit, and allow the intake fans to pull in air that is closer to the motherboard rather than cooling the Tempered glass side panel, lol.

So, given that, it looks like the blocks should be made to have airflow across the top of them from the CPU socket side or air flowing along the length of the VRM cooler. And unless computer cases change drastically from current trends, the airflow will not be stellar at the VRM coolers. Now I attempted to bend over the fins on one block to catch the air from one of the two directions, however, I ran into a brain cramp just making the mesh do what I had in mind. So that is still in the works, and so much other stuff has happened the past week that I have only had time to think about how to word the posts for. Much to be doings, not much time.

A bit of a side ramble as I have morning coffee. I'm not sure why for some time now the page refresh thing has been broken, and sometimes Grammarly needs the page refreshed to start working, and the refresh has been broken for some time. I have not gotten image upload preview thumbs when composing a post, and that makes it a tad difficult to keep track of what images had been uploaded yet or not. And that refresh thing appears to get stuck on the stop loading page thing that does nothing when it's clicked. I'm not sure if Daz is trying to artificially inflate page views, or if something is not agreeing with Firefox on this site alone (Nothing else is broken like this).

As for other things, I've had this nagging eh feeling about the test chamber for a very long time. I initially set up a displacement map to add depth to the features of the floor tiles, however even in 3delight that displacement thing can break easily as soon as you ask it to make features of substantial size, like the one inch deep seams between the floor tiles, lol. I ended up ditching bump and displacement entirely in favor of just surface optical/color maps for render performance reasons. Even with a meager 256x256 pixel tile size, it looks ok, however, it could look a hell of a lot better for close up things, case in point in the former post.

I would love to just do the floor in geometry, however, there are a few limitations with doing it that way. One being an ease of use thing, If I need the test chamber bigger, it's easy to just rescale the floor and set the texture size of the floor tiling appropriately. With geometry, to the best of my knowledge, there is no easy way to just arbitrarily tile geometry with a set scaling for a floor. If I want to scale up the room from 40x40 foot to say 120x120 feet, I would need to duplicate the floor, then try to place it in a metric workspace to be on a proper British imperial spacing, Irrational numbers don't mix well with computers, lol.

I guess I could try to make the tile a 30cm size instead of 12 inches, however, that produces its own can of worms, especially for me. I've yet to get a metric tape measure (I simply have not had the money to order one), and my mental grasp of metric is limited to what my micrometer will open up to (15cm). Fitting that into a 40x40 foot room would be a major pain, not to say the geometry requirements. I am painfully aware that many on this forum are not major film special effects companies that can just write off a multi-thousand dollar RTX8000 card, so the meager 4GB limit is still a major limiting factor for making this test chamber in geometry for Iray, not that I ever got the lights working for Iray yet (another rant for another time, lol). So as good as I think the floor would look made with geometry, making it will be insane at best.

The 40x40 foot room would require no less than one thousand and six hundred individual geometry tiles glued together one point at a time. I don't even know how much memory that would take up because I only made the one example tile so far. lol. It would be nice, and at the same time, I'm not sure how useful it would be for a test chamber.
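A quick sketch of where that tile count comes from, and what it turns into for the bigger room mentioned earlier. It assumes square tiles that divide evenly into the room size; the function name is made up for illustration.

```python
INCHES_PER_FOOT = 12

def tile_count(room_ft_x, room_ft_y, tile_in=12):
    """Number of square tiles needed to cover a rectangular floor.

    Assumes the tile size divides evenly into both room dimensions,
    so there are no cut tiles along the walls.
    """
    per_x = room_ft_x * INCHES_PER_FOOT // tile_in
    per_y = room_ft_y * INCHES_PER_FOOT // tile_in
    return per_x * per_y

print(tile_count(40, 40))     # 1600 tiles at 12 inches
print(tile_count(120, 120))   # 14400 tiles for the 120x120 foot room
print(tile_count(40, 40, 6))  # 6400 tiles if they were 6 inch tiles
```

It says nothing about memory use, that would still need a real test with the actual tile mesh.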

The walls being another story entirely as they're far more complex in reality, lol. I may just leave this on the back burner as I go back to looking at VRM coolers. So many ideas, so little time, lol.

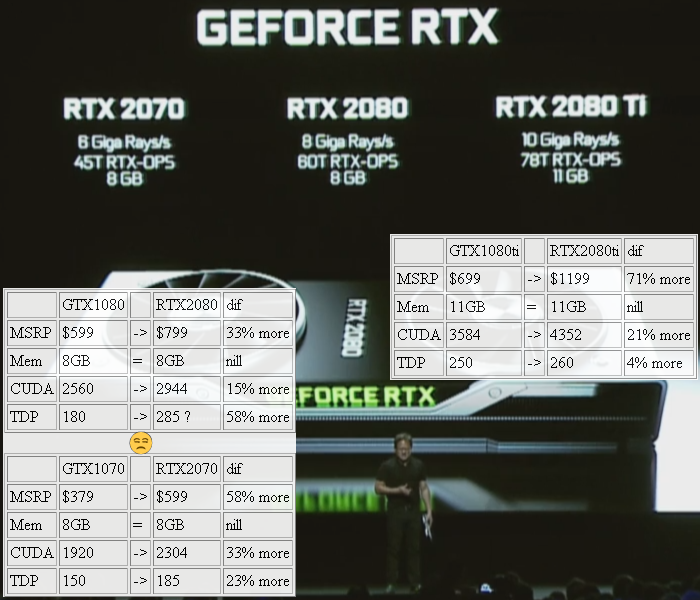

I have a similar feeling about the new RTX lineup, as it really doesn't look like a major upgrade from the 8GB and 11GB GTX10 cards. And last time, it took a few months after the GTX10 launch before Daz Studio even supported the Pascal GPUs in Iray. So I would advise against preorders without benchmarks, unless you really want a card you will not be able to use till drivers and render engine plugins are made for the thing. And given the memory limits, it really looks like a sidegrade at best right now anyway. Also, that Glorious Raytrace thing that's been a buzz around the RTX cards, is not explicitly more ray trace calculations, it's a new filter that lets the card use fewer rays to paint a surface. Again, not something that sounds like a major upgrade for Iray, so I have a lot of doubts the new RTX cards are any better at all for Iray.

What about instancing the tiles? How does Iray handle that?

an educated guess, based on how bump and displacement maps don't work so well in Iray, and remembering something vague about AM having major difficulty getting LAMH working, I really doubt it will be as simple as scaling and setting a shader tiling number. I've never really fussed with instancing outside of the Jurassic forest scene in 3DL, and even that was a bit finicky (it only worked with specific Daz Studio Versions). And it also comes back to try to place it in a metric workspace to be on a proper British imperial spacing, Irrational numbers don't mix well with computers, lol. It only adds a lot more work, than I feel would be worth it.

Oh, and there was the "can't see that tree" in front of the cameras in the view field thing, lol. Only the original instancing thing, is visible in the view field, making placing stuff around the instanced copies a bit of a PITA.

That must've been just the way that product was set up. By default all instances are visible.

Just how much precision do you want? An inch is 2.54 cm in everyday use. I can't see any gaps between 1 inch cube instances when I translate them one by one by 2.54 cm. And DS can do the math for you, like you input 2.54*4, *5 etc in the numerical field (here I did this in Z translate) and it calculates everything right away.

Or you could try this free plugin (works fine with instances, I just checked)

http://widdershinsstudio.uk/widdershins-ststacker-plugin-for-daz-studioacker-plugin-for-daz-studio/

OK, the one in the link looks like it has an easy to use simple click interface tool. In all honesty, I sort of want to just use one or the other system, without multiplying and adding multiple times to get the XYZ for the copy of something. Daz is by default metric, and the conversion by itself isn't too bad, it's the extra step on top of adding x to the former total and then converting it all that gets a bit tedious.

Tho looking at the test chamber last week, I did start to wonder if in non-US areas of the world there is a nice round number tile size. In some areas, there are normal 12-inch or 8-inch tiles or 6-inch, and if that is just left in inches it's simple maths that I don't need to pull out the calc for. In CM that just becomes a mess rapidly for placing tiles starting from world center, lol. Seeing as so many others have said that metric is just 'easier' in multiples of ten, I would guess they don't want to be adding up irrational numbers all the time, like me, lol. 12 inches + 12 inches is 24 inches, it's simple maths. Whereas 30.48 + 30.48, well now I need to grab the calc because that involves decimal points and a lot more digits than my poor little mind can juggle all at once (60 point... Brain melts, lol). And to be fair, juggling 2x4's isn't any better, because they end up being 1.5 x 3.5 inches after being planed and sanded at the mill, so figuring out how many will fit in a given volume is a bit of a brain twist.
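One way around the add-then-convert juggling is to count in whole tiles and inches, and only convert to centimeters once per position. A minimal sketch of that, assuming 12-inch tiles laid out in a row starting at world center; the helper name is made up, and the only hard fact used is that an inch is exactly 2.54 cm.

```python
from fractions import Fraction

CM_PER_INCH = Fraction(254, 100)  # an inch is exactly 2.54 cm

def tile_offsets_cm(count, tile_in=12):
    """Translate values (in cm) for `count` tiles in a row.

    The multiplication is done in exact fractions, so there is no
    running total in centimeters to drift or mis-add along the way.
    """
    return [float(n * tile_in * CM_PER_INCH) for n in range(count)]

print(tile_offsets_cm(4))  # [0.0, 30.48, 60.96, 91.44]
```

The same list could be typed into the translate field one value at a time, or used for both the X and Z directions of a grid.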

As for the gap between tiles, that would only matter if you're really close to the floor and doing extreme close-ups, and it may not matter at all for most. I don't think any light leakage would show up in a render from a meter off the floor looking out across the room.

Now if only it would get below 32c, so 'using' the comp won't roast me out of the house, lol.

ok, it's been hot, and all I've managed to do is look around at tech. it's been too hot to try to extrude pins for a VRM heatsink in Hexagon, and way too hot to have the R7 running playing with instances. My utmost apologies for not looking into that stuff yet. To be fair, I have yet to even listen to the rest of Thrawn Alliances, that I had planned to do last night.

There are a couple of things that I have been thinking about, and trying to decide if they are even relevant to Daz3D. One of lesser importance is whether or not Intel will start using solder Thermal Interfaces in their CPUs, or continue to use the established Thermal Sludge. The other being the Tensor cores on the new RTX cards vs Iray.

Well, as for the TIM, I remember back before the 8700k launched, that many were saying that Intel would 'need' to use solder because of the extra heat from the extra two cores in the 6 core i7 CPU. I think it was fueled by wishful thinking more than thermal limits. Now there is a rumor that Intel will use solder on the upcoming i9 9900k, because it also has 2 more cores than the 8700k, lol. I don't know for sure if Intel will buckle to people's wishes for a cooler and quieter computer, I do know for sure that as far as TJmax is concerned, the thermal sludge is working perfectly fine on Xeons with way more cores than the 8700k or upcoming i9 series. I will name the E5-2699 V4 from 2016, that has a 145 watt TDP, runs at a modest 2.2GHz, with a chin dropping twenty-two cores with forty-four threads, and costs a budget shocking four thousand dollars (4115usd MSRP). I would think at that price, Intel would do everything they could to keep the customer happy, including soldering the IHS to the die for the best thermal performance, if it was necessary for the 22 core CPU. And it does not look like they did, the E5-2699 V4 appears to be using thermal paste to the best of my knowledge. The i9 9900k is rumored to be a 95 watt part, 95 watts is a far cry from 145 watts. I will conclude from that, and the i9-7900X, that also does not appear to be soldered, that TDP alone is not a motivating factor for Intel to solder the IHS to the die of the upcoming i9 and i7 CPUs. If anything, it's to check a box on the marketing department's list of "Why it's better" promotional points, if they use solder. It would be nice, I will not hold my breath on that, given the past decade of Intel CPUs that have not used solder thermal interfaces.

As for soldering some dies and not others, well, the back of the wafer would need to be treated differently for each process, and it would be a waste to gold plate dies that are not getting soldered. Also, the lower-end Intel chips are the bigger ones with cores disabled, due to variations from edge to edge of the wafer the chips came from. So the backs of all of them will be treated the same before the wafer is cut, tested for bad cores, and binned into various models of CPUs. It is not really practical under those circumstances to solder only some chips to the IHS and not solder others from the same wafer. I'm not saying that you can't slap thermal paste on the back of a chip plated in gold and intended to be soldered to the IHS, I just don't think it's reasonable to waste gold back treated wafers for that. It's not like Der8auer didn't go out of the way to try to solder the IHS to the silicon oxide treated chip that wasn't intended to be soldered, lol. From a selling stuff point of view, it just doesn't make sense if the goal is to make a profit. Usually, either all the chips from the same wafer are soldered, or none of them are.

I would instead choose a CPU based on benchmarks when they come out, and not hold any breathtaking expectations like a paradigm shift in the way Intel does things. On to RTX, well, that's going to be a long one, so perhaps its own post.

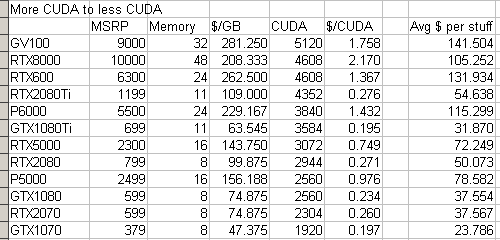

The new RTX cards look nifty at first, however, there has been a lot of speculation, and I do not feel it warrants the claims that I have seen from some. The new Raytracing thing is only going to work for games that are programmed or patched to use it. It will not magically make older applications that do not already have Tensor core support any better, outside of the CUDA core counts. As for the CUDA core counts, they are not that impressive compared to the hike in cost over GTX10 cards.

The increase in price is Stupid for what you are getting, I think, as it is based on an assumption that someday in the future programs may eventually make use of the extra stuff that is not CUDA cores in the Turing chips. As of today, the only programs in the wild that make use of Tensor cores are AI and Deep-Learning scientific applications. If you are using Blender, Adobe Premiere, Iray, Electronic Coin stuff, or even OpenGL desktop applications, none of it has support for Tensor cores yet. It all runs on SM units and CUDA cores. So the majority of what you paid for, you simply will not be able to use until the applications get updated to take advantage of the stuff, 'If' they ever are. It only took the Adobe Premiere development team ten years to update the video encoding system to take advantage of the iGPU that had been in Intel CPUs since the Core 2 Duo days, and Premiere still can't fully use all the cores in a high core count CPU. I do not feel an investment in an RTX graphics card now will be of any value ten years from now, if it takes that long to figure out how to leverage RT and Tensor cores for video or 3D rendering, as by then GPUs will be significantly faster for much less money than what Nvidia is offering today in the RTX20 line.

So what is Nvidia offering? In all honesty, I agree with some others on the Gamers Nexus Discord that the RTX20 is an early adopter card. That is going by what little has been disclosed about how Nvidia's raytracing is now able to 'Almost' achieve real-time ray trace rendering. First and foremost is a point that was mentioned in an interview with Tom Petersen by Steve at Gamers Nexus: the RTX tensor cores are used for a filter that fills in the gaps between ray trace samples, to reduce the number of rays needed to render a scene. The net result is a filter that reduces the computing power needed to render a scene. What we do not know is if this new filter needs a ray trace sample every other pixel in a render, or every tenth pixel in a render. Nonetheless, it is not raytracing every single pixel, unlike 3delight or Cycles or Renderman, etc. So the claimed 10x performance is probably based on what amount of raytracing would need to be done without the DLSS tensor filter, akin to car amplifier watts based on Perceived Sound Pressure Level in a small car rather than actual amps and volts going to the speakers. Because of that, I have a feeling that the actual compute capacity of the RT and Tensor cores on RTX20 cards is not as much as some may think. If they can be used to enhance the render performance of normal render engines without sacrificing the movie quality of the render, that would be nice. I would not expect movie quality renders at 24FPS at 8k res out of the RTX20 cards. Better, Faster, but I don't think realtime production renders will happen yet. A lower quality preview window using RTX would be cool, especially if it does not slam the computer to a halt every time the preview updates.
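To put rough numbers on the "every other pixel vs every tenth pixel" question, here is a minimal back-of-the-envelope sketch. Everything in it is an assumption for illustration only: the function name `rays_per_second` is made up, the 7680x4320 frame size is just the usual meaning of "8k", and one ray per traced pixel is far fewer than a real production render would use. It says nothing about how Nvidia's filter actually picks its samples.

```python
# Hypothetical target taken from the paragraph above: 8K at 24 FPS.
WIDTH, HEIGHT, FPS = 7680, 4320, 24


def rays_per_second(pixel_stride: int, rays_per_traced_pixel: int = 1) -> int:
    """Rays needed per second if only every `pixel_stride`-th pixel is traced.

    Assumption: the filter fills in all the untraced pixels, so they cost no rays.
    """
    pixels = WIDTH * HEIGHT
    traced = -(-pixels // pixel_stride)  # ceiling division
    return traced * rays_per_traced_pixel * FPS


full = rays_per_second(1)
for stride in (1, 2, 10):
    rays = rays_per_second(stride)
    print(f"1 traced pixel in {stride}: {rays:,} rays/s "
          f"({full / rays:.0f}x less work than tracing every pixel)")
```

The point of the sketch is only that the size of the speedup depends almost entirely on how sparse the samples are allowed to be, which is the one number that has not been disclosed.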

Unless Nvidia comes out with a new version of Iray that utilizes the Tensor and RT cores and drops seamlessly into existing versions of Daz Studio, the new RTX cards do nothing for Iray performance outside of the marginal increase in CUDA cores. There was a small note mentioned almost in passing during the RTX20 launch event that may or may not make any significant difference to CUDA performance. Apparently, the new Turing CUDA cores can now do two kinds of operations in parallel in each CUDA core. Whether or not that ends up doing anything meaningful for current CUDA apps is not known yet, and this being a consumer card, I would not be surprised if memory bandwidth limits the benefits of such an architectural improvement in the CUDA cores. AKA, don't expect miracles, and wait for Benchmarks to determine if the hike in price is even worth it. I suspect, like last time with the GTX10 cards, Iray support for the new cards will not happen until a few months after they have been on store shelves anyway.

(edit 28sep2018) Ok, I still feel the price is not worth it, however, it does look like I was wrong in assuming the RTX20 cards would not get Iray/CUDA drivers until a few more months after launch. Apparently Daz3D is working on something, and I am happy to eat my words on that. I still feel the price is a bit steep for not getting 12GB on the RTX2080ti at least, and the same for not having 11GB on the RTX2080non-ti. I am ecstatic that Daz has figured out something for the cards to not be expensive Bricks, thanks Daz.

Seriously, DS can handle that. Seeing Rob W. mention this neat little feature was a revelation. Check out the image if you haven't tried it yet.

And as for metric tiles, well you could make it any size obviously. If you want some statistics, one Russian site lists these common sizes:

Generic ceramic floor tile:

Ceramic granite floor tile (also cm):